Gamer79

Predicts the worst decade for Sony starting 2022

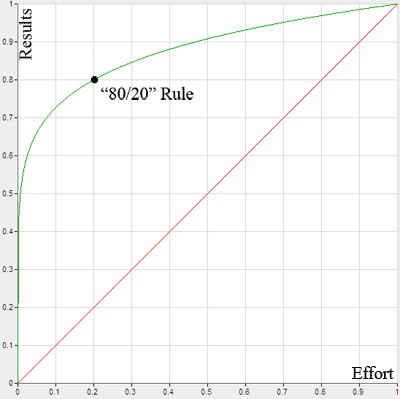

Growing up, each game generation came with massive leaps. Going from the NES to the SNES was massive. Going from the SNES to the N64 was massive. Going from the N64 to the GameCube was massive. Going from the GameCube to the Xbox 360 was massive. Going from the Xbox 360 to the PS4 was pretty big but not so massive. Going from the PS4 to the PS5 is a big hop rather than a giant leap.

The PC side sees the same trend. Going from the GTX cards to the RTX 2000 series was a massive leap. The RTX 2000 series to the 3000 series was a big, big jump. Going from the 3000 series to the 4000 series was a big skip, and now the 4000 series to the 5000 series is kind of a hobble with a crutch to make it look better than it is.

Looks like Moore's Law (look it up if you don't know what that is) is dead. It seems like the days of just adding more horsepower to outdo the previous generation are over, especially when it comes to GPUs. If they keep getting bigger, they are going to require a server tower to house them and a power supply that comes with its own power station to run them. Also, the MSRP on the Big Dawg of GPUs is getting insane. $2000 MSRP?

Looks like the future is going to be in AI upscaling and frame generation. There is only so much power you can pack into a small space, so if we are going to advance, it has to be another way.
