
Baldur's Gate 3 has emerged from a long early access period to critical acclaim and massive success - and after playing it, I can understand why. The game digitises the free-form gameplay of D&D in a way that keeps the spirit of the tabletop role-playing game intact, resulting in some of the most interactive and reactive narrative and gameplay the medium has ever seen. Developer Larian is also using its own engine technology - admirable in an era when even the biggest AAA studios are falling back to Unreal Engine. I've tested the game to break down its visuals and performance, as well as to provide optimised settings for a range of PCs.

Before discussing the game's graphical options, I want to underscore just how well Baldur's Gate 3 carries over the analogue experience of Dungeons and Dragons, from the overt way the dungeon master's narration sneaks into moments as internal thoughts beyond the player's control, to the way you can navigate the environment during encounters, using the terrain and your attributes to emerge victorious. It is all free-form and flows wonderfully, and the amount of choice you have to shape the game your own way is staggering.

My favourite aspect is how Larian has blended analogue elements from the tabletop experience into play where it matters most. In combat, the rules of chance are handled automatically to keep combat as fast and satisfying as possible for a turn-based system, but when it comes to big moments of player action, chance is made overt with a roll of a D20 that you can see spinning in front of you, determining your fate. If you've ever played tabletop games, you know how exhilarating this is - how empowering a natural 20 is and how hilarious it is to see a critical-failure 1 pop up. As I see it, Baldur's Gate 3 is the best translation of the D&D table experience into the digital realm that I have ever played.

With that in mind, it almost doesn't matter that the game's graphics aren't boundary-pushing, relying on familiar techniques that have been used since the days of the PS4: shadow maps, screen-space ambient occlusion and just enough texture and geometry quality to make convincing facsimiles of all the famous archetypes and objects you find in D&D lore.

The technical makeup of the graphics is last-generation, then, but the execution is excellent nonetheless. Characters look great and animate well enough even in dialogue, which is a feat given how much voice-over there is to account for; special effects from attacks are fanciful and detailed, and the areas you traverse can be evocative and beautiful.

Given that the game ships with a Vulkan client, I would love to see its graphical quality raised in meaningful ways over time, if possible. For example, replacing SSAO and indirect lighting with ray-traced equivalents would do magical things for the game's presentation. Even something as simple as ray-traced shadows would help considerably, as the shadows can show obvious flicker and aliasing at times, even at their highest setting.

Speaking of Vulkan, you get a choice of both DirectX 11 and Vulkan modes from the game's launcher - a launcher that performs brilliantly on my Ryzen 5 3600 test PC but oddly slowly on my Core i9 12900K PC. (Thankfully, you can use the launch option --skip-launcher to boot straight into the DX11 version of the game, or --skip-launcher --vulkan to boot straight into Vulkan.)

We've already covered the DX11 vs Vulkan choice for Baldur's Gate 3 in detail, so we'll summarise here. For GPU-limited systems (around 99% GPU utilisation, as recorded in Windows Game Bar > Performance), DirectX 11 is the better choice: it runs around eight percent faster than Vulkan on Nvidia GPUs and 25 percent faster on Intel GPUs, with no appreciable difference on AMD GPUs.

If you're CPU-limited, we still recommend DX11 for Nvidia and AMD GPU owners, as performance in areas with NPCs is around three to five percent higher, though Vulkan is faster in static scenes with these GPUs. Intel GPUs, meanwhile, run 13 percent faster in Vulkan in this CPU-limited scenario. DirectX 11 also benefits from working double- and triple-buffered v-sync, which doesn't work in Vulkan, and my colleague Will Judd also saw crashes on Vulkan that didn't recur in DX11.

Therefore, most AMD and Nvidia users ought to use the DX11 API, especially on more modern CPUs, whereas Vulkan is best reserved for very old CPUs where users are struggling to attain 60fps. For Intel GPU owners targeting 60fps at higher resolutions, DX11 is again better, but for those targeting 120fps at lower resolutions, Vulkan is better.
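To make that decision concrete, here's a minimal Python sketch encoding our API recommendations as a function. The function name and parameters are hypothetical, and the rule is a simplification: it treats "very old CPU struggling to reach 60fps" as a yes/no input and ignores per-scene nuances such as Vulkan being faster in static scenes.

```python
def recommended_api(gpu_vendor: str, cpu_limited: bool,
                    old_cpu_below_60fps: bool = False) -> str:
    """Suggest "DX11" or "Vulkan" for Baldur's Gate 3 (hypothetical helper).

    gpu_vendor: "nvidia", "amd" or "intel" (case-insensitive).
    cpu_limited: True if GPU utilisation stays below ~99% (not GPU-limited).
    old_cpu_below_60fps: True on a very old CPU that can't hold 60fps.
    """
    vendor = gpu_vendor.lower()
    if vendor == "intel":
        # GPU-limited: DX11 ~25% faster. CPU-limited (e.g. chasing 120fps at
        # lower resolutions): Vulkan ~13% faster.
        return "Vulkan" if cpu_limited else "DX11"
    if vendor in ("nvidia", "amd"):
        # DX11 is ~8% faster on Nvidia when GPU-limited (no real difference on
        # AMD) and 3-5% faster in NPC-heavy areas when CPU-limited; it also has
        # working v-sync. Vulkan is only worth it on very old CPUs below 60fps.
        if cpu_limited and old_cpu_below_60fps:
            return "Vulkan"
        return "DX11"
    raise ValueError(f"unknown GPU vendor: {gpu_vendor!r}")


print(recommended_api("Nvidia", cpu_limited=False))  # DX11
print(recommended_api("Intel", cpu_limited=True))    # Vulkan
```

Defaulting to DX11 for Nvidia and AMD mirrors the summary above: only the very-old-CPU case flips the answer for those vendors.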

Here's how DX11 and Vulkan stack up when GPU-limited on an Nvidia, AMD and Intel graphics card respectively. In short: DX11 wins two (Nvidia, Intel) and draws one (AMD), making it a good default choice.

With the API question out of the way, we can enter the main menu. The options here are good enough, with descriptions for each setting often accompanied by an illustration. However, noting which component each setting taxes (CPU, GPU or VRAM) would be a helpful future addition.

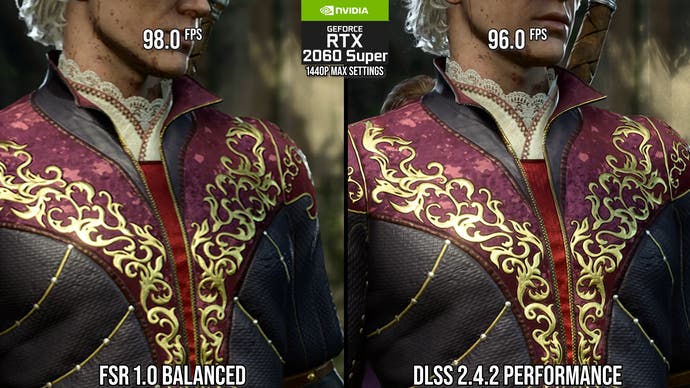

I'm grateful to see DLSS and DLAA here, but those without an RTX graphics card would benefit from the inclusion of FSR 2 and XeSS. Instead, AMD and Intel GPU owners need to rely on FSR 1, although FSR 2 should arrive in September (and a DLSS replacement mod is already available from former DF interviewee PotatoOfDoom).

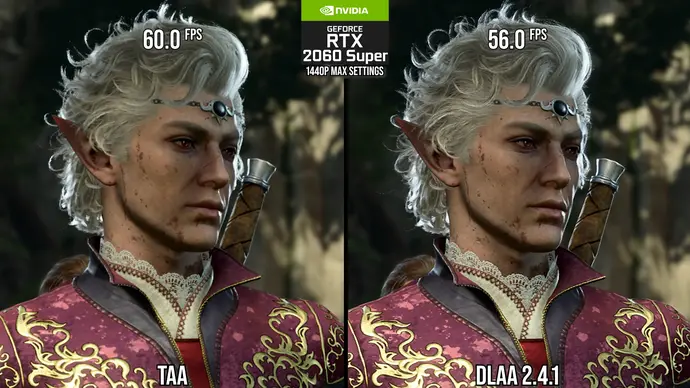

If DLSS and DLAA are an option for you, though, you'll find that they look better than the default TAA at nearly every quality setting, with less blur and ghosting. There are two minor DLSS issues: the first is trails behind hair when moving, and the second is that depth of field is (I think accidentally) tied to internal resolution, such that background objects appear clearer in DLSS performance mode than in DLSS quality mode.
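For context on what "internal resolution" means here, the sketch below computes the resolution DLSS renders at before upscaling. It assumes Nvidia's commonly cited default per-axis scale factors (roughly 67 percent for quality, 58 percent for balanced, 50 percent for performance and 33 percent for ultra performance, with DLAA at native); we haven't confirmed that Baldur's Gate 3 uses exactly these ratios, and the function names are illustrative.

```python
# Assumed per-axis scale factors: Nvidia's commonly cited DLSS 2 defaults.
# Baldur's Gate 3 may use different ratios; these are not taken from the game.
DLSS_SCALE = {
    "dlaa": 1.0,                 # native-resolution anti-aliasing, no upscaling
    "quality": 2 / 3,
    "balanced": 0.58,
    "performance": 0.5,
    "ultra_performance": 1 / 3,
}


def internal_resolution(width: int, height: int, mode: str) -> tuple[int, int]:
    """Resolution rendered before DLSS upscales to width x height."""
    scale = DLSS_SCALE[mode]
    return round(width * scale), round(height * scale)


def pixel_share(mode: str) -> float:
    """Fraction of output pixels actually rendered (the scale applies per axis)."""
    return DLSS_SCALE[mode] ** 2


for mode in DLSS_SCALE:
    w, h = internal_resolution(2560, 1440, mode)
    print(f"{mode:>17}: {w}x{h} ({pixel_share(mode):.0%} of output pixels)")
```

At 1440p, quality mode works out to roughly 1707x960 and performance mode to 1280x720, so any effect that scales with internal resolution, as depth of field appears to here, is computed on a noticeably different pixel grid in each mode.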

FSR 1 can't really compare to DLSS 2, given that the latter draws on temporal data from previous frames while FSR 1 is purely spatial, so it would have been nice to see FSR 2 (and XeSS) included at launch for users of non-Nvidia graphics cards.

The game's DLAA looks better than the default TAA, making it worth enabling given its relatively low performance cost.

Altogether, the technical user experience in Baldur's Gate 3 is good - options are adequate and you even get some niceties like seamless UI and camera style changes when you switch between mouse-and-keyboard and controller. Still, I'd like to see these DLSS issues cleared up and an official FSR 2 implementation as quickly as possible.

Baldur's Gate 3 isn't particularly heavy on the CPU or GPU: it runs maxed out at 1440p on an RTX 2060 Super, a four-year-old mid-range GPU, at between 40 and 60fps while using 4.5GB of VRAM. In this scenario, enabling DLSS quality mode is enough to achieve a stable 60fps. Still, not everyone has a GPU of this calibre or access to DLSS, so optimised settings are worth detailing, with the goal of reasonable performance gains without too harsh an impact on visual quality.

Taking the options in turn, dropping fog quality to high provides a small two percent performance advantage with negligible visual difference, while medium shadow quality is a more noticeable drop that pays for itself with a nearly 10 percent frame-rate gain. Circular depth of field is the best-looking option and worth keeping, but reducing its quality to quarter (without denoise) provides a three percent performance advantage with only a minor visual setback.

| Name | Optimised Setting |
|---|---|
| Model Quality | High* |
| Detail Distance | High |
| Instance Distance | High |
| Texture Quality | Ultra** |
| Texture Filtering | Anisotropic x16 |
| Animation Level of Detail | High |
| Slow HDD Mode | Off |
| Dynamic Crowds | On |
| Shadow Quality | Medium |
| Cloud Quality | Ultra |
| Fog Quality | High |
| Depth of Field | Circular |
| Depth of Field Quality | Quarter |

\* Drop to Medium if you can't achieve 60fps.

\*\* Ultra on modern GPUs with more than 4GB of VRAM.

Beyond this, there are few performance wins that come without oversized visual penalties. Model quality arguably falls into this category: dropping it to medium does provide six percent better performance, albeit with more noticeable pop-in and reduced detail on distant objects. I'd suggest trying high first, but consider dropping to medium if you can't achieve 60fps. The remaining settings are largely useful only for very old PCs, with relatively limited scaling on settings like detail distance and cloud quality, which are best left on their highest settings. Similarly, animation level of detail and slow HDD mode didn't noticeably affect performance or visuals, even on my low-end system. Lastly, texture quality can be kept on ultra on modern GPUs with more than 4GB of VRAM.
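The optimised settings table and its two caveats can be captured as a small preset. This Python sketch is purely illustrative: the dictionary keys mirror the menu labels in our table, but the function and its inputs are hypothetical. Because we only vouch for ultra textures on GPUs with more than 4GB of VRAM without naming a specific fallback, the sketch omits a texture recommendation below that threshold rather than guessing one.

```python
# Optimised settings from the table, keyed by the in-game menu labels.
OPTIMISED_SETTINGS = {
    "Model Quality": "High",
    "Detail Distance": "High",
    "Instance Distance": "High",
    "Texture Quality": "Ultra",
    "Texture Filtering": "Anisotropic x16",
    "Animation Level of Detail": "High",
    "Slow HDD Mode": "Off",
    "Dynamic Crowds": "On",
    "Shadow Quality": "Medium",
    "Cloud Quality": "Ultra",
    "Fog Quality": "High",
    "Depth of Field": "Circular",
    "Depth of Field Quality": "Quarter",
}


def optimised_settings(vram_gb: float, reaches_60fps: bool = True) -> dict[str, str]:
    """Hypothetical helper applying the two caveats from the text."""
    settings = dict(OPTIMISED_SETTINGS)  # copy so the base preset stays intact
    if not reaches_60fps:
        # Medium models: ~6% faster, at the cost of more pop-in and less
        # detail on distant objects.
        settings["Model Quality"] = "Medium"
    if vram_gb <= 4:
        # Ultra textures are only recommended above 4GB of VRAM; no specific
        # fallback is given, so leave this setting for the user to choose.
        del settings["Texture Quality"]
    return settings


for name, value in optimised_settings(vram_gb=8).items():
    print(f"{name}: {value}")
```

Copying the base dictionary keeps the published preset unchanged no matter which caveats a given PC triggers.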

With that said, most of our optimised settings remain at their highest options as the game is so light in general, but we still derive a 20 percent boost to average performance in the scene we tested - welcome given how little visual fidelity we've sacrificed.
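To put a 20 percent average boost in perspective, here's a quick bit of frame-rate arithmetic in Python. The 50fps starting point is a hypothetical figure picked from within the 40 to 60fps range seen on the RTX 2060 Super at maxed 1440p settings, not a measurement from the tested scene.

```python
def boosted_fps(base_fps: float, gain_percent: float) -> float:
    """Average frame-rate after a percentage performance gain."""
    return base_fps * (1 + gain_percent / 100)


def frame_time_ms(fps: float) -> float:
    """Milliseconds spent on each frame at a given frame-rate."""
    return 1000.0 / fps


base_fps = 50.0  # hypothetical starting average, not a measured value
optimised_fps = boosted_fps(base_fps, 20)

print(f"{base_fps:.0f}fps -> {optimised_fps:.0f}fps")
print(f"{frame_time_ms(base_fps):.2f}ms -> {frame_time_ms(optimised_fps):.2f}ms per frame")
```

In this example, a 20 percent frame-rate gain lifts 50fps to 60fps, which corresponds to cutting frame time from 20ms to about 16.7ms, a reduction of roughly 17 percent rather than 20.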

Baldur's Gate 3 is a great game, then, and unlike many recent PC releases, it launches in a polished state with no game-breaking issues, putting the technical quality of other big AAA releases to shame. I wish it did a few things better, like shipping FSR 2 at launch, fixing a few minor DLSS issues and improving frame-times when traversing NPC-populated areas on lower-end CPUs like the Ryzen 5 3600. Other than these minor aspects, though, Larian has done a fine job on the technical side - and that's a genuine pleasure, given just how well this clear game-of-the-year candidate has been realised.

Baldur's Gate 3 PC tech review: polish that puts other AAA games to shame

Baldur's Gate 3 is a game-of-the-year contender - but how does its PC release stack up in terms of visuals and performance, and what are the optimised settings?
