Nickolaidas

Okay, so I am thinking of upgrading my RTX 4060 Ti 8GB to an RTX 4070 Ti Super 16GB (double the TFLOPS, double the VRAM).

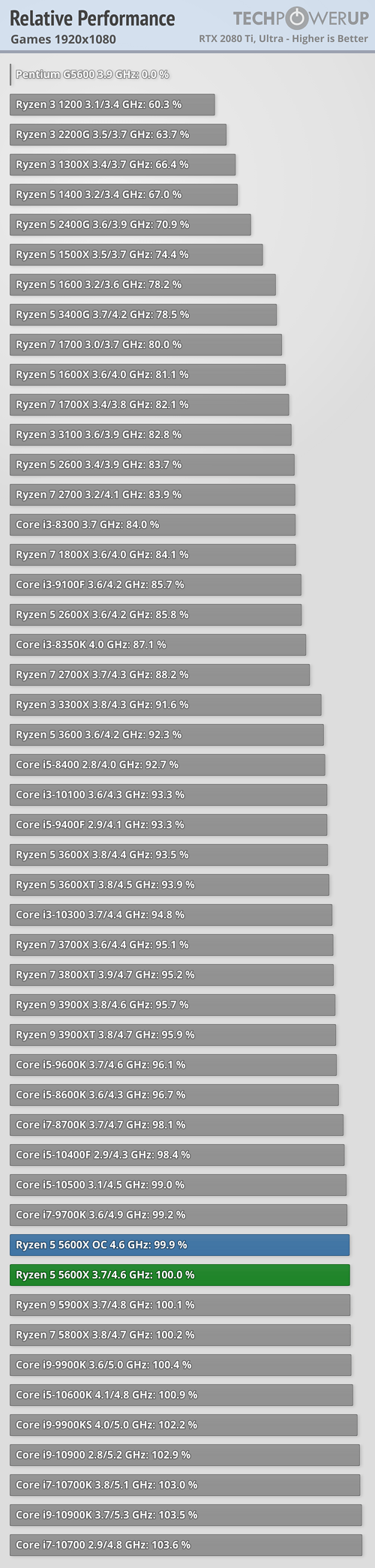

The problem is that I have an i7-9700K CPU, which would bottleneck that GPU quite a lot (30% at 4K, 44% at 1440p), according to the Bottleneck Calculator website my big brother frequents ( https://pc-builds.com/bottleneck-calculator/ ).

Right now I can't change my CPU, because I would also have to upgrade my Aorus Z390 motherboard (as well as its memory), which effectively turns my $1000 upgrade into a $2200 one (basically a new PC at that point).

So I am wondering how much of a bottleneck I would get if I used, say, DLSS Quality in a game.

In that case, where the game renders at 1440p and upscales the image to 4K (which is what DLSS Quality does, right?), would the bottleneck be 30% or 44%? Note that I cap the framerate at 60fps because of my TV's limitations.
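On the DLSS part of the question: the upscaling factor applies per axis, not to the total pixel count. Here is a minimal sketch, assuming the commonly cited per-axis scale factors for DLSS presets (Quality renders at 2/3 of the output width and height, Performance at 1/2). Individual games can override these, so treat it as an approximation. `DLSS_SCALE` and `render_resolution` are illustrative names, not part of any NVIDIA API.

```python
from fractions import Fraction

# Assumed per-axis render scale for each DLSS preset (commonly cited values;
# a given game may use different ones).
DLSS_SCALE = {
    "Quality": Fraction(2, 3),
    "Performance": Fraction(1, 2),
}


def render_resolution(output_w: int, output_h: int, preset: str) -> tuple:
    """Return the (width, height) the GPU renders before DLSS upscales to the output."""
    scale = DLSS_SCALE[preset]
    # round() on a Fraction returns an int, so the result is whole pixels.
    return round(output_w * scale), round(output_h * scale)


if __name__ == "__main__":
    for preset in DLSS_SCALE:
        w, h = render_resolution(3840, 2160, preset)
        print(f"4K output, DLSS {preset}: renders at {w}x{h}")
```

With a 3840x2160 output, the Quality preset works out to a 2560x1440 internal resolution, which matches the "1440p upscaled to 4K" description above.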

And an extra question: is there a middle ground I can reach? A better CPU than my i7-9700K that is still compatible with my Z390 Aorus motherboard?

EDIT: Some people on the NVIDIA forums tell me that the bottleneck numbers the website gives are bogus, and that at 4K with a 60fps cap, the game is almost entirely GPU-bound, so the CPU barely bottlenecks it. Is that true?
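One rough way to frame the EDIT question: every frame has to be prepared by the CPU and then rendered by the GPU, so the delivered frame rate is roughly the slower of the two, further limited by any frame cap. The sketch below assumes that simplified model and treats the CPU's frame rate as independent of resolution. It is not how the pc-builds.com calculator computes its percentages, and the fps numbers in the example are hypothetical placeholders, not benchmarks of the i7-9700K or the 4070 Ti Super.

```python
from typing import Optional


def delivered_fps(cpu_fps: float, gpu_fps: float, fps_cap: Optional[float] = None) -> float:
    """Simplified model: the slowest of CPU, GPU, and frame cap sets the frame rate."""
    limits = [cpu_fps, gpu_fps]
    if fps_cap is not None:
        limits.append(fps_cap)
    return min(limits)


def limiting_factor(cpu_fps: float, gpu_fps: float, fps_cap: Optional[float] = None) -> str:
    """Name the component that sets the frame rate (ties go to the cap, then the GPU)."""
    if fps_cap is not None and fps_cap <= min(cpu_fps, gpu_fps):
        return "frame cap"
    return "CPU" if cpu_fps < gpu_fps else "GPU"


if __name__ == "__main__":
    # Hypothetical numbers for illustration only.
    cpu = 90  # frames/s the CPU could prepare (assumed roughly resolution-independent)
    scenarios = {
        "4K native": 55,      # hypothetical GPU frames/s at native 4K
        "4K DLSS Quality": 85,  # hypothetical GPU frames/s rendering at 1440p
    }
    for label, gpu in scenarios.items():
        fps = delivered_fps(cpu, gpu, fps_cap=60)
        print(f"{label}: {fps:.0f} fps, limited by {limiting_factor(cpu, gpu, 60)}")
```

Under this model, the CPU only matters once the frames per second it can prepare drop below both the GPU's rate and the 60fps cap. Whether that happens with an i7-9700K depends on the specific game, which is why measured fps in the games you actually play tell you more than a single percentage.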