Insane Metal


I believe the higher the resolution, the better. When 1080p content started to appear, a lot of people said it was a waste, that HD was good enough. And we keep seeing this over and over again.

4K is a cop-out. Any game looks good in 4K with HDR on full.

I would rather they stick with 1080p, give me 120fps+ and bring back some fucking physics, destruction and swarms of enemies.

Last gen (over ten years ago!) had Ninety-Nine Nights and Battlefield: Bad Company 2. One had swarms of enemies, one had amazing destruction.

Nothing this gen has come close, yet we're chasing 4K? What's the point?

I don't see 4K as a waste; it's more that devs are jumping too soon.

The difference being that going from a 250GB HDD to a 1TB HDD doesn't require an exponential increase in computational power to drive such capacities. What's after 4K? 8K? Yeah, good luck drawing nearly 2 billion pixels per second (for 8K at 60FPS). Transistors can't get much smaller; they're already approaching atomic scales. Moore's Law is failing us, and the computational gains are drying up. You have to draw the line somewhere.

Are you really seriously arguing that 1080p and 1440p are good enough?!!!! That is like fucking arguing that a 250GB HDD was enough storage or that 1MB of RAM was good enough. Fuck that.
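The 8K figure can be checked with simple arithmetic: pixels per frame times frames per second. A minimal sketch, assuming the standard 16:9 resolutions listed below and counting each output pixel once (no overdraw, MSAA or supersampling, so real shading work is higher):

```python
# Pixel throughput = width x height x frames per second.
# Assumes standard 16:9 output resolutions and counts each output pixel once
# (no overdraw, MSAA or supersampling).
RESOLUTIONS = {
    "1080p": (1920, 1080),
    "1440p": (2560, 1440),
    "4K": (3840, 2160),
    "8K": (7680, 4320),
}


def pixels_per_second(width: int, height: int, fps: int) -> int:
    """Number of output pixels that must be produced every second."""
    return width * height * fps


if __name__ == "__main__":
    for name, (w, h) in RESOLUTIONS.items():
        rate = pixels_per_second(w, h, 60)
        print(f"{name} @ 60 fps: {rate:,} pixels/s (~{rate / 1e9:.2f} billion)")
```

At 60 fps this gives 1,990,656,000 pixels per second for 8K, four times the 4K figure and sixteen times 1080p.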

What is crippled here exactly?

Not a god damn thing.

How has resolution negatively impacted consoles? It hasn't. The performance profiles of the 4K machines are still better than those of the base systems, and they're pushing resolutions several times higher.

Yeah, well, help me find that $1,000+ Sony FW900 and then we'll talk.

Dude, Quantum Break barely runs at a stable 30 fps, and the same goes for Red Dead Redemption at ultra settings. It's janky as fuck gameplay vs. perfectly smooth gameplay at 1080p and barely 60 fps at 1440p.

You sacrifice a shit ton of performance that could go toward visual quality. 100+ fps at 1080p > any other solution. 1440p at 60 fps is a nice trade-off if you care about pixel quality, but that's it as far as today's GPU offerings go, especially in newer games.

The shitty 4K resolutions people advertise only work in games that barely push visuals forward. Even Final Fantasy XIV runs here at ~200 fps, super smooth, on a 1080 Ti at 1080p, and ~100 fps at 1440p; 4K = a janky sub-60 fps experience with loads of input lag. And this game is far from pushing visuals forward.

The millisecond figures also go up a metric ton: 80-90 fps at ultra settings with 4 ms, vs. 35 fps with 30 ms in Quantum Break. Yeah, good luck with that.
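Frame rate and average frame time are reciprocals, which helps put the numbers in that post in context. A minimal sketch; it only converts fps to the average time per frame and says nothing about input lag, which also depends on the display and the game's render pipeline:

```python
def frame_time_ms(fps: float) -> float:
    """Average time budget per frame, in milliseconds, at a given frame rate."""
    if fps <= 0:
        raise ValueError("fps must be positive")
    return 1000.0 / fps


if __name__ == "__main__":
    # Frame rates quoted in the post above.
    for fps in (35, 80, 90):
        print(f"{fps} fps -> {frame_time_ms(fps):.1f} ms per frame")
```

At 35 fps each frame takes about 28.6 ms, close to the ~30 ms quoted; at 80-90 fps the budget is about 11-12.5 ms per frame, so the 4 ms figure presumably refers to a different metric than average frame time.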

Call me when you can run 144 fps at 4K on ultra settings. Oh wait, there's nothing out there that can remotely run it. 4K is a waste of time, and that's why practically nobody cares about it; even screen vendors know it on the PC side.

Consoles also get negatively impacted by it, for the simple fact that if they had put a lesser GPU and a better CPU in that box, you would actually have gotten 60 fps at 1080p, instead of this useless chase after sub-par pixels and settings that can barely run modern games at playable framerates. Hell, even that Sony dev mentioned this: they needed far more performance to actually make 4K work, and are the resources spent well then? Nope.

This is why I mentioned that with the Xbox One X they should have cut that GPU down to a 4 TFLOP one and rammed a cheapo Ryzen in the box. It would have played all games at a higher resolution than the Xbox One, and at 60 fps to boot.

Sadly they didn't, because of exactly what this guy mentions in the article: they're chasing this dumb-ass 4K dream, which basically resulted in these garbo boxes that do everything halfway.

PC gamers already knew 4K was a pipe dream when those boxes were announced, and frankly 4K is still brutal and will stay brutal unless game developers stop pushing visuals forward, which they haven't; Control is a good example of that.

10 fps lows on PS4, and it barely runs at a stable 60 fps on ultra at 1080p on the fastest video card. Ray tracing kills its performance entirely, if you care about that. Where's 4K? Yeah, a pipe dream.

All of those shots are from the Xbox One X, and no, they couldn't just shove a Zen CPU in there given the amount of time they had to work with, the costs, and compatibility testing.

The X was never designed to be a gross departure from the base system. Its intent was to take the results seen on the base system, essentially scale them 4x, and, if there was still surplus compute, use it to further steady framerates or add graphical flair. The X operates within the exact parameters it was designed for; it does what it was intended to do: take the Xbox One experience to a 4K display and steady it out.

Nothing was lost going from the Xbox One to the Xbox One X, and nothing was lost going from the PlayStation 4 to the PlayStation 4 Pro. It was a net gain on all fronts: resolution, performance, sometimes higher settings, etc.

I read it just fine. The whole assertion here is that chasing 4K produces some kind of net loss, and it doesn't whatsoever.

So you don't read, ain't going to explain it again.


Nope. 3-5 nm is about the limit, and quantum computing isn't useful for this kind of task. Unless there is some major technological advancement, or we go back to making computers bigger and bigger, we just don't have far to go, I'm afraid.

No such line is ever going to be drawn when it comes to technology; they will find a way.

Not even TV broadcasters and Blu-ray manufacturers caught on with 4K. In fact, people still use analog TVs with digital TV boxes via SCART.

"1080p gaming, it's useless," says a developer who doesn't want to put effort into developing 1080p games. 4K will become the industry standard as time passes, and the next developer will be saying "8K gaming, it's useless."

Nothing to LOL about. Case in point:

I totally agree with him, 4K is seriously useless. I happily play at 1080p@60fps on my RTX 2080 in any (ultra-modded) game.

1440p doesn't look awful, c'mon...

I can't believe we are still having this lousy conversation on the cusp of 2020!

There are a lot of claims being made in this thread that I think are incorrect.

Other Thoughts:

- GPU and CPU are NOT interchangeable. You can't simply drop a 4K game to 1080p and expect it to hit 60 fps

- 1440p is a non-starter, as it looks awful and is unsupported on 4K TVs, since 1440 does not divide evenly into 2160

- 4K is not simply "emerging" now: we have had 4K-ready consoles for 2-3 years and 4K TVs for 5-6 years

- 8K is far off; 4K is a natural "landing zone" for the foreseeable future. Next-gen consoles will easily hit 4K if the upgraded consoles can do so now (some games even natively)

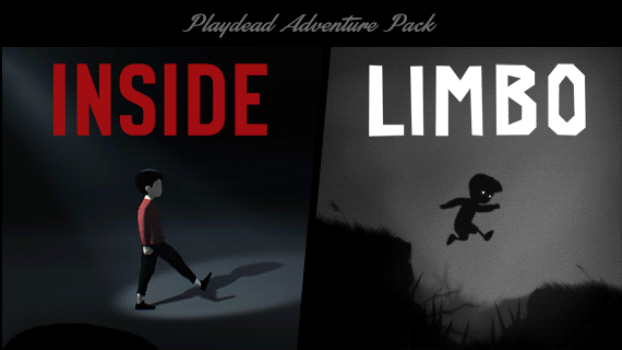

- Of course this guy thinks 4K is wasted; his games are indie games with low-quality graphics.

- Of course the console manufacturers are pushing technology, they have different goals than an indie game dev. Part of their goals are (wait for it, this is really groundbreaking news) to sell...hardware. [Gasp! The horror!]

- Clearly this argument is split on peecee vs. console. PC users sit less than 20" from their monitor, so framerate is more critical, and the difference between 4K and 1080p is largely irrelevant because of the tiny screen size. Console users have large TVs, where resolution absolutely matters, and the depth of field is very different: the edges of peripheral vision are outside the TV borders.

- Can you even purchase a non-4K TV bigger than 30" today?
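The "non-integer divisor" point is simple arithmetic: 2160 / 1440 = 1.5, so each 1440p pixel has to be spread over one and a half panel pixels and the scaler must interpolate, whereas 1080p and 720p map onto exact 2x2 and 3x3 blocks. A minimal sketch of that ratio check; whether a particular TV actually uses clean integer scaling for 1080p depends on its scaler, which this code does not model:

```python
from fractions import Fraction

UHD_HEIGHT = 2160  # vertical resolution of a 3840x2160 "4K" TV


def scale_factor(source_height: int, target_height: int = UHD_HEIGHT) -> Fraction:
    """Exact ratio by which each source line must be stretched to fill the target."""
    return Fraction(target_height, source_height)


def is_integer_scale(source_height: int, target_height: int = UHD_HEIGHT) -> bool:
    """True if every source pixel maps onto a whole block of target pixels."""
    return target_height % source_height == 0


if __name__ == "__main__":
    for h in (720, 1080, 1440):
        f = scale_factor(h)
        print(f"{h}p -> 2160p: x{float(f):.2f}, integer: {is_integer_scale(h)}")
```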

I happily play on an Xbox One X, so the Nintendo Switch must be useless, according to this logic.

People still ride horses and bicycles, but they aren't the default mode of transportation today.

People still hunt, but most people go to a grocery store.

"People" still believe that vaccinations are bad, but modern medicine strongly advises their use (and the majority follow that recommendation).

Just because some people do things the "old way", we should never consider newer alternatives? Note that I am not saying it is mandatory, just as I wouldn't outlaw horses or hunting (though anti-vaxxers need to be publicly shamed).

It's not worthless whatsoever.

4K is useless right now. But it won't be once the next gen of consoles comes out with the hardware to support it better.

I don't put much stock in what he says, though, because he just sounds like a whiner trying to get some attention. He completely ignores the fact that you don't have to make 4K games. Undertale isn't 4K, Blasphemous isn't 4K, God of War isn't 4K; tons of games aren't 4K but sold well and are good games. But some games are cool with it. That's why we have so many games: there is something for everyone.

Did we really need HD games? No, because the games would still be exactly the same, just not as good-looking, and yet we all want them.

And he can choose his own path, which they have done; no one stopped them. No tech vendor said "make 4K games or I'll kill you." In fact, I'd dare say he hasn't been approached by tech vendors at all, but he's making it sound like he has.

This guy is pretty much someone I'll just ignore from now on, because this means nothing to anyone.

According to my logic, I'm saying the opposite.

The hilarious thing is, you hit the mark. There are people who still think 1080p is useless. Not only that, but given the chance they'd play at 720p or lower because somehow it's more immersive.

Yep. What a weird thing to say.

Ugly girls can look pretty too if you're far enough away.

(repeats same fact about how fine details are lost at distance, and how 1080p and 4K will appear the same when viewed beyond a certain threshold)
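The distance argument is usually framed in pixels per degree of visual angle. A common rule of thumb is that 20/20 vision resolves about 1 arcminute, i.e. roughly 60 pixels per degree, and pixels packed more densely than that are hard to distinguish. A minimal sketch, assuming a flat 16:9 screen viewed head-on and averaging across its width; the 55-inch TV at 8 feet in the example is a hypothetical setup, not one taken from the thread:

```python
import math


def pixels_per_degree(diagonal_in: float, horizontal_px: int, distance_in: float,
                      aspect_w: float = 16.0, aspect_h: float = 9.0) -> float:
    """Average horizontal pixels per degree of visual angle for a flat screen
    viewed head-on from distance_in inches away."""
    width_in = diagonal_in * aspect_w / math.hypot(aspect_w, aspect_h)
    # Horizontal angle the screen occupies in the viewer's field of view.
    fov_deg = math.degrees(2 * math.atan((width_in / 2) / distance_in))
    return horizontal_px / fov_deg


# Rule of thumb: ~1 arcminute acuity for 20/20 vision -> ~60 pixels per degree.
ACUITY_PPD = 60

if __name__ == "__main__":
    # Hypothetical setup: a 55" 16:9 TV viewed from 8 feet (96 inches).
    for name, px in (("1080p", 1920), ("4K", 3840)):
        ppd = pixels_per_degree(55, px, 96)
        print(f"{name}: {ppd:.0f} px/deg, above ~{ACUITY_PPD} px/deg: {ppd > ACUITY_PPD}")
```

In this model, sitting closer or using a bigger screen lowers the pixels-per-degree figure, which is where 4K's extra detail starts to become visible; sitting farther back raises it, which is the "far enough away" effect.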

Does 4K look better? Yes. Does it look good enough for me to accept about 1/3 the framerate? No. (A game that is hitting 120FPS at 1080p will typically drop to about 40FPS at 4K.) If people want this retarded race to the top of the frametime graph then they're welcome to it, but don't expect the rest of us to cheer and clap as developers consistently cripple the playability of their games on console for the sake of "muh 4k".
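As a rough sanity check on the 1/3 figure: 4K has exactly four times the pixels of 1080p, so a game limited purely by pixel throughput would drop from 120 fps to 30 fps, and a drop to only about 40 fps implies that part of each frame's cost doesn't scale with resolution. The sketch below uses a hypothetical two-part cost model (a resolution-independent fraction of frame time plus a part proportional to pixel count); it is a simplification, not how any particular engine behaves:

```python
def estimate_fps(base_fps: float, base_pixels: int, target_pixels: int,
                 fixed_fraction: float = 0.0) -> float:
    """Estimate frame rate at a new resolution.

    Hypothetical model: fixed_fraction of the base frame time does not depend
    on resolution (e.g. CPU/simulation work); the rest scales linearly with
    pixel count.
    """
    if not 0.0 <= fixed_fraction <= 1.0:
        raise ValueError("fixed_fraction must be between 0 and 1")
    base_time = 1.0 / base_fps
    scale = target_pixels / base_pixels
    new_time = base_time * (fixed_fraction + (1.0 - fixed_fraction) * scale)
    return 1.0 / new_time


P1080 = 1920 * 1080
P2160 = 3840 * 2160  # exactly 4x the pixels of 1080p

if __name__ == "__main__":
    print(f"pixel-bound only:   {estimate_fps(120, P1080, P2160):.1f} fps")
    print(f"1/3 of frame fixed: {estimate_fps(120, P1080, P2160, 1 / 3):.1f} fps")
```

With `fixed_fraction=0` the estimate is 30 fps; with a third of the 1080p frame time treated as fixed, it comes out at 40 fps, matching the figure in the post.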

Forza Horizon 2 1080p

Forza Horizon 3 4K

Both cars cropped (Not zoomed)

The framerate is untouched here, so what's the argument now?

You're ignoring that the X could quite possibly push past 60FPS if it wasn't running at 4K. It definitely would've been able to if they hadn't intentionally hobbled it with a shit CPU to save a buck. They wouldn't have done that if plebeians had made it clear that 30FPS was unacceptable. The higher the framerate, the smoother the motion looks, the more responsive it feels and the better it feels to play. These benefits extend well beyond 60FPS.

It can push 60 FPS, but at 1080p, and many sacrifices need to be made graphically; Horizon 4 shows this.


What's the point here though? Is a 4K take on our existing console generation in any way diminishing that current generation experience? No, it's enhancing it.

I have something I'd like to introduce you to. You may pick any point within this triangle... but... you can only prioritize one thing, and only one of these things is actually important to gameplay. You may not care about it this generation... but if you think you're getting 4K60 with drastically improved graphical fidelity next-gen, you're dead wrong... so you might want to make your choice now, before developers (and publishers... publishers just LOVE shiny screenshots) choose for you.

It can push 4K but at 30FPS and many sacrifices need to be made graphically, Horizon 4 shows this.

The question is: why not move forward to something more worthwhile? Better physics, AI, draw distance, RAY TRACING, poly count, blah blah blah.

1080p crippled consoles last gen, we should have stayed at 720p. Then again, 720p crippled some last-gen games too. 480i is maybe best. I remember some games dropping frames at 240p when in 60 Hz, I think 50 Hz is fine. Computers couldn't push more than 256 colours. Why bother moving forward?

There's a certain resolution threshold beyond which native or not is basically imperceptible. I'd say north of 3072x1728 is that resolution: below it you can pick up that it's not quite native, but at or beyond it you'd be hard-pressed to ever tell.
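For reference, the proposed threshold is 80% of 4K on each axis, which works out to 64% of the pixels. A minimal sketch of that arithmetic; it only computes the ratios and says nothing about how well a given upscaler reconstructs the missing detail:

```python
NATIVE_4K = (3840, 2160)


def fraction_of_native(width: int, height: int, native: tuple = NATIVE_4K):
    """Return (per-axis scale, share of native pixel count) for a render resolution."""
    native_w, native_h = native
    per_axis = width / native_w
    pixel_share = (width * height) / (native_w * native_h)
    return per_axis, pixel_share


if __name__ == "__main__":
    axis, share = fraction_of_native(3072, 1728)
    print(f"3072x1728: {axis:.0%} per axis, {share:.0%} of 4K's pixels")
```

So a game rendering at 3072x1728 shades 36% fewer pixels per frame than native 4K.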