ResurrectedContrarian

Suffers with mild autism

I'd define the "AI doomer" position as a combination of two beliefs:

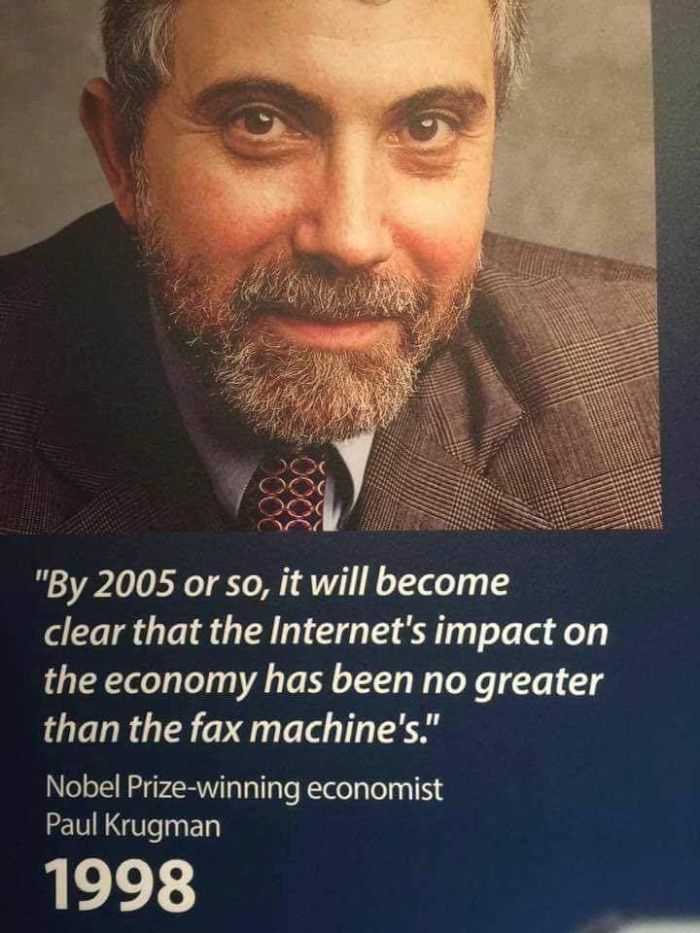

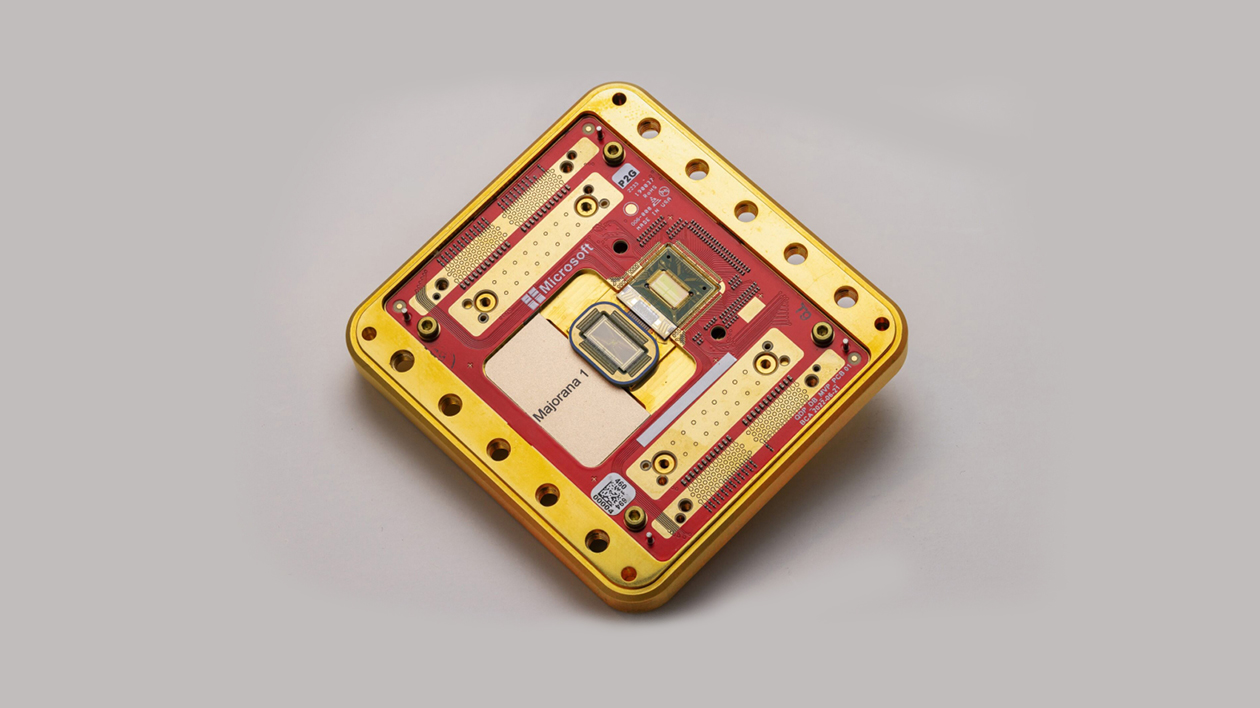

1. AI is powerful, and will soon become even more powerful; it's not just hype

2. Its effects will cause major economic or social upheavals, with highly unpredictable dangers

I'm close to the doomer position. I firmly believe (1). As for (2), I'm hopeful it won't turn out that way (I hope the economy will adjust as AI advances, and that new opportunities will outweigh the destruction), but I'm nonetheless a bit fearful of the future and of what my kids will have to deal with in a few years.

In this thread, debate your level of AI doom. How bad will it get? Or is it all upside? Or are you simply a skeptic of the tech in general?