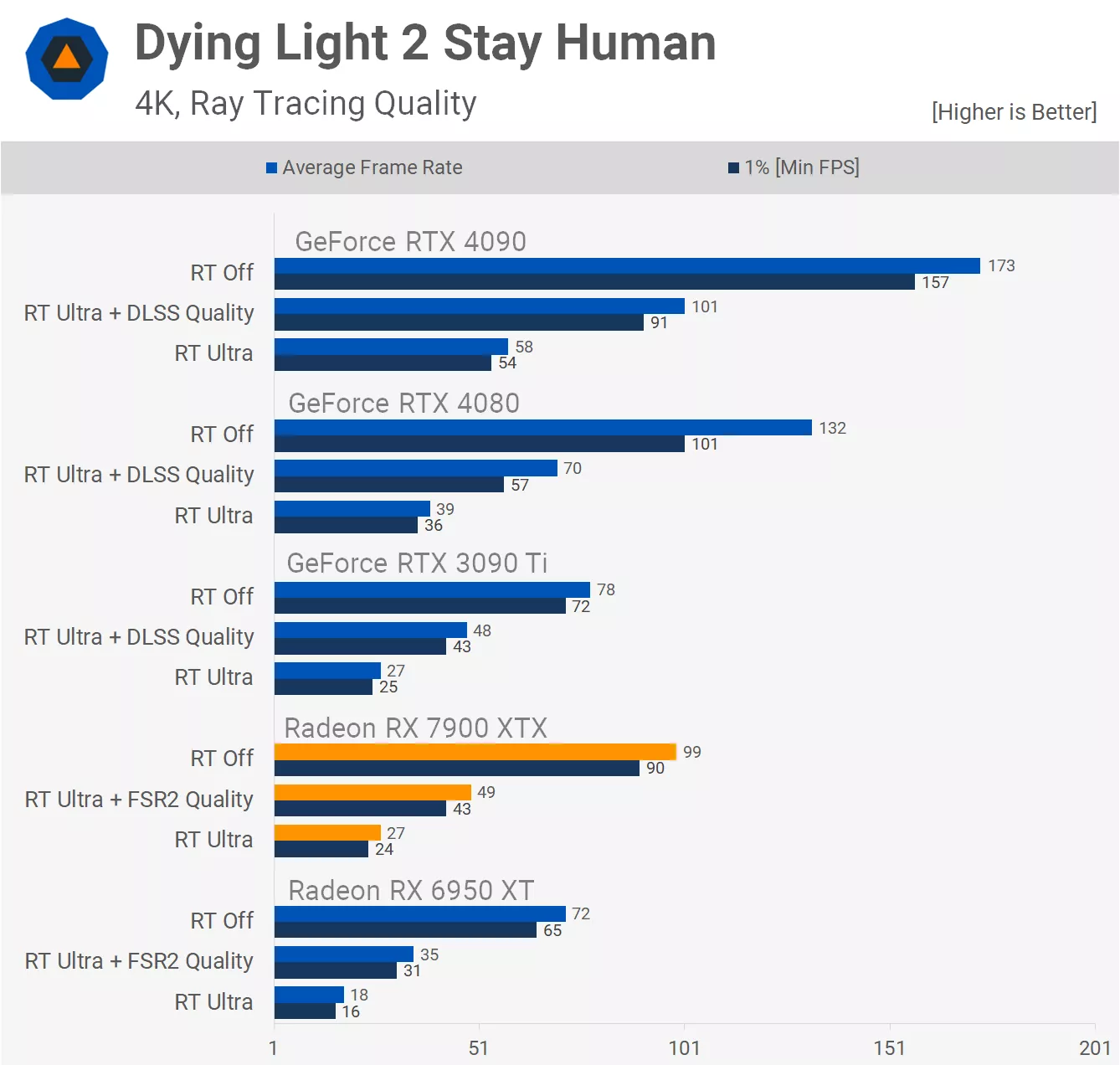

Because averaging is insanely misleading and doesn't take the distribution into account. The 7900 XTX has a sizeable advantage in rasterization, on the order of 15%. For it to end up neck-and-neck with the 3090 Ti on average means that 15% lead gets completely wiped out by a random sample of like 5 games. However, the performance impact of ray tracing varies enormously on a per-game basis, as does the implementation, so just taking an average of a small sample of games is incredibly flawed. And you two are also incorrect in stating that the 3090 and 3090 Ti were said to suck following the release of the 7900 XTX. They still obliterate it when you go hard with the ray tracing.

In Alan Wake 2, the 3090 Ti outperforms it by a whopping 19% with hardware ray tracing turned on. This is despite the 7900 XTX being 18% faster without it.

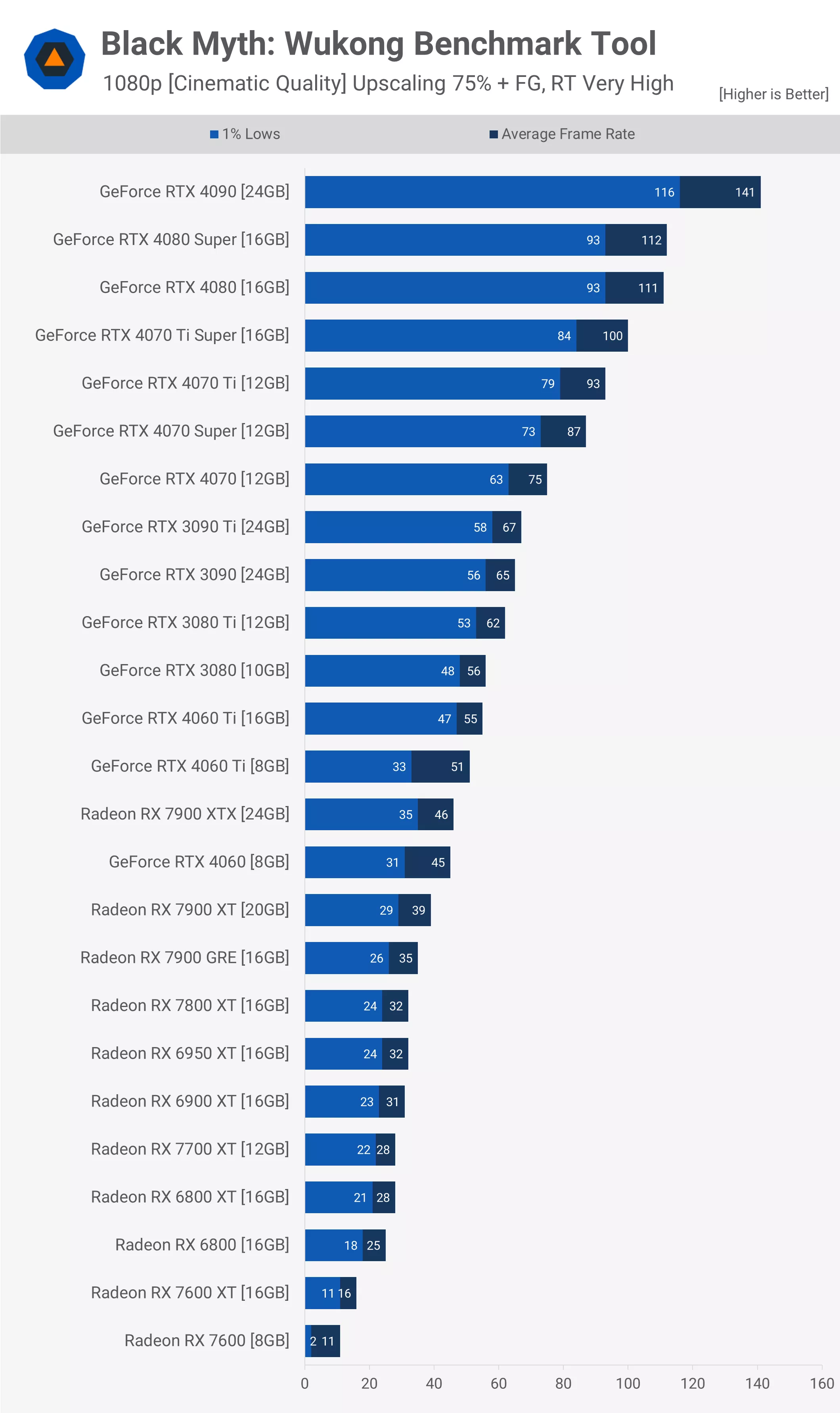

In Black Myth Wukong, the 3090 Ti leads it by 46% at 1080p, max settings. I'm using TechSpot here because it doesn't seem like TechPowerUp tested AMD cards.

In Rift Apart, the 7900 XTX loses by 21%.

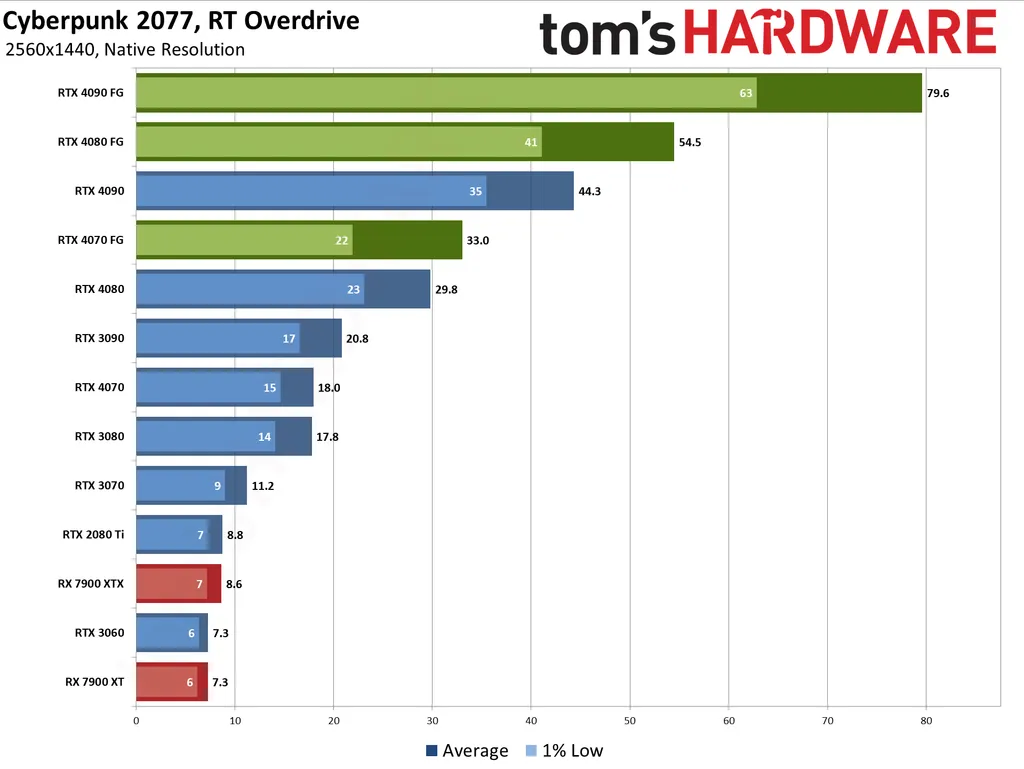

In Cyberpunk with path tracing, the 3090 Ti is 2.57x faster. However, I think AMD got an update that boosted the performance of their cards significantly, though nowhere near enough to cover that gap.

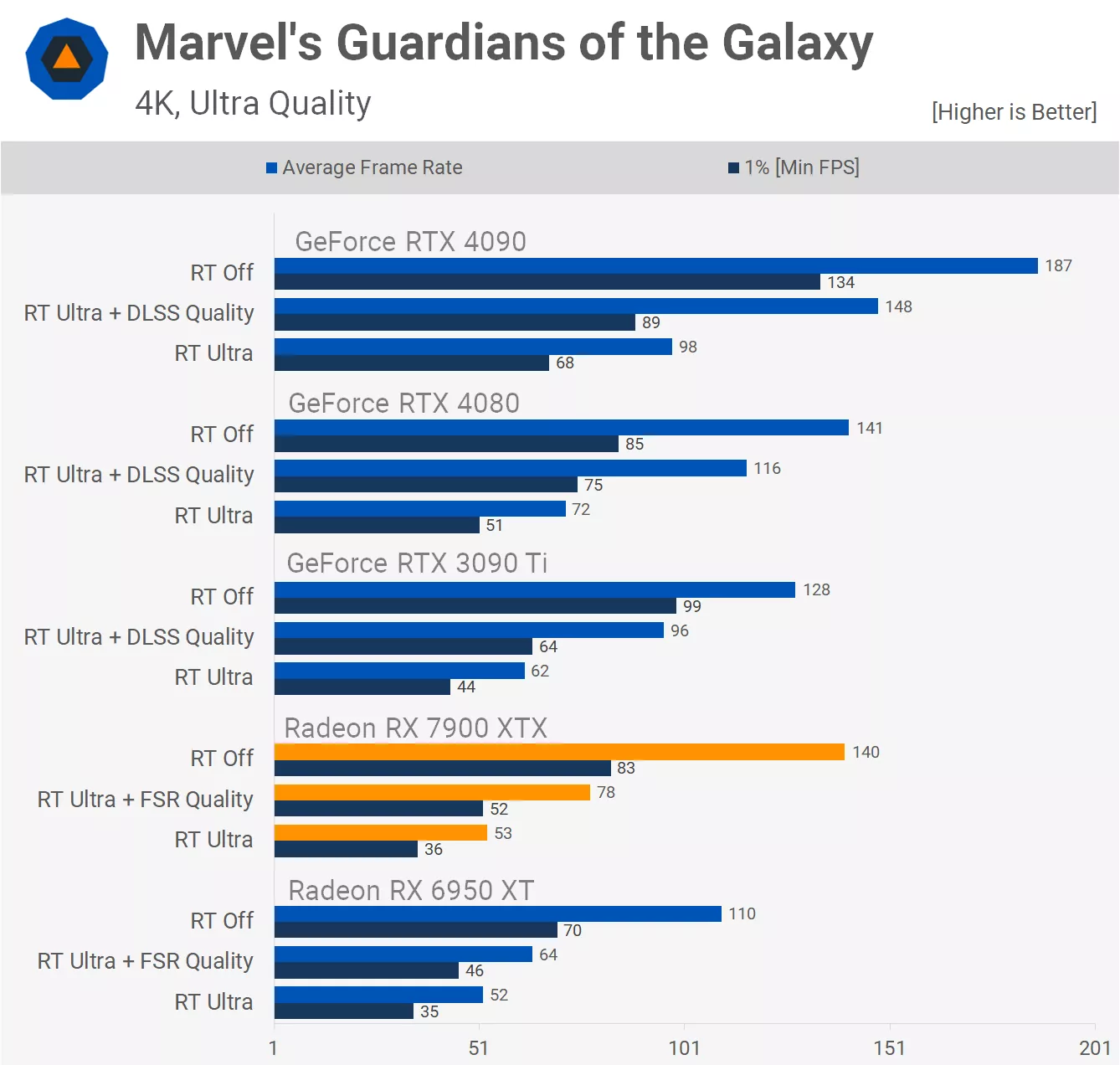

In Guardians of the Galaxy at 4K Ultra, the 3090 Ti is 22% faster.

Woah, would you look at this? The 3090 Ti is 53% faster in ray tracing on average according to my sample of 5 games. But of course, the eagle-eyed among you quickly picked up that this was my deliberate attempt at making the 7900 XTX look bad. In addition, removing Cyberpunk, a clear outlier, from the results would drop the average down to the 20s. The point I'm making is that the average of 8 games does not represent the real world. You want to use ray tracing where it makes a difference, and shit like Far Cry 6 or REVIII ain't that, which explains in part why they're so forgiving on performance (and why they came out tied). Expect to see the 3090 Ti outperform the 7900 XTX by 15% or more in games where ray tracing is worth turning on, despite losing by about that much when it's off. The 3090 Ti's RT performance doesn't suddenly suck because it's equal to the 7900 XTX (it isn't, it's faster), but AMD is at least one generation behind, and in games where ray tracing REALLY hammers the GPUs, AMD cards just crumble.
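If you want to see how much one outlier moves the number, here's a quick sketch using only the five leads I quoted above (19%, 46%, 21%, 2.57x, 22%). The dictionary and the `lead_pct` helper are just names I made up for illustration, and this is a plain arithmetic mean of the ratios, which is an assumption about how you'd average them and not how any review site does it.

```python
from statistics import mean, median

# 3090 Ti frame rate divided by 7900 XTX frame rate with ray tracing on,
# taken from the per-game leads quoted in this post (1.19 = 19% faster).
rt_ratio = {
    "Alan Wake 2": 1.19,
    "Black Myth Wukong": 1.46,
    "Rift Apart": 1.21,
    "Cyberpunk (path tracing)": 2.57,
    "Guardians of the Galaxy": 1.22,
}


def lead_pct(ratios):
    """Arithmetic mean of the ratios, expressed as a percentage lead."""
    return round((mean(ratios) - 1) * 100)


all_games = list(rt_ratio.values())
# Same sample with the path-tracing outlier dropped.
no_outlier = [r for game, r in rt_ratio.items() if not game.startswith("Cyberpunk")]
# The median barely cares about the outlier, unlike the mean.
median_lead = round((median(all_games) - 1) * 100)

print("mean, all 5 games:    ", lead_pct(all_games), "%")
print("mean, minus Cyberpunk:", lead_pct(no_outlier), "%")
print("median, all 5 games:  ", median_lead, "%")
```

Same five games, and the headline number swings by roughly half depending on whether one title is in or out. That's why you look at the per-game spread and not just the average.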

tl;dr don't let averages fool you. Look at the distribution and how ray tracing looks in the games.