Loxus

Member


> So basically RDNA4 = PS5 Pro tech with almost simultaneous launches.

PS5 Pro is RDNA 3.5 WGP; RDNA4 is a different WGP.

> I meant it has what matters from RDNA4: the new ray tracing blocks and AI stuff.

That needs a more detailed analysis comparing PS5 Pro RT (on the RDNA 3.5 WGP) with RDNA4 RT (on the RDNA4 WGP).

I hope they can come through with massive RT performance gains. If this generation has taught us anything, it’s that RT is only really noticeable and worth the cost when you use gobs and gobs of it or just full path tracing. And in games with heavy RT/path tracing AMD gets destroyed.

> Don't RDNA2/3 cards lose more performance than Nvidia cards once you turn on ray tracing? How do you account for the relatively larger loss in performance if not for a comparable inefficiency somewhere?

The same way I account for a 4090 losing more fps than a 3050 when going from a 7800X3D to a Celeron N3450.
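The bottleneck analogy can be illustrated with a deliberately simplified frame-time model in which a frame takes as long as the slower of the CPU and GPU work, `frame_ms = max(cpu_ms, gpu_ms)`. The millisecond figures below are hypothetical placeholders, not measurements of any real 7800X3D, Celeron N3450, 4090 or 3050; the sketch only shows how a shared bottleneck can make the faster card lose a larger share of its frame rate.

```python
def fps(cpu_ms: float, gpu_ms: float) -> float:
    """Frame rate in a simplified model where CPU and GPU work overlap
    and the slower of the two sets the frame time."""
    return 1000.0 / max(cpu_ms, gpu_ms)


def loss_percent(before: float, after: float) -> float:
    """Relative frame-rate loss, in percent of the starting frame rate."""
    return (before - after) / before * 100.0


# Hypothetical per-frame costs in milliseconds (placeholders, not measurements).
FAST_CPU_MS, SLOW_CPU_MS = 4.0, 25.0
GPU_MS = {"fast GPU": 5.0, "slow GPU": 20.0}

for name, gpu_ms in GPU_MS.items():
    before = fps(FAST_CPU_MS, gpu_ms)  # paired with the fast CPU
    after = fps(SLOW_CPU_MS, gpu_ms)   # paired with the slow CPU
    print(f"{name}: {before:.0f} -> {after:.0f} fps "
          f"({loss_percent(before, after):.0f}% lost)")
```

With these placeholder numbers both cards end up at the same CPU-limited 40 fps, so the faster card gives up 80% of its frame rate and the slower one 20%, even though neither GPU changed.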

> I'll wait to see what AMD does with this. If they manage to up RT perf to somewhat competitive levels

The 7900 GRE is about 10% behind the 4070 and about 25% behind the 4070 Super in TechPowerUp (TPU) benchmarks.
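Two "X% behind" figures measured against different cards can be chained to estimate the gap between those two cards. A minimal sketch, assuming "10% behind the 4070" means the 7900 GRE delivers 90% of the 4070's frame rate (and likewise 75% of the 4070 Super's); TechPowerUp's relative-performance charts can also be read with the other card as the 100% baseline, which would give a slightly different answer.

```python
def implied_ratio(deficit_vs_a: float, deficit_vs_b: float) -> float:
    """Speed of card B relative to card A, given that a third card C runs at
    (1 - deficit_vs_a) of A's frame rate and (1 - deficit_vs_b) of B's."""
    # C = A * (1 - deficit_vs_a) = B * (1 - deficit_vs_b)
    # => B / A = (1 - deficit_vs_a) / (1 - deficit_vs_b)
    return (1.0 - deficit_vs_a) / (1.0 - deficit_vs_b)


# 7900 GRE: ~10% behind the 4070 (card A), ~25% behind the 4070 Super (card B).
ratio = implied_ratio(0.10, 0.25)
print(f"4070 Super is roughly {ratio:.2f}x a 4070 in those benchmarks")
```

Under that reading, the two quoted figures imply the 4070 Super is about 20% faster than the 4070 in the same test set.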

> The 7900 GRE is about 10% behind the 4070 and about 25% behind the 4070 Super in TPU benchmarks.

If the game is built to take advantage of all the RT performance-saving features (like OMM) built into RTX 40-series cards, the RT performance gap compared to RDNA3 cards is massive.

You can always find games where the gap is bigger, or where AMD wins at RT.

This is normally 'countered' by inventing new metrics to judge "RT efficiency"...

> If the game is built to take advantage of all the RT performance saving features (like OMM) built into the RTX 40-series card

It is an elegant way to refer to a green-sponsored game.

www.dsogaming.com


We are also partnering deeply with our close friends at Activision to deliver the absolute best experience on Call of Duty: Black Ops 6, with game optimizations and integration of FSR 3.1. We're also working very hard to enable the next generation ML-Based FSR on Call of Duty: Black Ops 6.

> It is an elegant way to refer to a green-sponsored game.

If it were an AMD-sponsored title, Black Myth: Wukong would not even use path tracing, because RDNA3 cards don't have the necessary features to run extremely demanding PT at a reasonable frame rate. Even the developer made a video about that. Read about "opacity micro maps" (OMM), built into the RTX 40 series. This feature alone can speed up RT performance by up to 10x when there's a lot of vegetation in the scene, which is why even RTX 30 series cards perform much worse than the RTX 40 series. RDNA3 also does not have this feature, and that's the reason why we see such a drastic performance difference in this game.
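The idea behind opacity micromaps can be sketched conceptually: an alpha-tested triangle (a leaf, say) is subdivided into micro-triangles, and each one is pre-classified as fully opaque, fully transparent, or unknown, so that only the unknown ones still need the any-hit shader that samples the alpha texture during traversal. The Python below is a hypothetical model of that bookkeeping, loosely following the four states of Vulkan's VK_EXT_opacity_micromap; it is not the Vulkan or DXR API, the 50% threshold between the two unknown states is an assumed baking rule, and it makes no attempt to reproduce the "up to 10x" figure.

```python
from enum import Enum, auto


class OmmState(Enum):
    """Per-micro-triangle states, loosely modeled on a 4-state opacity micromap."""
    TRANSPARENT = auto()
    OPAQUE = auto()
    UNKNOWN_TRANSPARENT = auto()
    UNKNOWN_OPAQUE = auto()


def classify(coverage: float) -> OmmState:
    """Hypothetical baking rule. `coverage` is the fraction (0.0-1.0) of the
    micro-triangle's area whose texels pass the alpha test."""
    if coverage <= 0.0:
        return OmmState.TRANSPARENT
    if coverage >= 1.0:
        return OmmState.OPAQUE
    # Partially covered: traversal cannot decide, so defer to the shader.
    if coverage >= 0.5:
        return OmmState.UNKNOWN_OPAQUE
    return OmmState.UNKNOWN_TRANSPARENT


def needs_any_hit(state: OmmState) -> bool:
    """Only 'unknown' micro-triangles fall back to the any-hit shader;
    opaque and transparent ones are resolved during traversal."""
    return state in (OmmState.UNKNOWN_TRANSPARENT, OmmState.UNKNOWN_OPAQUE)


# Hypothetical alpha coverage for the micro-triangles of one leaf quad.
coverages = [1.0, 1.0, 0.0, 0.0, 0.0, 0.3, 0.7, 1.0]
states = [classify(c) for c in coverages]
shader_calls = sum(needs_any_hit(s) for s in states)
print(f"any-hit shader invoked for {shader_calls} of {len(states)} micro-triangles")
```

Without a micromap, every candidate hit on the alpha-tested triangles would invoke the any-hit shader; in this toy example only 2 of the 8 micro-triangles still do.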

A 4090 running something at 41 fps at fake 4K is, possibly, a sign of particularly awesome coolness which I am too old to grasp.

Things are mighty impressive even without "hardwah RT" it seems:

Black Myth: Wukong - Ray Tracing & DLSS 3 Benchmarks

In this article, we're going to share our impressions, comparison screenshots and benchmarks for its Ray Tracing effects and NVIDIA DLSS 3.

www.dsogaming.com

18 fps without upscaling... was it developed for the 5000 series?

Also, the bazinga-efficiency-metric seems to be pretty bad, going from 38 to 18 fps, right?
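Percentages and frame times describe the 38 to 18 fps drop differently. A small sketch using only the two numbers quoted in the post (which exact settings and resolution they belong to is not restated here):

```python
def drop_percent(fps_before: float, fps_after: float) -> float:
    """Share of the original frame rate that is lost, in percent."""
    return (fps_before - fps_after) / fps_before * 100.0


def added_frame_time_ms(fps_before: float, fps_after: float) -> float:
    """Extra milliseconds spent on every frame after the change."""
    return 1000.0 / fps_after - 1000.0 / fps_before


print(f"{drop_percent(38, 18):.1f}% fewer frames per second")  # 52.6%
print(f"{added_frame_time_ms(38, 18):.1f} ms more per frame")  # 29.2 ms
```

Frame time is additive, so it is often the easier quantity to compare when the same feature is measured at different baseline frame rates.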

Any particular reason you've picked up this game as an example?

> BTW, this is from the new video from AMD:
>
> FSR with AI might be coming sooner than we thought.

Will AI FSR require the latest hardware?


> "opacity micro maps" (OMM), built into the RTX 40 series

Thanks. I got to a discussion titled "RT still not worth it", which is probably not what you meant.

> Black Myth: Wukong is pushing RT technology to the max. The game is very demanding at maxed-out settings with PT

I'd say a game that does 38 frames per second without any "hardwahr RT" enabled is simply pretty demanding.

> the image quality still looks like 4K to my eyes

Might be compression artefacts, but the face/hair look quite blurred.

> Thanks. I got to a discussion titled "RT still not worth it", which is probably not what you meant.

You need to enable English subtitles. The ray tracing and OMM segment starts at 7:23.

Could you link to the developer talking about AMD missing the needed bits to make a performant game?


> Lol...RT is so good now because it was so bad before.

I mean, it sounds funny, but it's true to some extent.

> Lol...RT is so good now because it was so bad before.

Path tracing, which is what Black Myth: Wukong uses, is a different galaxy from previous attempts at ray tracing.

N48 top

> As Nvidia hardware has gotten better at the RT calculations, it's no longer necessary to use the old hybrid system where most lighting is still raster and a few RT effects get pasted on top

80%+ of the gaming market is hardware that's not going to run decent RT (or rather its PT subcategory) anytime soon. And even with new hardware, the cross-development phases have gotten so much longer due to budget reasons alone that hybrid systems in mass-market products probably aren't going anywhere till at least the end of the decade.

> It was rumored to be 7900GRE-XT and now on par with 7900XTX in raster?

It was always the 7900 XT, never the GRE, and now they're cranking up the clocks.

So, based on previous AMD rumours, subtract 20% and you'll have the final performance.


> Seems ref 8800

Looks nice. My reference 7900 XT is fantastic both in looks and to the touch.

> From one of AMD's most recent driver builds.
>
> Not yet available to the public.

I hope FSR4 comes to the 7000 series with most features intact.

> Well, based on the PS5 Pro's supposed RDNA4 capabilities, the RT boost is nothing to write home about..

Yeah, because you can compare an APU with a full-sized PC GPU.

> AMD will adopt a naming scheme similar to NVIDIA's RTX x0x0 tiers.

More like back to the old naming scheme.

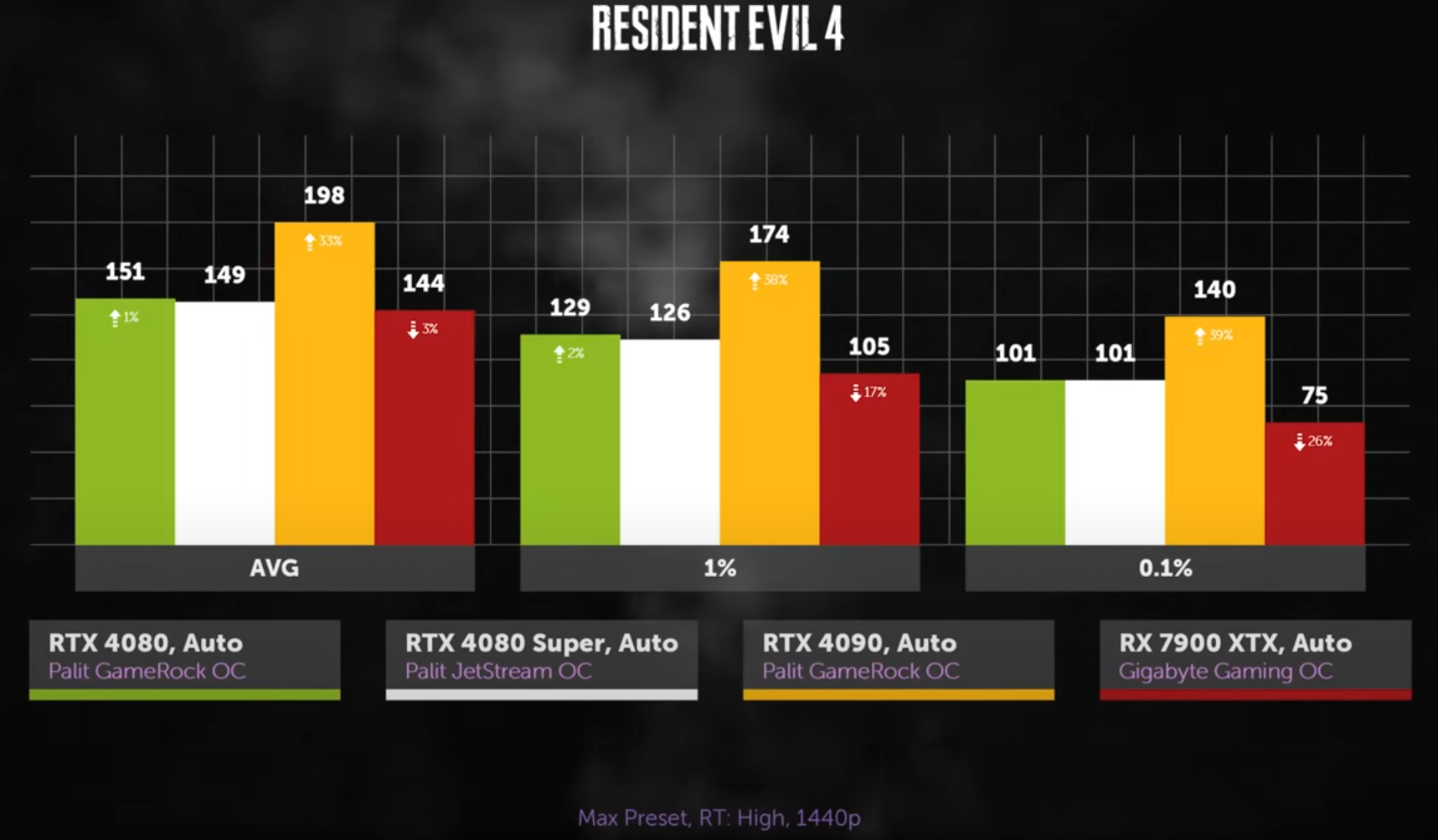

> Lol...RT is so good now because it was so bad before.

Except 7900 XTX + 50%-ish is where the 4090 is.
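Percentage gaps are not symmetric, which matters when these numbers get traded back and forth. Taking the "+50%-ish" figure at face value (it is the poster's rough estimate, not a measured value), the same gap expressed from the 4090's side is smaller:

```python
def behind_percent(ahead_percent: float) -> float:
    """If card B is `ahead_percent` faster than card A, return how far
    A is behind B, as a percentage of B's performance."""
    ratio = 1.0 + ahead_percent / 100.0  # B / A
    return (1.0 - 1.0 / ratio) * 100.0


# 4090 assumed to be 1.5x a 7900 XTX -> the XTX is about a third behind.
print(f"{behind_percent(50):.1f}% behind")  # 33.3% behind
```

So "4090 is 50% faster" and "7900 XTX is 33% slower" describe the same assumed gap.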

> Except 7900XTX + 50%-ish is where 4090 is.
>
> And 7900XTX RT is about 4080 levels.
>
> AMD Radeon RX 7900 XTX Review - Disrupting the GeForce RTX 4080
>
> Navi 31 is here! The new $999 Radeon RX 7900 XTX in this review is AMD's new flagship card based on the wonderful chiplet technology that made the Ryzen Effect possible. In our testing we can confirm that the new RX 7900 XTX is indeed faster than the GeForce RTX 4080, but only with RT disabled.
>
> www.techpowerup.com

This got debunked because you posted the same bullshit last time. We all know the 7900 XTX is not equal to the 4080 in RT, so why do you post this thinking we don't know how differentials and distribution work?

AMD reportedly preparing Radeon RX 9070 XT and RX 9070 GPUs, mobile variants also identified

Not the Radeon 8000 series, but the 9000 series. Following the disclosure of the Radeon 9070 series by a Chiphell editor, other sources have come forward with information on the upcoming RDNA4 and RDNA3/3.5 updates.

Starting with the Radeon 9000 series, All The Watts has revealed a list of upcoming desktop and mobile SKUs, including four product tiers. Notably absent from the list are the Radeon 9080 models, with the highest SKU mentioned being the Radeon RX 9070 XT. AMD will also have the RX 9070 non-XT, as well as 9070M XT, 9070M, and 9070S variants for laptops.

AMD Radeon 9000 Series SKUs

- AMD Radeon™ RX 9070 Series Graphics

- AMD Radeon™ RX 9060 Series Graphics

- AMD Radeon™ RX 9050 Series Graphics

- AMD Radeon™ RX 9040 Series Graphics

- AMD Radeon™ RX 9070 XT Graphics

- AMD Radeon™ RX 9070M XT Graphics

- AMD Radeon™ RX 9070 Graphics

- AMD Radeon™ RX 9070M Graphics

- AMD Radeon™ RX 9070S Graphics

The leaker also confirms names for three Strix Halo integrated graphics. This high-end APU, set to launch at CES 2025, will have three variants featuring up to 40 RDNA3.5 compute units.

AMD Radeon 8000S Series Integrated SKUs (Strix Halo)

- AMD Radeon™ RX 8060S Graphics

- AMD Radeon™ RX 8050S Graphics

- AMD Radeon™ RX 8040S Graphics

The expected update for RDNA3 desktop and mobile graphics is also mentioned, following rumors of the Radeon 7650 GRE update planned for CES or later. It seems that AMD will be updating more SKUs with xx50 variants. The RDNA3 family is not affected by the change in the naming scheme for Radeon GPUs.

AMD Radeon 7000 Series SKUs

- AMD Radeon™ RX 7750 Series Graphics

- AMD Radeon™ RX 7650 Series Graphics

- AMD Radeon™ RX 7750S Graphics

- AMD Radeon™ RX 7650 GRE Graphics

- AMD Radeon™ RX 7650M XT Graphics

- AMD Radeon™ RX 7650M Graphics

- AMD Radeon™ RX 7650S Graphics

Another leaker, momomo_us, seems to have already spotted the new Radeon 9070 series at one retailer (Grosbill Pro), which is listing the upcoming RDNA4 graphics cards as two SKUs: 9070XT and 9070.

All considered, it appears that the rumors about a potential change in naming are indeed true, and AMD will adopt a naming scheme similar to NVIDIA's RTX x0x0 tiers. And by the way, Grosbill also lists RTX 5090 and RTX 5080, but for now only categories without any products.

AMD reportedly preparing Radeon RX 9070 XT and RX 9070 GPUs, mobile variants also identified - VideoCardz.com

AMD copies NVIDIA naming. Multiple sources now confirm change in AMD naming for next-gen graphics. Not the Radeon 8000 series, but the 9000 series. Following the disclosure of the Radeon 9070 series by a Chiphell editor, other sources have come forward with information on the upcoming RDNA4 and...

videocardz.com


> TimeSpy 9070xt

Where does that Time Spy score place the card relative to the 4080/7900 XTX?


> Where does that Time Spy score place the card relative to the 4080/7900 XTX?

Let's wait for the final score with the final driver. Also, that is a reference score.

AMD and retarded name changes...where will they go from 9xxx? 10xxx?

Equal in RT in what game, Dirt 5?

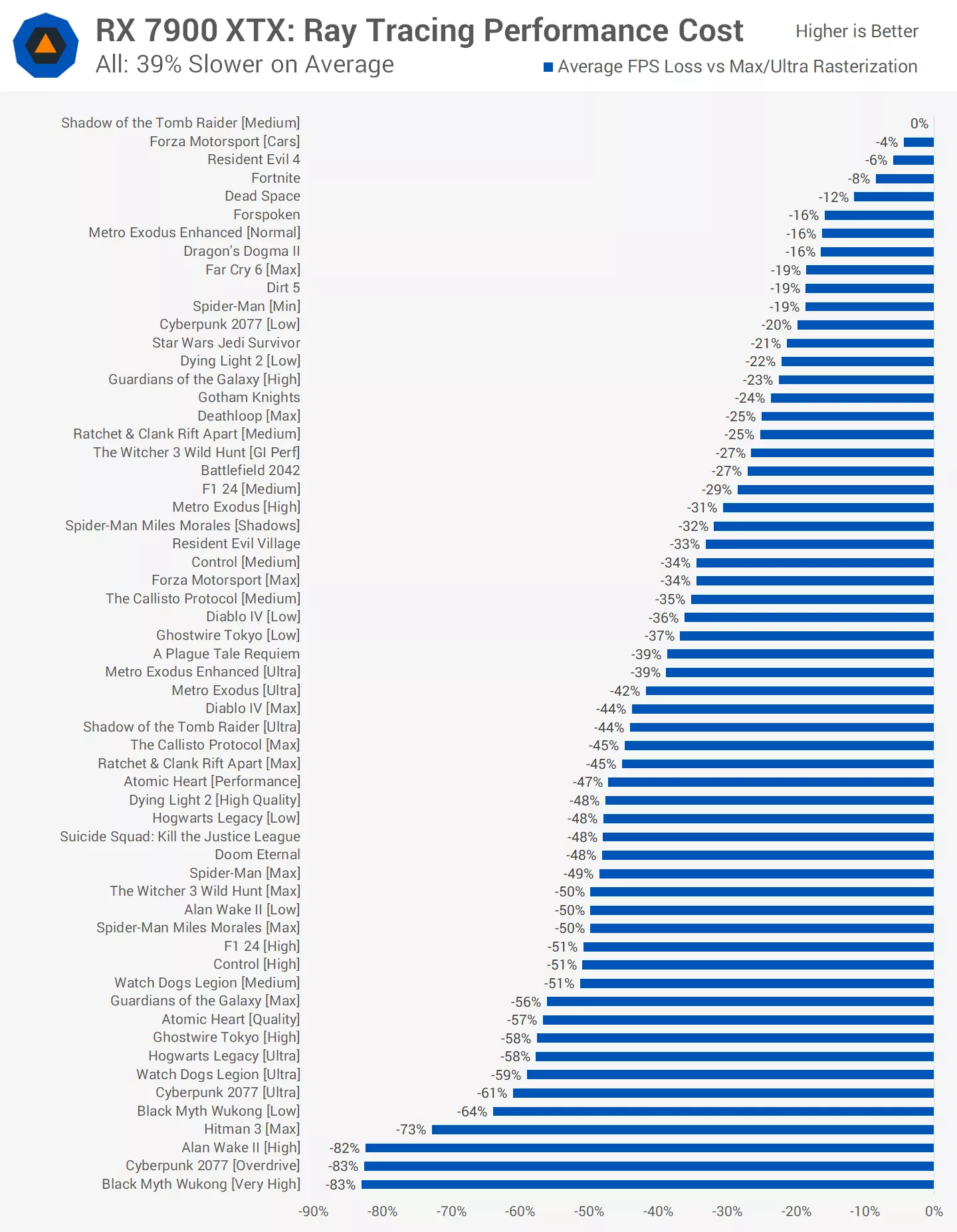

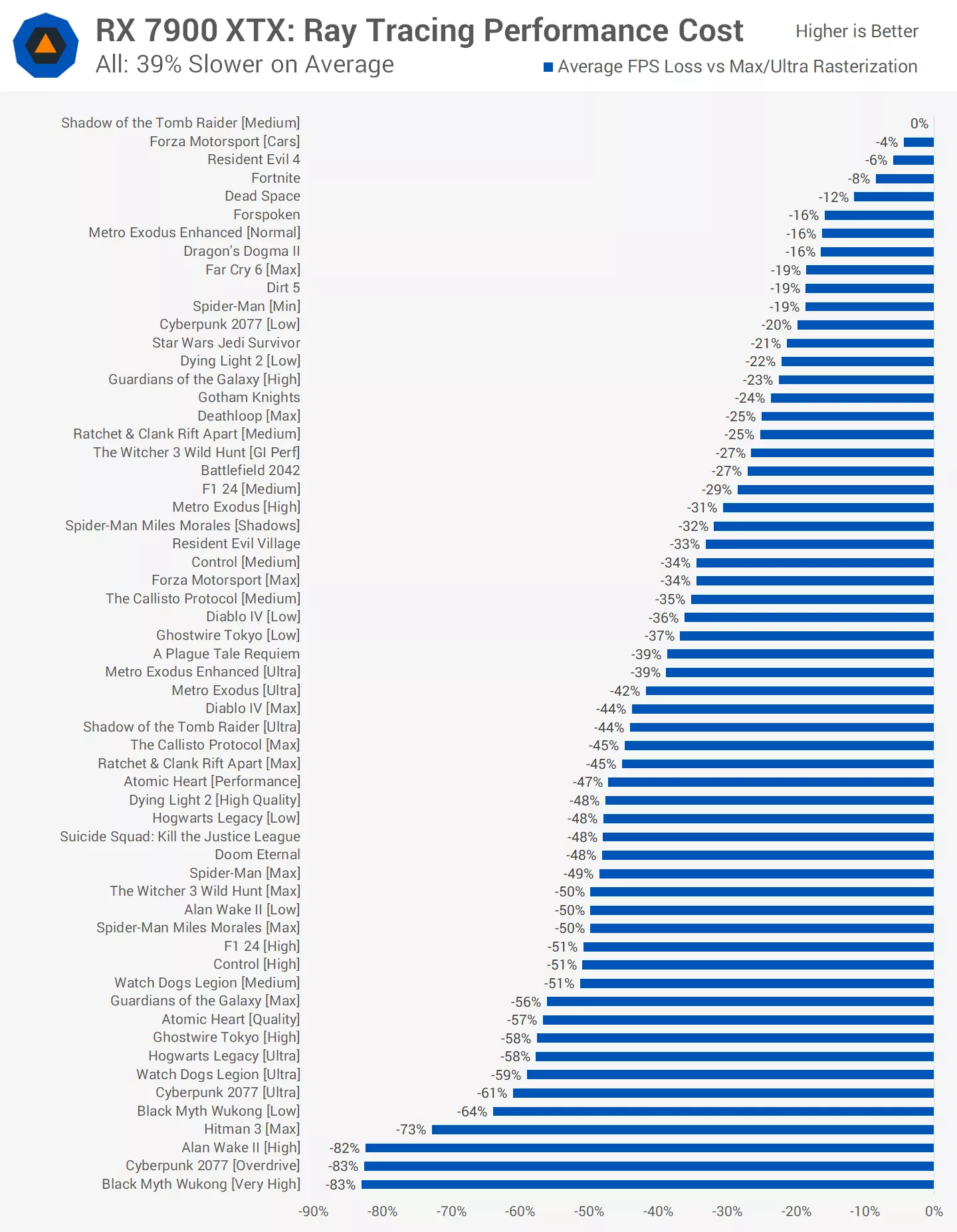

Across the 59 game configurations, the average performance impact from enabling ray tracing was 39%. This includes some enormous performance losses in path-traced configurations; without those outliers, the performance impact was more like 35%.

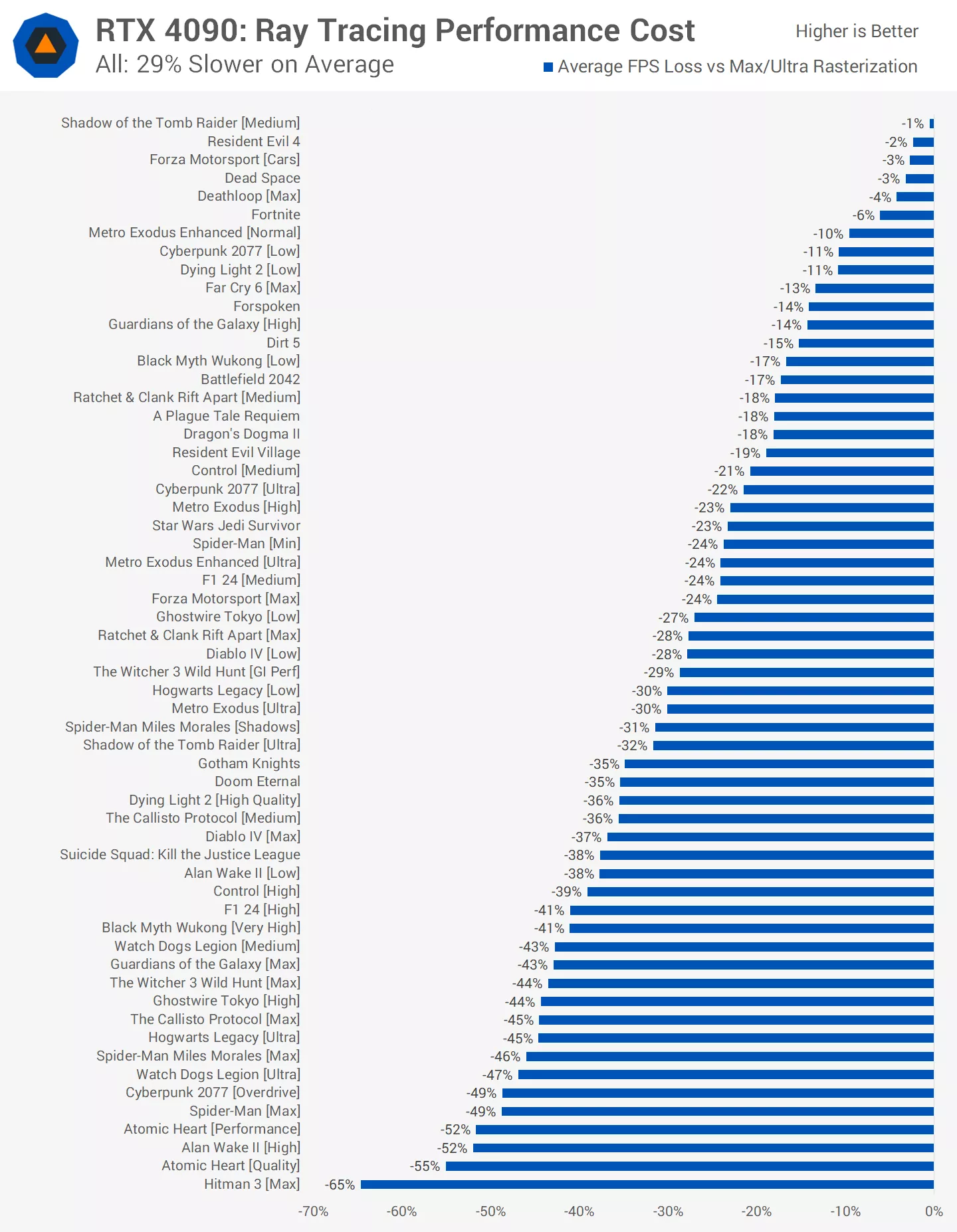

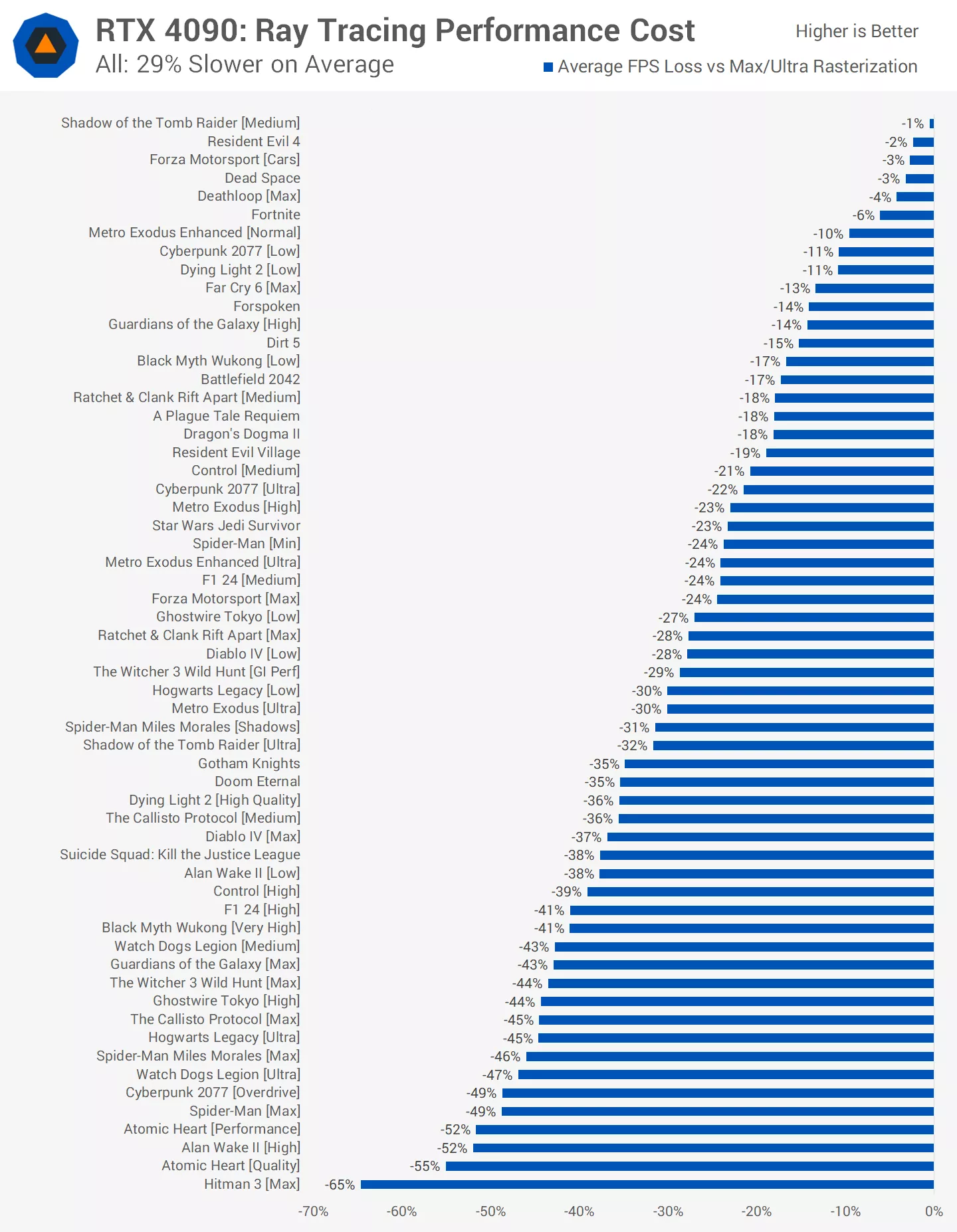

Across all 59 ray tracing configurations, on average, compared to running on ultra or maximum rasterized settings at 4K, enabling ray tracing will cause a 29% reduction in frame rate.
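Both TechSpot figures are averages over many game configurations, and how such an average is computed changes the result. A minimal sketch, assuming the impact is the mean of per-configuration percentage drops (the articles' exact method is not reproduced here), with two hypothetical games to show that this differs from the drop in average frame rate:

```python
from statistics import mean


def mean_of_drops(results: list[tuple[float, float]]) -> float:
    """Average of per-configuration losses; each entry is (raster_fps, rt_fps)."""
    return mean((r - t) / r for r, t in results) * 100.0


def drop_of_means(results: list[tuple[float, float]]) -> float:
    """Loss computed from the average frame rates instead."""
    raster = mean(r for r, _ in results)
    rt = mean(t for _, t in results)
    return (raster - rt) / raster * 100.0


# Hypothetical data: (fps with RT off, fps with RT on).
games = [(100.0, 70.0), (60.0, 30.0)]
print(f"mean of per-game drops: {mean_of_drops(games):.1f}%")  # 40.0%
print(f"drop of mean fps:       {drop_of_means(games):.1f}%")  # 37.5%
```

Excluding outliers, as the quoted text does for path-traced configurations, then amounts to filtering `results` before averaging.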

www.techspot.com


> RT in what game

The link I've shared not only shows the average RT performance impact at 3 resolutions, but lists the games too.

4090

Is Ray Tracing Worth the FPS Hit? 36 Game Performance Investigation

Ray tracing can enhance visuals, but it often comes at a high performance cost. We analyze its impact across 36 games comparing the performance of Nvidia and...

www.techspot.com
