clarky

Gold Member

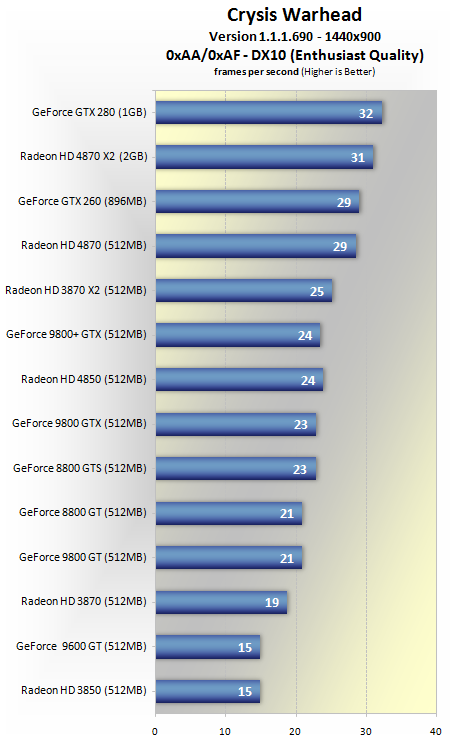

It's with the new frame gen.

People arguing over the 29fps, while I'm sat here thinking: since when does DLSS, even on performance mode, get you near 10 times the performance?!

29fps to 240fps is insane. I guess maybe they are using framegen too?