HeisenbergFX4

Member

G-SYNC Displays Dazzle At CES 2024: G-SYNC Pulsar Tech Unveiled, G-SYNC Comes To GeForce NOW, Plus 24 New Models

By Guillermo Siman & Andrew Burnes on January 08, 2024

In the ever-evolving realm of gaming technology, NVIDIA has consistently pioneered technologies that have redefined experiences for users.

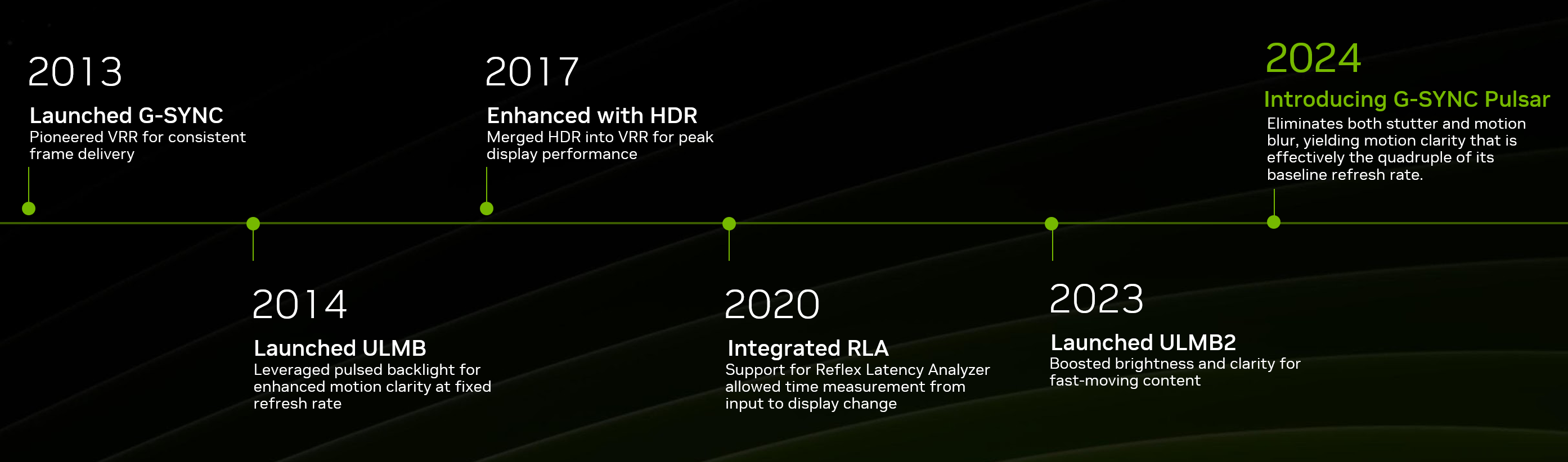

In 2013, the introduction of G-SYNC revolutionized display technology, and G-SYNC has continued bringing new advancements in displays ever since.

Today, you can buy hundreds of G-SYNC, G-SYNC ULTIMATE, and G-SYNC Compatible gaming monitors, displays, and TVs, ensuring a fantastically smooth, stutter-free experience when gaming on GeForce graphics cards.

At CES 2024, our partners at Alienware, AOC, ASUS, Dough, IO Data, LG, Philips, Thermaltake, and ViewSonic announced 24 new models that give further choice to consumers. Among the new models are the Alienware AW3225QF, one of the world’s first 240Hz 4K OLED gaming monitors; the Philips Evnia 49M2C8900, one of the world’s first ultrawide DQHD 240Hz OLED gaming monitors; and LG’s new 2024 144Hz OLED and wireless TV lineup, which is available in a multitude of screen sizes, from 48 inches to a whopping 97 inches.

At CES, we also announced two new G-SYNC innovations: G-SYNC on GeForce NOW, and G-SYNC Pulsar technology.

G-SYNC technology comes to the cloud with GeForce NOW, vastly improving the visual fidelity of streaming to displays that support G-SYNC. Members will see minimized stutter and latency, for an experience nearly indistinguishable from local gaming.

G-SYNC Pulsar is the next evolution of Variable Refresh Rate (VRR) technology, not only delivering a stutter-free experience and buttery smooth motion, but also a new gold standard for visual clarity and fidelity through the invention of variable frequency strobing. This boosts effective motion clarity to over 1000Hz on the debut ASUS ROG Swift PG27 Series G-SYNC gaming monitor, launching later this year.

With G-SYNC Pulsar, the clarity and visibility of content in motion are significantly improved, enabling you to track and shoot targets with increased precision.

No longer will users have to choose between smooth variable refresh and the improved motion clarity of Ultra Low Motion Blur (ULMB). Our new G-SYNC Pulsar technology delivers all the benefits of both, working in harmony to deliver the definitive gaming monitor experience.

ASUS ROG Swift PG27 Series G-SYNC gaming monitors with Pulsar technology launch later this year.

Read on for further details about our new CES 2024 G-SYNC announcements.

The Next Generation: Introducing G-SYNC Pulsar Technology

NVIDIA’s journey in display technology has been marked by relentless innovation. The launch of G-SYNC in 2013 eradicated stutter by harmonizing the monitor’s refresh rate with the GPU’s output. This was just the beginning.

Following G-SYNC, we introduced Variable Overdrive, enhancing motion clarity by intelligently adjusting pixel response times. Then we incorporated HDR, bringing richer, more vibrant colors and deeper contrast, transforming the visual experience. Additionally, the expansion into broader color gamuts allowed for more lifelike and diverse color representation, while the introduction of wider refresh rate ranges further refined the smoothness of on-screen action, catering to both high-speed gaming and cinematic quality, and everything in between.

The Breakthrough Innovation Of G-SYNC Pulsar

Traditional VRR technologies dynamically adjust the display’s refresh rate to match the GPU’s frame rate, effectively eliminating stutter.

To evolve VRR further, the aspiration has always been to unify it with advanced strobing techniques that eliminate display motion blur (not to be confused with in-game motion blur visual effects). Display motion blur is caused by both slow LCD transitions and the persistence of an image on the retina as our eyes track movement on-screen. Slow pixel transitions can’t keep up with fast-moving objects, leading to a smear effect. These slow transitions can be eliminated by Variable Overdrive, but motion blur caused by image persistence on the retina can only be removed by strobing the backlight.

However, strobing the backlight at a frequency that is not fixed causes serious flicker—which, until now, had prevented effective use of the technique in VRR displays.

For over a decade, our engineers have pursued the challenge of marrying the fluidity of VRR timing with the precise timing needed for effective advanced strobing.
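The persistence-based blur described above can be put into rough numbers. The following is a minimal sketch, assuming the common rule of thumb that perceived blur width is roughly eye-tracking speed multiplied by the time each frame stays lit. The function name, the panning speed, and the quarter-period strobe are illustrative assumptions, not figures from NVIDIA.

```python
def blur_width_px(speed_px_per_s: float, persistence_ms: float) -> float:
    """Approximate blur width in pixels for an eye-tracked object.

    Assumes the rule of thumb: blur = tracking speed x time the image stays lit.
    """
    return speed_px_per_s * persistence_ms / 1000.0


# Sample-and-hold at 360Hz: each frame stays lit for the full refresh period.
hold_ms = 1000.0 / 360.0
# Hypothetical strobed backlight lit for only a quarter of that period.
strobe_ms = hold_ms / 4.0

speed = 1440.0  # illustrative panning speed in pixels per second
print(f"sample-and-hold: {blur_width_px(speed, hold_ms):.1f} px")
print(f"strobed:         {blur_width_px(speed, strobe_ms):.1f} px")
```

Under this simplified model, shortening the time the backlight is lit shrinks the blur proportionally, which is why strobing helps where faster pixel transitions alone cannot.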

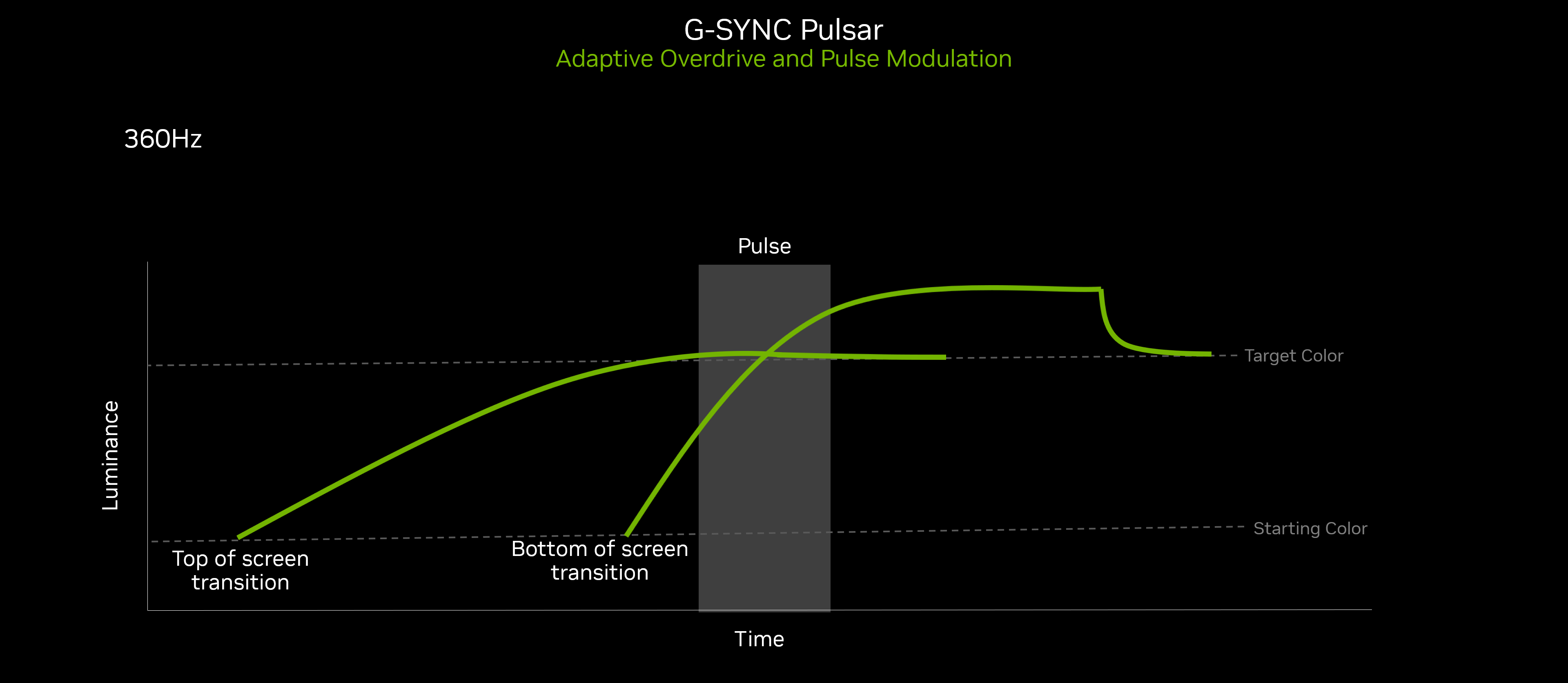

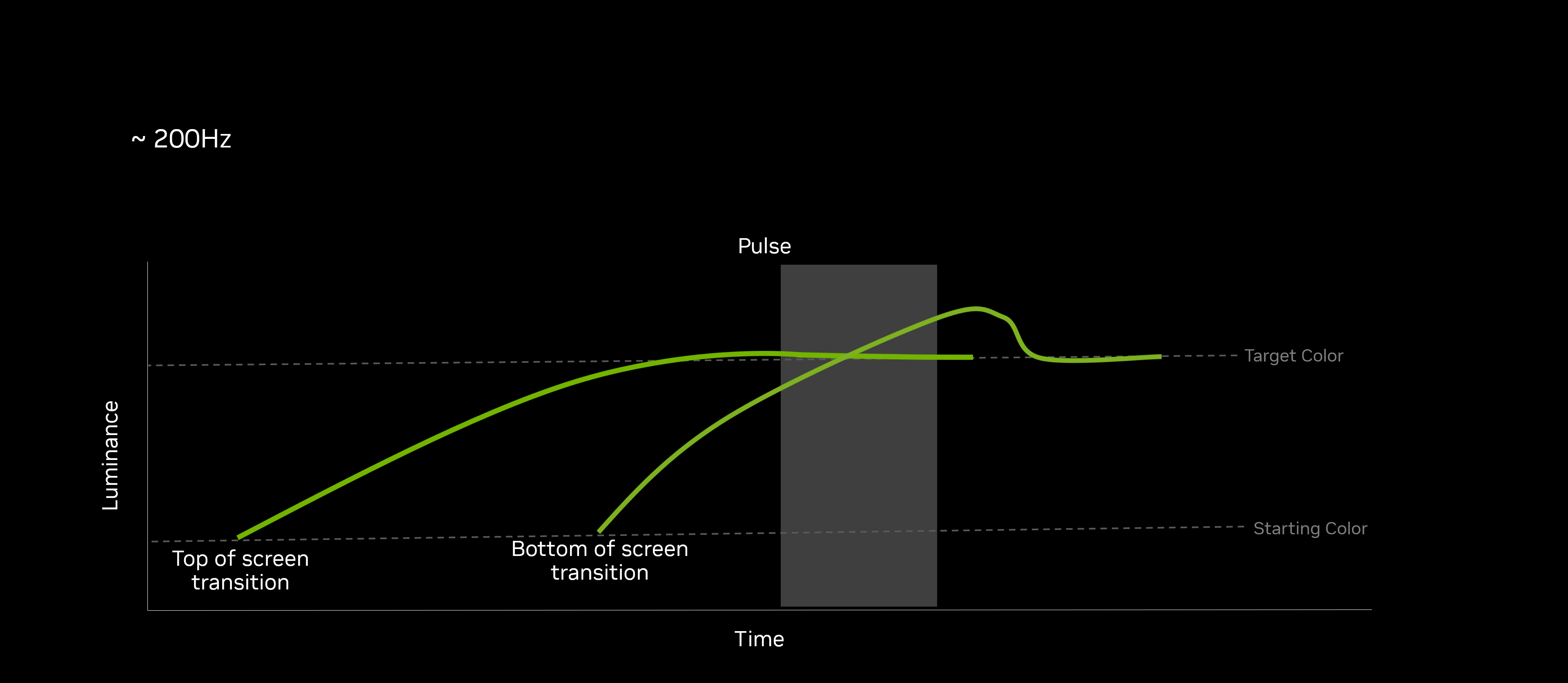

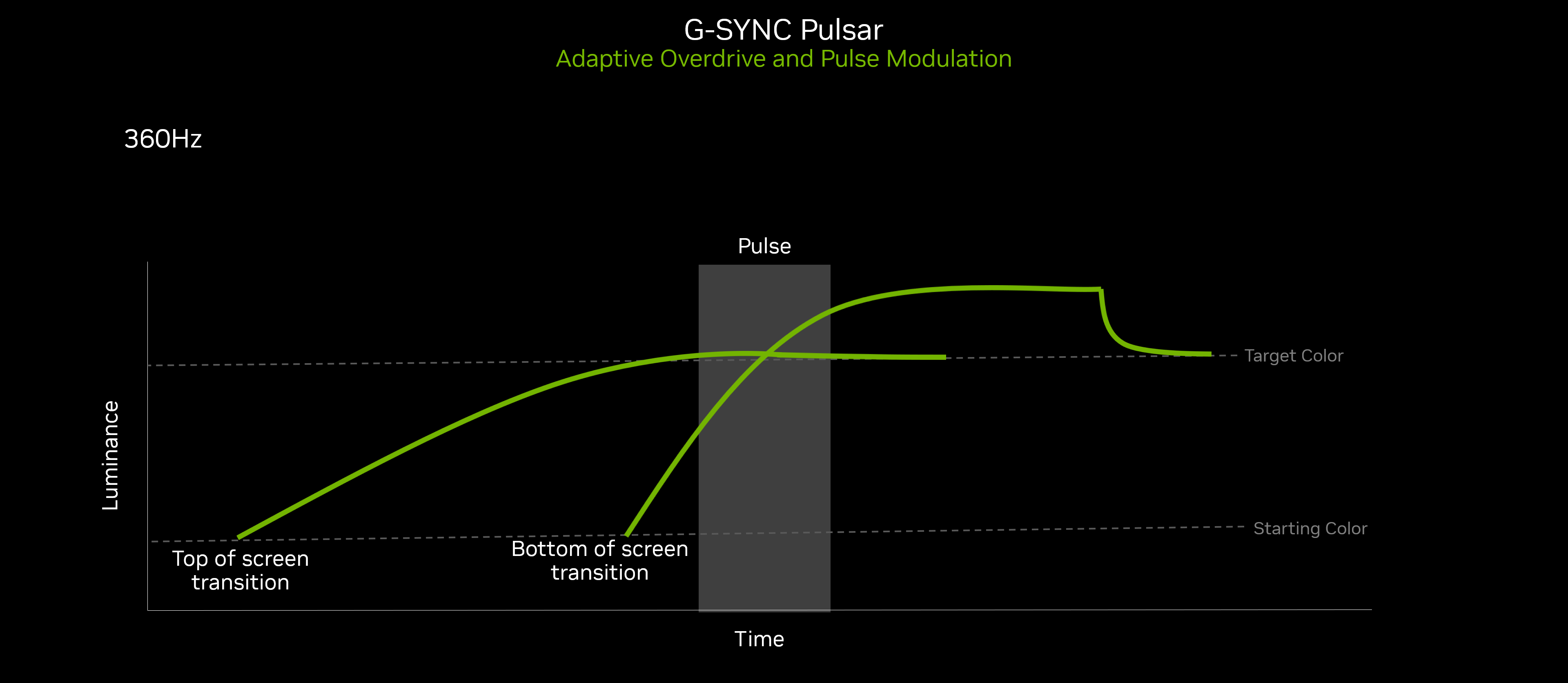

The solution was a novel algorithm that dynamically adjusts strobing patterns to varying render rates. NVIDIA’s new G-SYNC Pulsar technology marks a significant breakthrough by synergizing two pivotal elements: Adaptive Overdrive and Pulse Modulation.

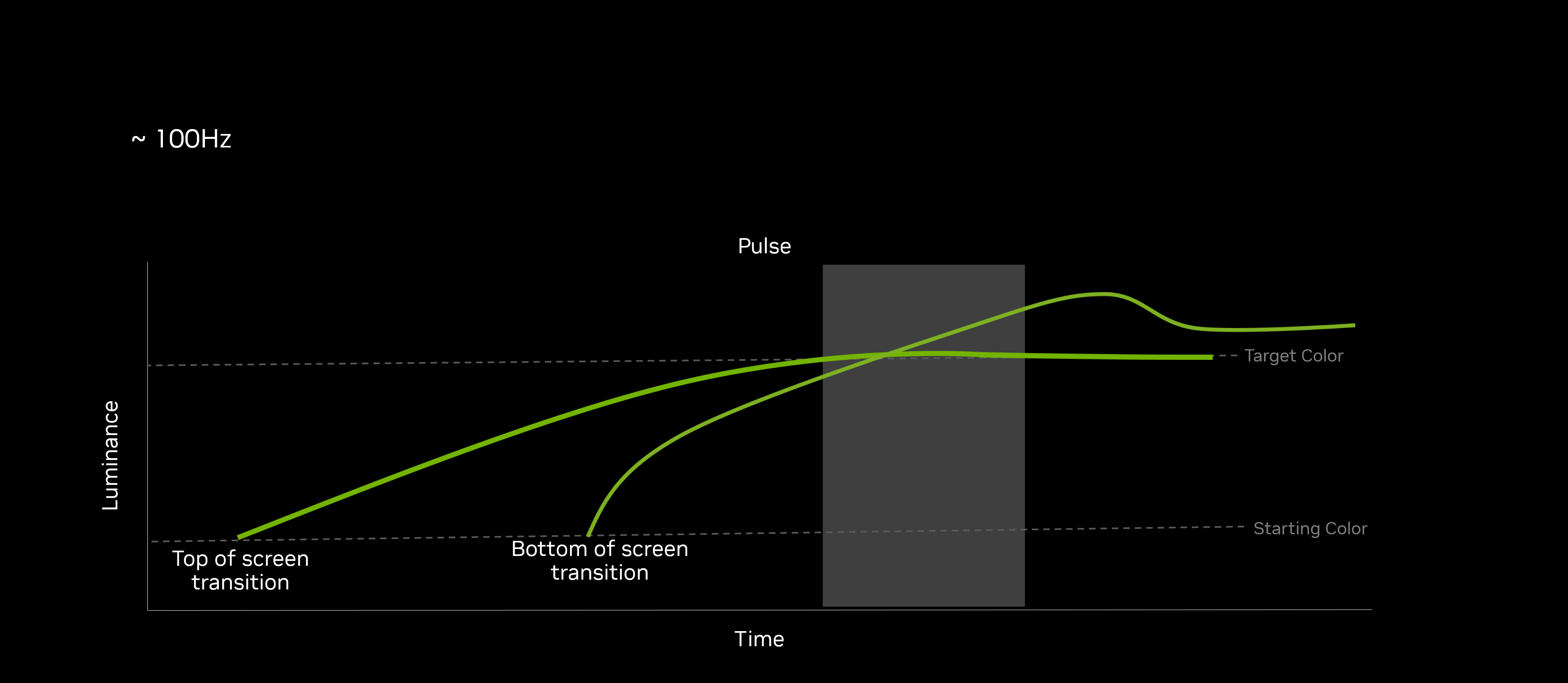

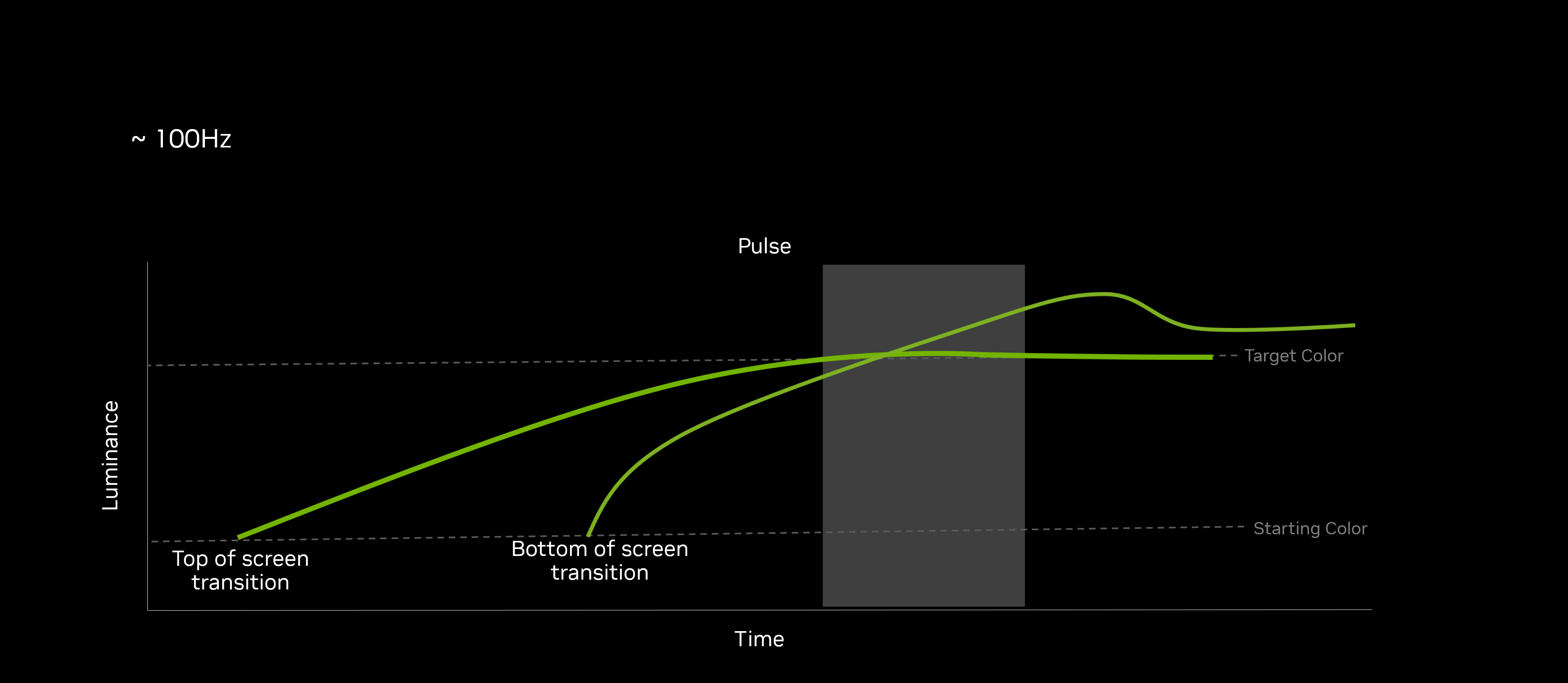

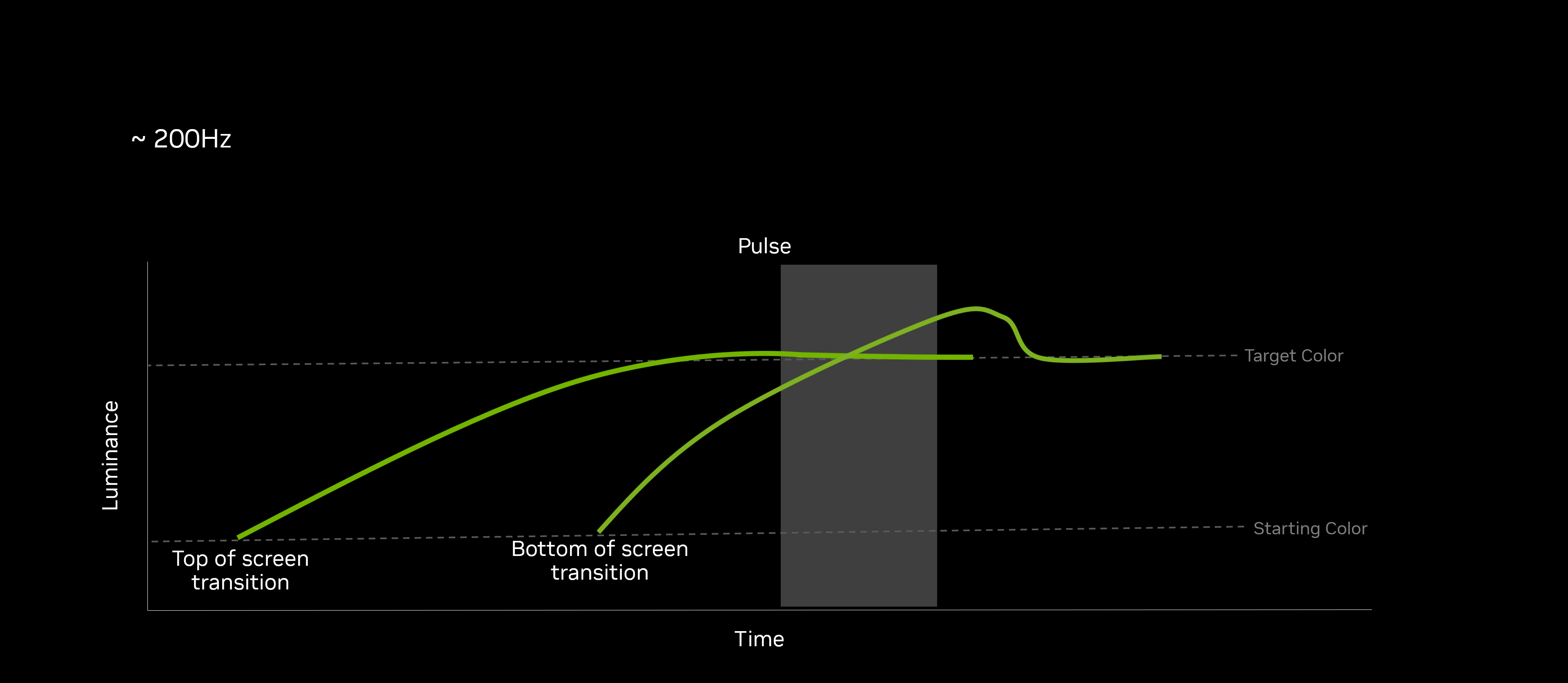

With Adaptive Overdrive, G-SYNC Pulsar dynamically adjusts the rate at which pixels transition from one color to another, a vital technique to reduce motion blur and ghosting. This process is complicated by VRR technology, where the refresh rate fluctuates in tandem with the GPU's output. G-SYNC Pulsar’s solution modulates overdrive based on both screen location and refresh rate—ensuring that clarity and blur reduction are maintained across a spectrum of speeds, and across the entire screen space.

Complementing this, the technology also intelligently controls the pulse's brightness and duration—key to maintaining visual comfort and eliminating flicker. Flickering, often a byproduct of strobing methods used to diminish motion blur, can disrupt the gaming experience and cause viewer discomfort. By adaptively tuning backlight pulses in response to the constantly changing game render rate, G-SYNC Pulsar creates a consistent and comfortable viewing experience, effectively accommodating the display's dynamic nature.
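One way to picture why pulse brightness and duration have to track the render rate: if each frame gets a single backlight pulse, the time-averaged luminance is pulse brightness times pulse width divided by frame time. The sketch below holds that average constant as the frame rate changes. It illustrates the constraint only and is not NVIDIA's algorithm; the function name and all numbers are assumptions.

```python
def pulse_width_ms(frame_time_ms: float, target_nits: float, pulse_nits: float) -> float:
    """Pulse width that keeps time-averaged luminance at target_nits.

    Assumes one rectangular pulse per frame:
        average = pulse_nits * width / frame_time
    """
    if pulse_nits < target_nits:
        raise ValueError("pulse brightness must be at least the target average")
    return frame_time_ms * target_nits / pulse_nits


# Illustrative values: 200 nits average from an 800-nit pulse.
for fps in (360, 240, 120):
    frame_ms = 1000.0 / fps
    width = pulse_width_ms(frame_ms, target_nits=200.0, pulse_nits=800.0)
    print(f"{fps:>3} fps: frame {frame_ms:.2f} ms, pulse {width:.2f} ms")
```

With pulse brightness held fixed, the pulse lengthens as the frame rate drops. A real design could instead trade pulse width against brightness, which is consistent with the text's mention of controlling both.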

Merging these two adaptive strategies, G-SYNC Pulsar overcomes the challenges previously associated with combining VRR and backlight strobing. Prior attempts have often stumbled, leading to flickering and diminished motion clarity. G-SYNC Pulsar, however, keeps both overdrive and the backlight pulse synchronized with the screen's refresh cycle.

This represents a leap beyond incremental updates or a combination of existing technologies: it is a radical rethinking of display technology—necessitating the development of new panel technology, and representing a fundamental reengineering at both hardware and software levels.

The resulting gaming experience is transformative: each frame is delivered with both stutter-free smoothness and effective motion clarity of four times the baseline refresh rate, enabling a truly immersive and uninterrupted visual journey for gamers, even in the most intense and fast-paced games.
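As a quick check on how the "four times baseline" figure relates to the "over 1000Hz" claim made earlier, here is a small arithmetic sketch. It takes the multiplier at face value and uses the 360Hz refresh rate this article lists for the Pulsar monitor. The function names are illustrative.

```python
def effective_clarity_hz(refresh_hz: float, multiplier: float = 4.0) -> float:
    """Effective motion clarity, taking the text's 'four times baseline' at face value."""
    return refresh_hz * multiplier


def equivalent_frame_time_ms(clarity_hz: float) -> float:
    """Frame time of a hypothetical sample-and-hold display at that rate."""
    return 1000.0 / clarity_hz


baseline = 360.0  # refresh rate of the Pulsar monitor cited in this article
clarity = effective_clarity_hz(baseline)
print(f"{baseline:.0f}Hz x 4 = {clarity:.0f}Hz effective")
print(f"equivalent frame time: {equivalent_frame_time_ms(clarity):.3f} ms")
print(f"exceeds 1000Hz: {clarity > 1000.0}")
```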

G-SYNC Pulsar Demo: See The Technology In Action

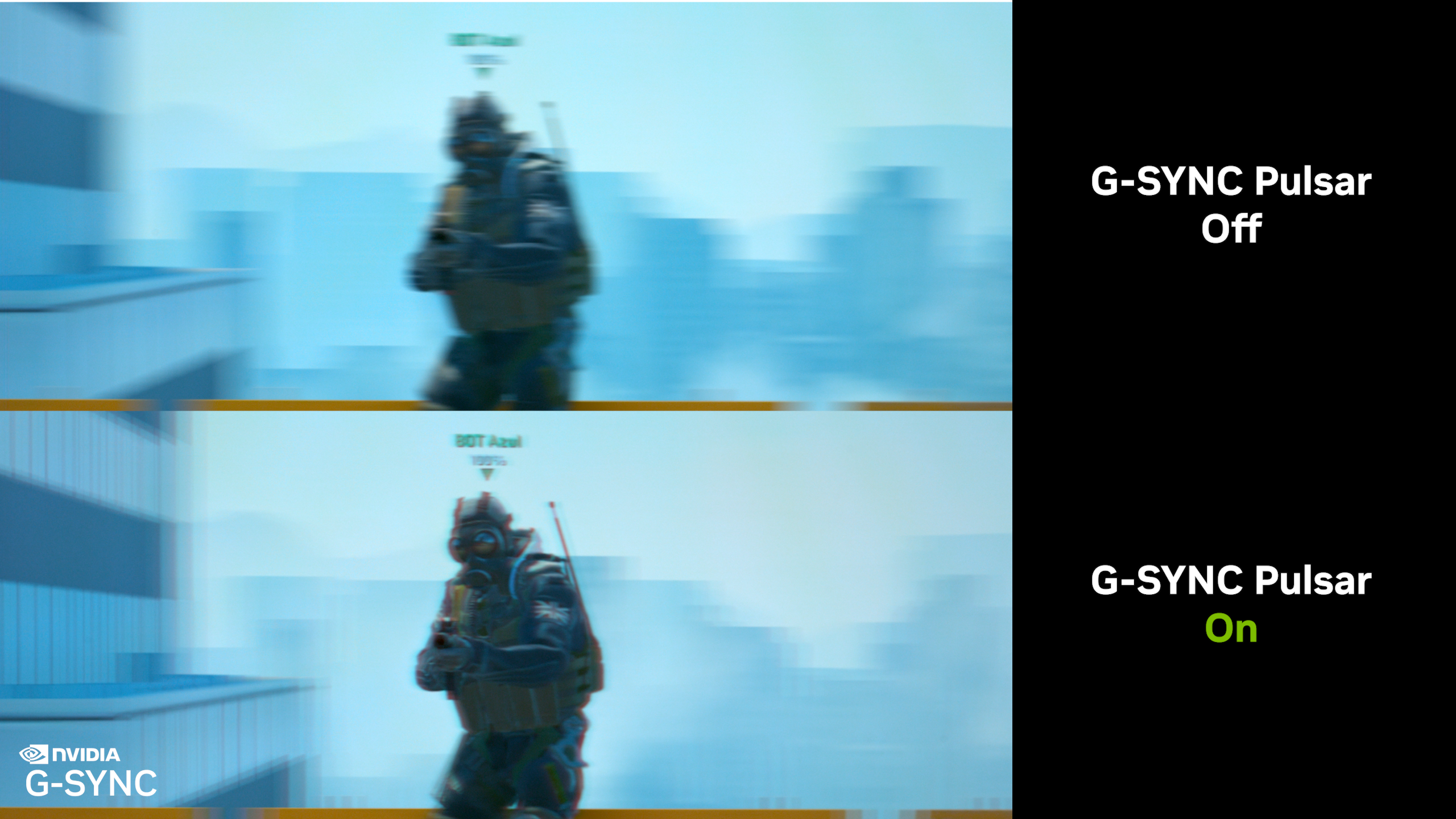

The true impact of G-SYNC Pulsar is unmistakable in action. When enabled, the technology offers starkly smoother scenes and sharper clarity, surpassing the capabilities of earlier monitor technologies that attempted to combine VRR and strobing.

This advancement offers distinct advantages for various gaming genres. In competitive gaming, the elimination of stuttering is crucial, as these distractions can impede performance and affect outcomes. Similarly, enhanced motion clarity can provide a competitive edge, where precise tracking and response to fast-moving elements and friend-foe distinction are paramount. For immersive games, the technology's ability to maintain consistent smoothness and clarity enhances the player's sense of being part of the game world, free from immersion-breaking visual artifacts.

Furthermore, G-SYNC Pulsar simplifies the user experience by eliminating the need to switch between different monitor settings for either VRR or strobing technologies. Whether it's for the high-stakes environment of competitive gaming, or the rich, detailed worlds of immersive titles, G-SYNC Pulsar delivers a superior and convenient visual experience tailored to all facets of gaming.

In the video below, a 1000 FPS high-speed pursuit camera recorded Counter-Strike 2 running identically on a 360Hz G-SYNC monitor with Pulsar technology enabled and then disabled. Played back at 1/24 speed, the reduction of monitor-based motion blur on the G-SYNC Pulsar display is immediately evident. The added clarity improves target tracking and target acquisition, which can help improve hit rate and make users more competitive online.


G-SYNC Technology Comes To GeForce NOW

G-SYNC technology will be coming soon to the cloud with GeForce NOW, raising the bar even higher for high-performance game streaming.

With the introduction of the Ultimate tier, GeForce NOW delivered improved visual graphics in the cloud by varying the stream rate to the client, driving down total latency on Reflex-enabled games.

Newly improved cloud G-SYNC technology goes even further by varying the display refresh rate, delivering smooth and instantaneous frame updates on variable refresh rate monitors. It is fully optimized for G-SYNC capable monitors, providing members with the smoothest tear-free gaming experience from the cloud.

Ultimate members will also soon be able to utilize Reflex in supported titles at up to 4K resolution and 60 or 120 FPS streaming modes, for low-latency gaming on nearly any device.

With both Cloud G-SYNC and Reflex, members will feel as if they’re connected directly to GeForce NOW servers, for a visual experience that is smoother, clearer, and more immersive than ever before.

24 New G-SYNC Gaming Monitors, Displays & TVs Coming Soon

There are thousands of monitors, displays and TVs out there, but only the best receive the G-SYNC badge of honor. Monitors with dedicated G-SYNC modules push the limits of technology, reaching the highest possible refresh rates, and enabling advanced features such as G-SYNC esports mode. And G-SYNC ULTIMATE displays deliver stunning HDR experiences.

Hundreds of other displays are validated by NVIDIA as G-SYNC Compatible, giving confidence for buyers looking for displays that don’t blank, pulse, flicker, ghost, or otherwise artifact during Variable Refresh Rate (VRR) gaming. G-SYNC Compatible also ensures that a display operates in VRR at any game frame rate by supporting a VRR range of at least 2.4:1 (e.g. 60Hz-144Hz), and offers the gamer a seamless experience by enabling VRR by default on GeForce GPUs.
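The 2.4:1 range requirement is easy to check for any given display. Below is a minimal sketch that tests only the ratio quoted above; it does not represent NVIDIA's full validation process, and the function names are illustrative. `Fraction` is used so that the 60Hz-144Hz boundary case compares exactly.

```python
from fractions import Fraction

# Minimum VRR range ratio quoted in the text (2.4:1).
REQUIRED_RATIO = Fraction(12, 5)


def vrr_ratio(min_hz: int, max_hz: int) -> Fraction:
    """Return max/min refresh rate as an exact fraction."""
    if min_hz <= 0 or max_hz < min_hz:
        raise ValueError("expected 0 < min_hz <= max_hz")
    return Fraction(max_hz, min_hz)


def meets_required_ratio(min_hz: int, max_hz: int) -> bool:
    """True if the range spans at least the 2.4:1 ratio from the text."""
    return vrr_ratio(min_hz, max_hz) >= REQUIRED_RATIO


# 60-144Hz is the example from the text; 40-240Hz is the AW3225QF range
# quoted in this article; 48-100Hz is a made-up range that falls short.
for low, high in [(60, 144), (40, 240), (48, 100)]:
    ratio = float(vrr_ratio(low, high))
    print(f"{low}-{high}Hz -> {ratio:.2f}:1, ok={meets_required_ratio(low, high)}")
```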

Manufacturers are increasingly adopting VRR, and many incorporate game modes and additional features that enhance the PC experience, whether gaming on a monitor or TV. To ensure a flawless out-of-the-box experience, partners such as Alienware, AOC, ASUS, Dough, IO Data, LG, Philips, Thermaltake, and ViewSonic share their displays with us for testing and optimization.

At CES 2024, our partners unveiled 24 new G-SYNC gaming monitors, displays and TVs, from a 16-inch portable monitor all the way up to 97-inch TVs.

The Alienware AW3225QF, launching this January, is one of the world’s first 240Hz 4K OLED gaming monitors. Spanning 32 inches, the curved display has a wide VRR range from 40Hz up to its headline-grabbing 240Hz maximum refresh rate. Alienware boasts that the AW3225QF has a 0.03ms gray-to-gray minimum response time, Dolby Vision® HDR and VESA DisplayHDR True Black 400. And for peace of mind, there’s a three-year OLED burn-in warranty.

The Philips Evnia 49M2C8900 is one of the world’s first 5120x1440 (DQHD) 240Hz OLED gaming monitors, boasting a 48.9 inch curved screen. It’s DisplayHDR True Black 400 certified for increased color accuracy and brightness, and VESA ClearMR-certified for increased motion clarity. A Smart Image Game Mode tweaks the display output based on what you’re playing, and Philips’ Ambiglow technology, built into the back of the monitor, illuminates your surroundings with color that matches the on-screen action, heightening immersion.

LG came to CES with 4 new IPS monitors, and 5 new 2024 TV lines, with 17 different size options. The LG SIGNATURE OLED M4, the world’s first G-SYNC Compatible 144Hz TV, is one of their notable highlights. Now available in a 65-inch screen size, this thrilling addition presents diverse screen options for the striking wireless OLED TV lineup, offering selections ranging from the versatile 65-inch model to the massive 97-inch giant. Cleaner, distraction-free viewing is easily attainable with innovative wireless solutions completely free of cables, excepting the power cord, and the M4 model is the world’s first TV with wireless video and audio transmission at up to 4K 144Hz, delivering superior OLED performance with accurate details and an elevated sense of immersion.

Below, you can check out the full specs of all 24 new G-SYNC gaming monitors, displays and TVs, to see if there’s something that meets your requirements. If not, head to our complete list of G-SYNC displays, dialing in on the perfect display with our filters.

| Manufacturer | Model | Size (Inches) | Panel Type | Resolution | Refresh Rate |
|---|---|---|---|---|---|
| Alienware | AW3225QF | 32 | OLED | 3840x2160 (4K) | 240Hz |
| ASUS | ROG Swift PG27 series | 27 | IPS | 2560x1440 (QHD) | 360Hz |
| ASUS | PG49WCD | 49 | OLED | 5120x1440 (DQHD) | 144Hz |
| AOC | 16G3 | 16 | IPS | 1920x1080 (FHD) | 144Hz |
| AOC | 24G4 | 24 | IPS | 1920x1080 (FHD) | 165Hz |
| AOC | 27G4 | 27 | IPS | 1920x1080 (FHD) | 165Hz |
| AOC | PD49 | 49 | OLED | 5120x1440 (DQHD) | 240Hz |
| AOC | Q27G2SD | 27 | IPS | 2560x1440 (QHD) | 180Hz |
| Dough | ES07E2D | 27 | OLED | 2560x1440 (QHD) | 240Hz |
| IO Data | GCU271HXA | 27 | IPS | 3840x2160 (4K) | 160Hz |
| LG | 2024 4K M4 / G4 | 97 | OLED | 3840x2160 (4K) | 120Hz |
| LG | 2024 4K M4 / G4 | 83, 77, 65, 55 | OLED | 3840x2160 (4K) | 144Hz |
| LG | 2024 4K C4 series | 83, 77, 65, 55, 48, 42 | OLED | 3840x2160 (4K) | 144Hz |
| LG | 2024 4K CS series | 65, 55 | OLED | 3840x2160 (4K) | 120Hz |
| LG | 2024 4K B4 series | 77, 65, 55, 48 | OLED | 3840x2160 (4K) | 120Hz |
| LG | 24G560F | 24 | IPS | 1920x1080 (FHD) | 180Hz |
| LG | 27G560F | 27 | IPS | 1920x1080 (FHD) | 180Hz |
| LG | 27GR75QB | 27 | IPS | 2560x1440 (QHD) | 144Hz |
| LG | 32GP75A | 32 | IPS | 2560x1440 (QHD) | 165Hz |
| Philips | 25M2N3200 | 25 | IPS | 1920x1080 (FHD) | 180Hz |
| Philips | 27M1N5500P | 27 | IPS | 2560x1440 (QHD) | 240Hz |
| Philips | 49M2C8900 | 49 | OLED | 5120x1440 (DQHD) | 240Hz |
| Thermaltake | 27FTQB | 27 | IPS | 2560x1440 (QHD) | 165Hz |
| ViewSonic | XG272-2K-OLED | 27 | OLED | 2560x1440 (QHD) | 240Hz |

For word on future G-SYNC ULTIMATE, G-SYNC, and G-SYNC Compatible displays, keep an eye on our GeForce Game Ready Driver announcements, where support for new monitors, displays and TVs is noted.

G-SYNC At CES 2024

Whether you game on a monitor, display or TV, locally on a PC or laptop, or via GeForce NOW in the cloud, there are now even more ways to enhance your gaming experiences with G-SYNC technology.

G-SYNC technology on GeForce NOW makes the service’s fantastic streaming experience even better.

24 new G-SYNC gaming monitors, displays and TVs ensure there’s a perfectly smooth, stutter-free display for everyone.

And with the introduction of G-SYNC Pulsar, NVIDIA has addressed a challenge that has persisted for over a decade. G-SYNC Pulsar stands as a testament to NVIDIA's unwavering commitment to innovation and redefining the boundaries of gaming technology, offering gamers a visual experience that is smoother, clearer, and more immersive than ever before.

For news about new G-SYNC monitors, stay tuned to GeForce.com, where you can also check out our other announcements from CES 2024.