YeulEmeralda

Linux User

Think of it this way: the 4090 is already 3x faster than the PS5 even in raster (6x+ in RT). Even if the 5090 is "only" 20 to 30% stronger, we've got a behemoth of unimaginable power on our hands here.


I meant most game engines are built to accommodate the lowest common denominator, so all you're really getting with these insane GFX cards is ever-increasing frame rates at 4K. What I'm saying is that I'm not seeing games built from the ground up to take full advantage of these cards.

Hopefully my 4080 is gonna remain decent until the 60 series.

You're forgetting that people are willing to pay $/€100 more for RT and DLSS.

And it doesn't look like they plan on targeting the top end anytime soon.

The xx90 cards are so far beyond everything else that they are literally halo products.

The real fight is gonna be in the midrange, where we have to hope Intel's Battlemage brings the heat so Nvidia starts realizing they need to make compelling midrangers.

AMD really just needs to launch its directly competing cards well under Nvidia's prices... just leave the upper echelon to Nvidia and call it a day.

Remember the 5700 XT... that was AMD's range-topper for that generation... it fought against the 2070 for $100 less... a whole 100 dollars less... and they literally pressured Nvidia into making the Super series.

They need to do that again, but this time they have the advantage of having RT and FSR. It'll make their 8700XT a truly, truly better alternative to the 5070 (Ti)... If they can also make their 8900XT a much better deal than a 5080 (which shouldn't be hard based on leaks), then they should be golden. Nvidia can have the crown with the 5090, but at every other price point AMD and Intel should have a much better deal. Not a slightly better deal: a much better one.

At 100 dollars less for the competing card, Nvidia will either drop prices or lose that market segment (assuming consumers are smart).

Let's talk again when GTA 6 is out on PC; anything less than 4K60 is gonna be far from "gliding".

It will.

16GB of VRAM and pretty damn fast, especially if you count DLSS.

You'll easily glide through the generation till the RTX 60s, which will probably come with some new tech that makes the upgrade worthwhile.

You're forgetting that people are willing to pay $/€100 more for RT and DLSS.

Let's talk again when GTA 6 is out on PC; anything less than 4K60 is gonna be far from "gliding".

I meant 4K DLSS Quality, of course; 4K native is a waste when there is an almost-as-good option.

AMD and Intel both have good RT now, and they have FSR and XeSS.

In the midrange, paying 500 dollars vs 600 dollars for similar performance without RT, and only a minor loss with RT, should be compelling to users.

The 7800XT already has pretty strong RT.

In light RT workloads it could catch and at times beat the 4070.

In heavy RT workloads it only just misses the mark vs the 4060Ti.

Now, how many games are gonna throw the full barrage of RT features at you like Cyberpunk does? Who knows. But in lighter RT workloads, AMD is already right on the cusp of being simply better than Nvidia at midrange price points.

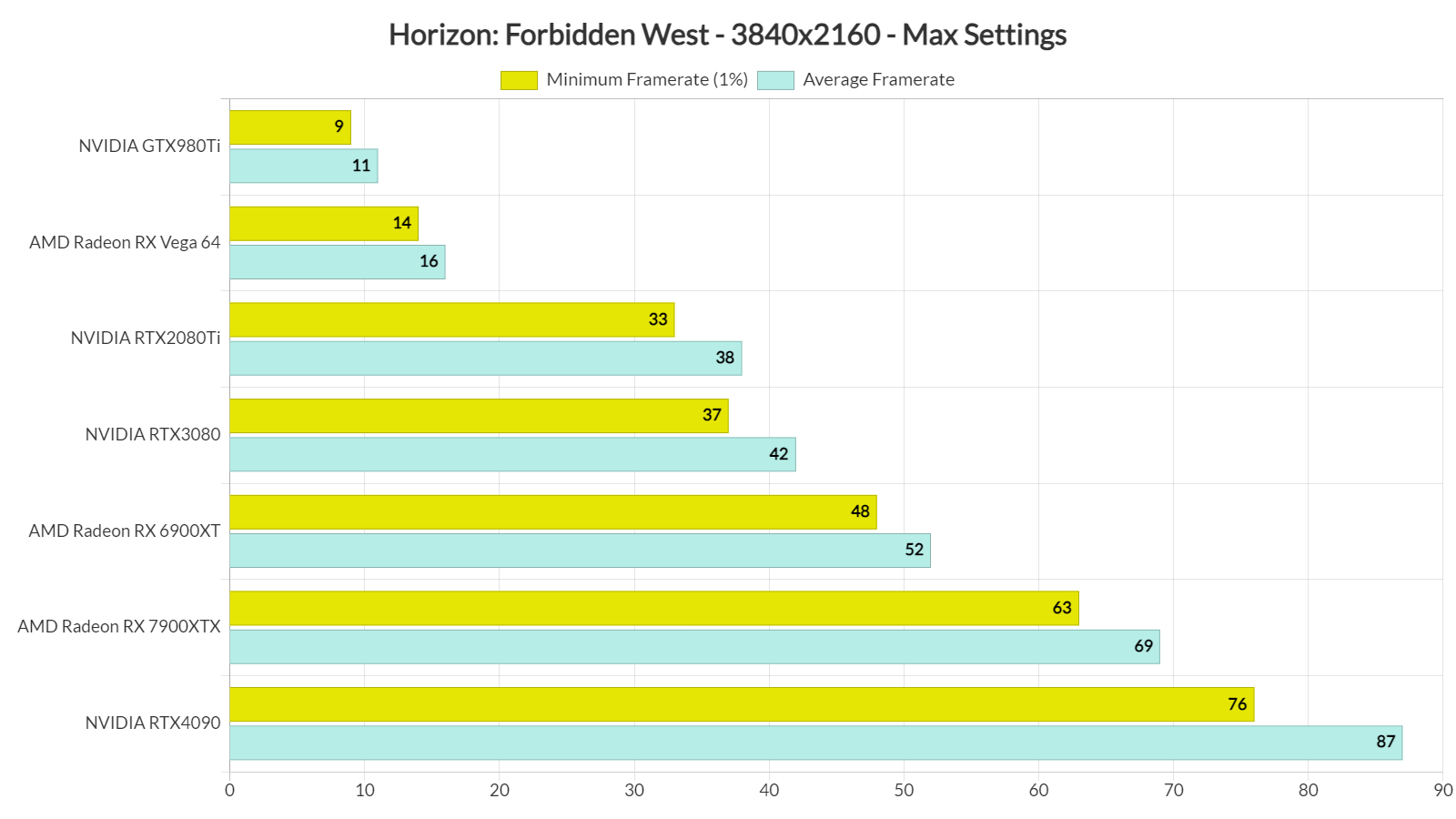

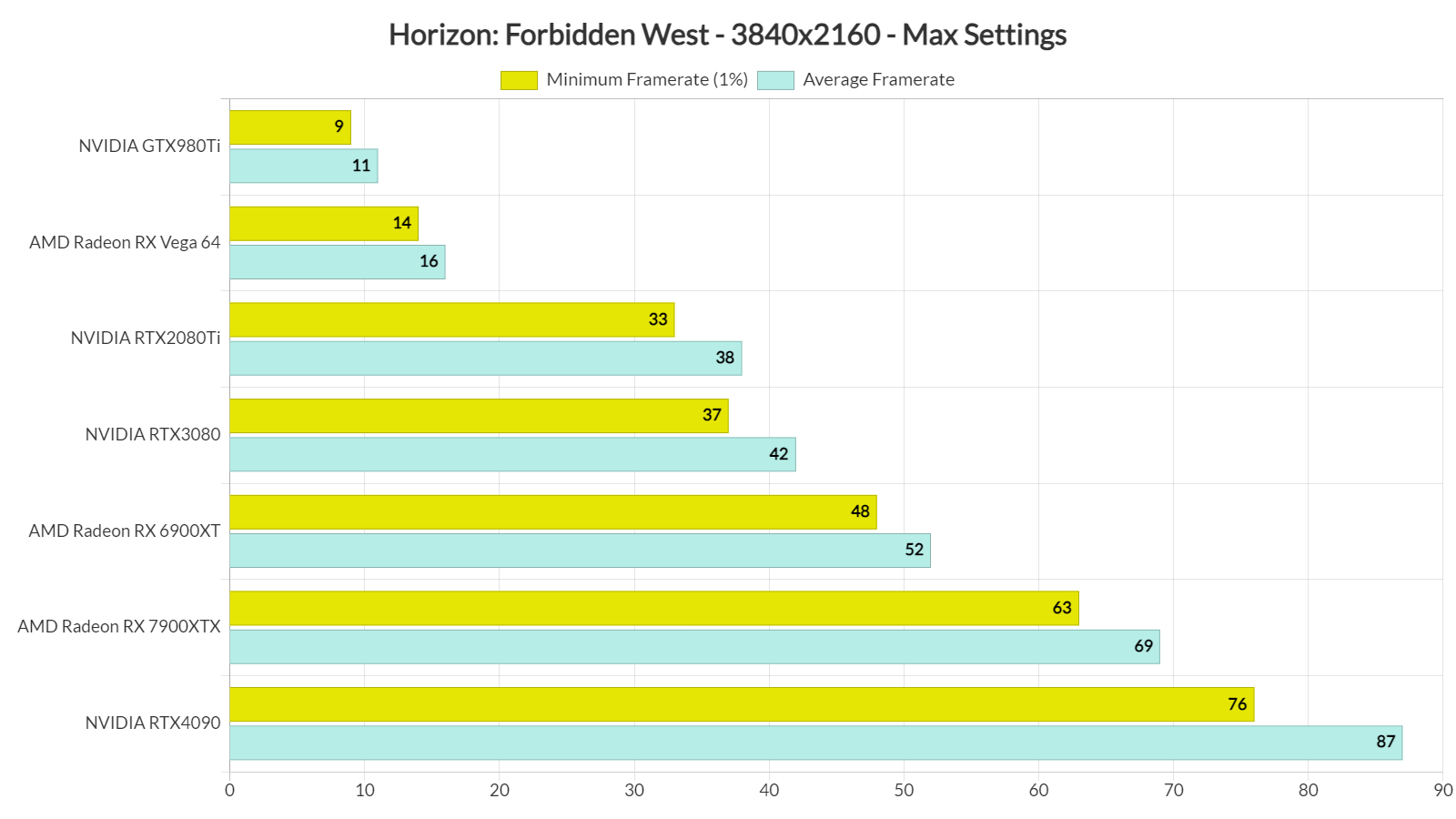

Bro, your 4080 is already struggling with native 4K60 now.

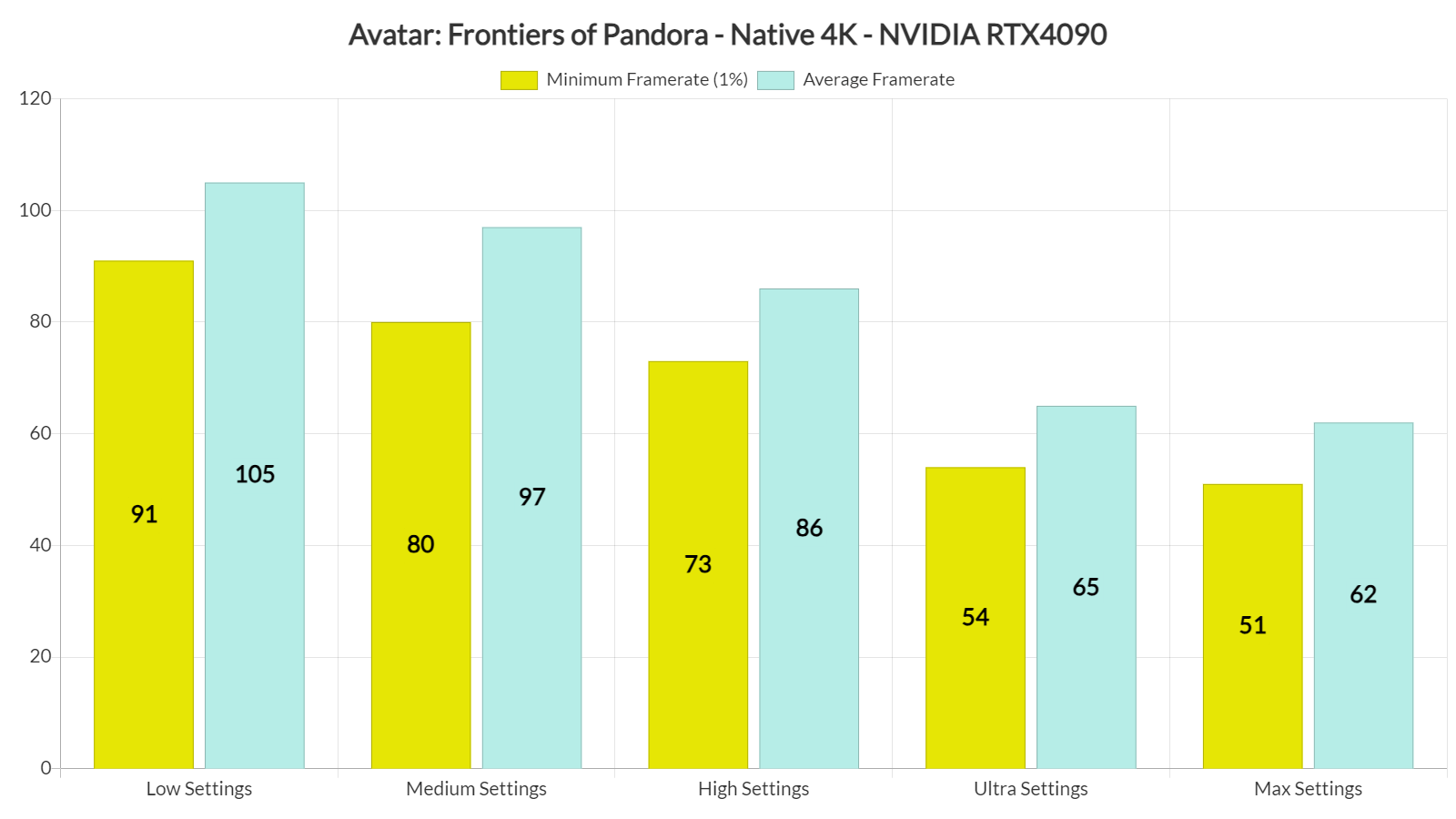

You really should be using DLSS; those Tensor cores aren't there to massage your back.

P.S. I actually don't think GTA 6 is going to be that punishing a game to run for PC gamers... unless they have an uber setting for real-time ray-traced global illumination, I think High settings will easily be doable with a 4080.

Whether that's native 4K60 or not, well, that's up to you... I never let my Tensor cores sleep.

I meant 4K DLSS Quality, of course; 4K native is a waste when there is an almost-as-good option.

And you’d be wrong, because most engines have a slew of features meant to take advantage of more advanced and powerful hardware. UE5 is supported on everything from the little Switch all the way up to the beefiest PC, with tools such as Nanite and hardware-accelerated ray tracing with Lumen. Look at the famous Matrix demo on UE5. That’s what the engine can do, and it certainly isn't built around the lowest common denominator.

I meant most game engines are built to accommodate the lowest common denominator, so all you're really getting with these insane GFX cards is ever-increasing frame rates at 4K. What I'm saying is that I'm not seeing games built from the ground up to take full advantage of these cards.

While my 4080 laptop (basically a desktop 4070) is doing wonders at 3440x1440 with DLSS and frame gen, I would like to have some more performance for path-tracing titles at 3440x1440 and for 4K gaming.

It's all going to come down to its performance and VRAM. This laptop is cutting it close with 12GB of VRAM. I would want double the VRAM on a 5080 if possible, but they're probably going to set it at 16GB.

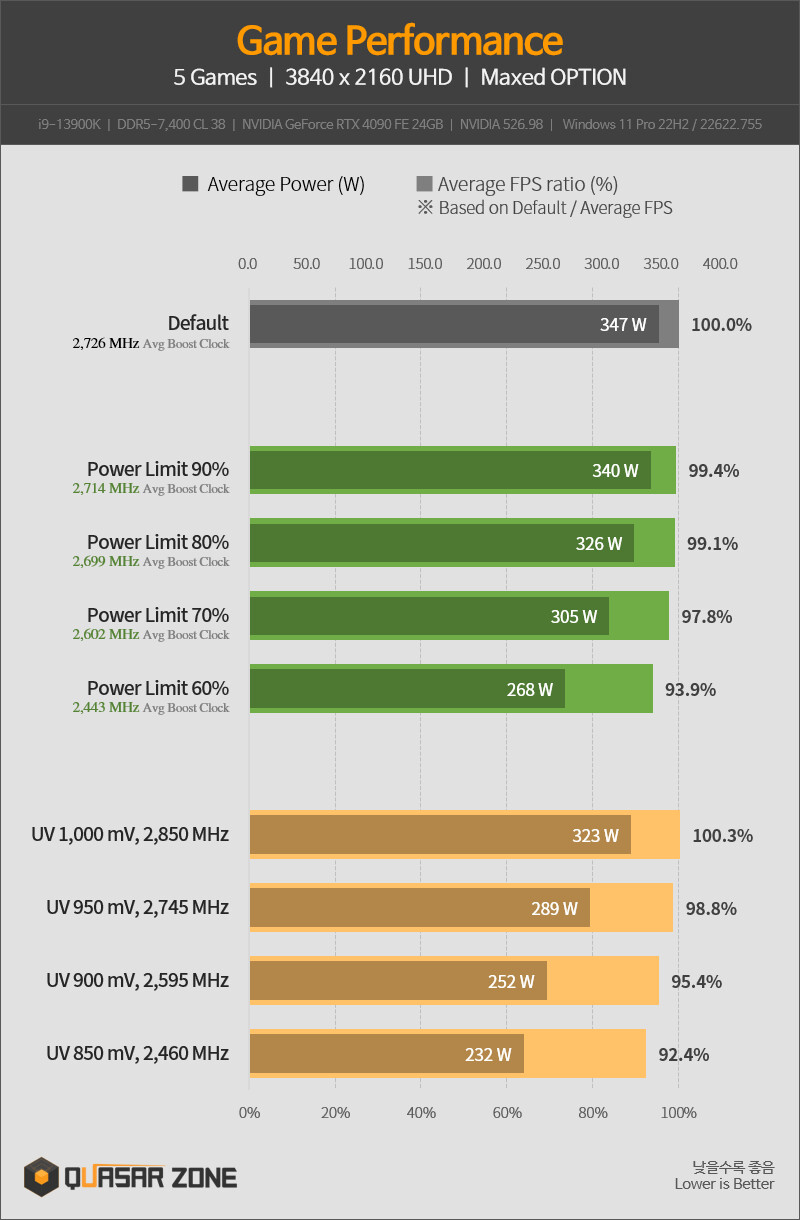

Then I could see myself being interested in a 5090 just for the VRAM, and just heavily underclocking it to get it into the 350W range.

We will see what the cards offer tho.

Do Nvidia cards usually have a decent margin for a meaningful underclock?

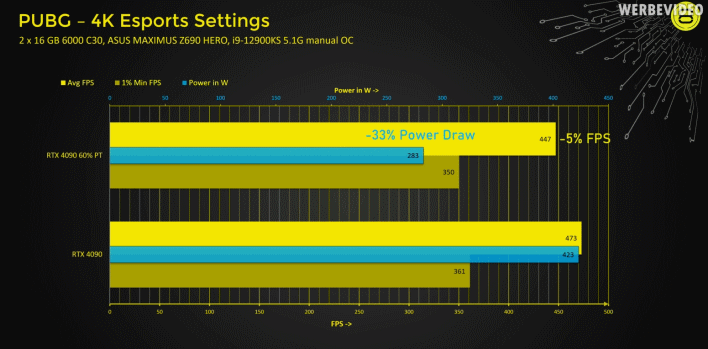

They do. At least Ada Lovelace does. My 4070 Ti runs 80-100W less (at 925mV @ 2715MHz) with a slight negative-curve undervolt in regular gaming, and averages around 170-200W in games. It performs around the same as the stock OC, with a slight memory clock bump. I can barely hear the GPU spin up with the massively oversized triple-fan cooler from MSI.

I think the requirements will not skyrocket in the near future because AAA games are made with consoles in mind. I expect even the 2080 Ti to run all current-gen games (at above-console settings) by the time the PS6 launches.

Hopefully my 4080 is gonna remain decent until the 60 series.

It will, no worries. We'll have the same tech on consoles for the next 2 years, so you can wait for the 6080 in 2027, with at least 50% more power than the 4080.

Hopefully my 4080 is gonna remain decent until the 60 series.


Do Nvidia cards usually have a decent margin for a meaningful underclock?

No idea to be honest, kinda wondering myself about it.

It will, no worries. We'll have the same tech on consoles for the next 2 years, so you can wait for the 6080 in 2027, with at least 50% more power than the 4080.

In certain games (like Spider-Man in this video), the RTX 4080 was around 80-95% faster than the RTX 3080, so IMO there's a good chance that the 5080 will be 50% faster than the 4080 (at least in some games). The 6080 will probably be over 2x faster.


Maybe for the 5090 over the 4090. Further down the stack, probably not. I can see the 5080 matching the 4090 in raster and maybe beating it in RT.

But maybe this happens because of the 10GB on the 3080. In raw power, the difference between the 3080 and the 4080 is 50%. The 3080's problem is its 10GB of VRAM. I don't believe in a 50% jump again this gen.

3x the power for >3x the cost just for the GPU (~5x the cost when you factor in the entire PC).

Think of it this way: the 4090 is already 3x faster than the PS5 even in raster (6x+ in RT). Even if the 5090 is "only" 20 to 30% stronger, we've got a behemoth of unimaginable power on our hands here.

I hadn't noticed the VRAM usage on the 4080; it's over 12GB in Spider-Man: Miles Morales, so it seems you're right.

But maybe this happens because of the 10GB on the 3080. In raw power, the difference between the 3080 and the 4080 is 50%. The 3080's problem is its 10GB of VRAM. I don't believe in a 50% jump again this gen.

Disable RT and even a 3060 can keep up.

I think the requirements will not skyrocket in the near future because AAA games are made with consoles in mind. I expect even the 2080 Ti to run all current-gen games (at above-console settings) by the time the PS6 launches.

I'm hearing rumors of 28GB.

So, will the 5090 at least come with 32 GB of VRAM? Otherwise, what's the fucking point of the card?

Indeed, the value proposition isn't good, but that's normal for top-end PC hardware. It's called bleeding edge for a reason: if you're an enthusiast, you literally gotta bleed cash xD

3x the power for >3x the cost just for the GPU (~5x the cost when you factor in the entire PC).

And I bet they'll still have the fucking gall to charge more than $1200 for it...

I'm hearing rumors of 28GB.

They releasing this year or no?

I am very curious what game Nvidia will use to promote the new cards. I’m guessing Wukong.

That’s honestly my biggest question right now. As a 4090 owner I’d love it if Nvidia made me feel like my current GPU is an obsolete piece of e-waste. But right now I don’t even have any games that would noticeably benefit from a more powerful GPU.

Why even bother comparing to pleb hardware like that?

Think of it this way: the 4090 is already 3x faster than the PS5 even in raster (6x+ in RT). Even if the 5090 is "only" 20 to 30% stronger, we've got a behemoth of unimaginable power on our hands here.

So, will the 5090 at least come with 32 GB of VRAM? Otherwise, what's the fucking point of the card?

I am thinking about work stuff, where I frequently hit 24 GB of VRAM on my 4090. And before you tell me that I am retarded for using a 4090 for work stuff: Nvidia themselves have marketed it for rendering and deep learning as well. So it's not like I am outside the intended use cases.

There's not a game in existence that's using 24 GB of VRAM yet. What are you even talking about?

Don't your apps use shared memory?

I am thinking about work stuff, where I frequently hit 24 GB of VRAM on my 4090. And before you tell me that I am retarded for using a 4090 for work stuff: Nvidia themselves have marketed it for rendering and deep learning as well. So it's not like I am outside the intended use cases.

Will still buy if the 5090 has a 512-bit bus.

Will downsample my dongus off.

I could do either that or use DBZ power levels.

Why even bother comparing to pleb hardware like that?

Shame they aren't getting announced until CES now.

They're just gonna get much less optimized. Developers will start using FG/DLSS as a crutch.

Imma be skipping this generation.

With DLSS constantly improving, I don't think games are gonna get that much more demanding.

I'm good.


Wait for the RTX 60s; they will likely have some actually new features.

Nvidia haven't even announced when their next GTC event will be... depending on its name, you'll have an idea whether they will announce the cards or not.

Too busy counting money to worry about this shit.

Remember Blackwell is being used both for their AI chips and for the consumer chips.

I don't think they are in any rush to start taking silicon away from Blackwell AI.

Indeed, the value proposition isn't good, but that's normal for top-end PC hardware. It's called bleeding edge for a reason: if you're an enthusiast, you literally gotta bleed cash xD

Edit: Same thing goes with CPUs.

For midrange and amazing value you go for, for example, the R7 5700X https://pcpartpicker.com/product/JmhFf7/amd-ryzen-7-5700x-34-ghz-8-core-processor-100-100000926wof at 160$, but the top of the top, the 7800X3D https://pcpartpicker.com/product/3hyH99/amd-ryzen-7-7800x3d-42-ghz-8-core-processor-100-100000910wof, is almost 400$. And yet the difference between them in min and avg FPS, even in the best-case scenario (a heavy CPU bottleneck), usually isn't even +50%.

They're just gonna get much less optimized. Developers will start using FG/DLSS as a crutch.

Who am I kidding? They already are!

TL;DR: next gen will be glorious for midrange/budget gamers, who can go AMD, spend $300-500 and get close to what they have to pay $900 for today, and for enthusiasts who can easily pay 2k USD as long as the performance is there. (The 2200 euros I paid in August 2021 for a 3080 Ti during the crypto boom is 2571€ now, exactly 2800 USD, and I've already got cash set aside for the next GPU/top-end PC; I'm just waiting for the right time and the launch of new hardware.)

Let's set expectations:

1. 50 series cards will be totally overpriced.

2. Path tracing is available in only a few games; it isn't performant today without frame gen and still struggles. 50 series cards will provide at best a 20% improvement, which is negligible.

3. Raster performance should be fantastic... but if AMD actually got off their asses and provided a decent competitor (not going to happen, as they're going midrange only)...

So we are stuck with Nvidia, who couldn't give a crap about consumers as they have their datacentre business, which is where they want to focus their wafers.

Intel is improving and making huge gains, but is playing catch-up on drivers.

You think they will use CES instead of GTC?

Or was this confirmed somewhere and I missed it?

They still have GTC Sept - Nov to announce them, then they can release and show them off at CES. If they announce at CES, chances are we'll only see the actual work they can do at GTC in March.