Bernkastel


On March 19, 2018, Microsoft announced DirectX Raytracing, with demos from Epic, Futuremark, and EA's SEED.

Announcing Microsoft DirectX Raytracing! - DirectX Developer Blog

If you just want to see what DirectX Raytracing can do for gaming, check out the videos from Epic, Futuremark and EA, SEED. To learn about the magic behind the curtain, keep reading. 3D Graphics is a Lie For the last thirty years, almost all games have used the same general...

3D Graphics is a Lie

DirectX Raytracing (DXR) Functional Spec

Engineering specs for DirectX features.

microsoft.github.io

Defining the Next Generation: An Xbox Series X|S Technology Glossary - Xbox Wire

[Editor’s Note: Updated on 10/21 at 11AM to ensure it is now reflective of the capabilities across both of our next-gen Xbox consoles following the unveil of Xbox Series S.] As we enter a new generation of console gaming with Xbox Series X and Xbox Series S, we’ve made a number of technology...

news.xbox.com


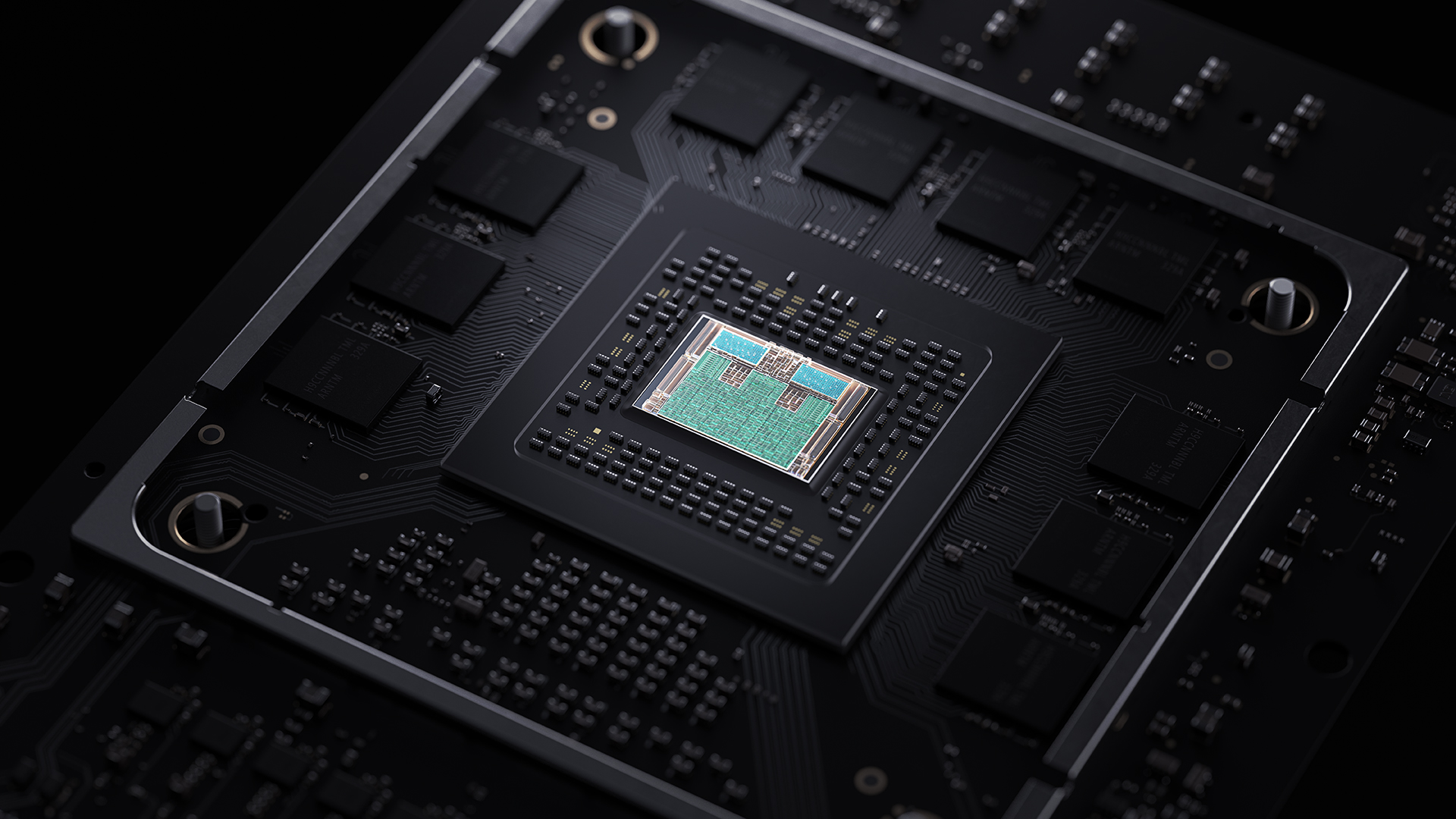

Hardware Accelerated DirectX Raytracing (DXR) – From improved lighting, shadows and reflections as well as more realistic acoustics and spatial audio, raytracing enables developers to create more physically accurate worlds. For the very first time in a game console, Xbox Series X includes support for high performance, hardware accelerated raytracing. Xbox Series X uses a custom-designed GPU leveraging the latest innovation from our partners at AMD and built in collaboration with the same team who developed DirectX Raytracing. Developers will be able to deliver incredibly immersive visual and audio experiences using the same techniques on PC and beyond.

With a blog post from AMD:

Microsoft & AMD Supercharge Console Gaming with the Xbox Series X

I am excited to share some of the details that Microsoft announced today on Xbox® Series X, and how it is built upon the partnership with the team at AMD. Together, we have relentlessly pushed the innovation boundaries of gaming devices for the last 15 years. Today, AMD and Microsoft are...

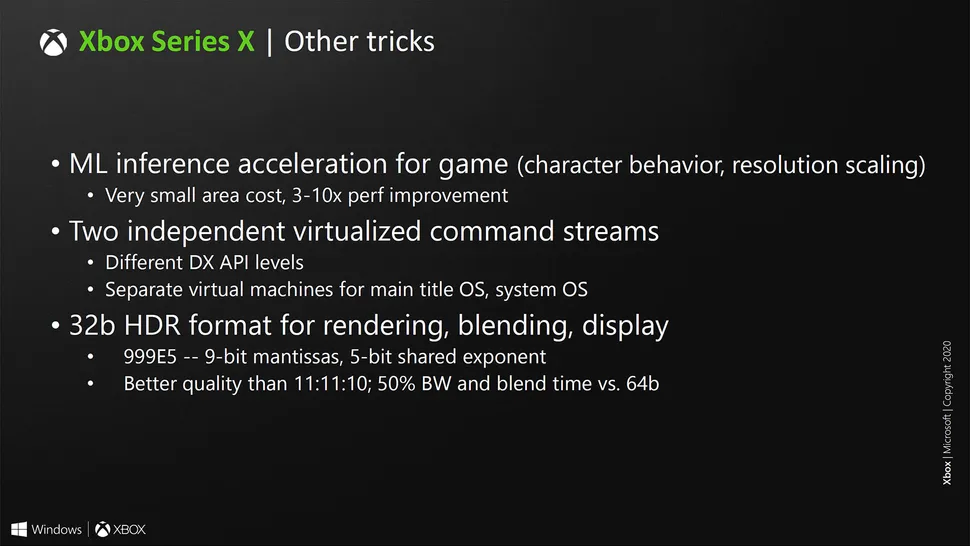

Another big game changer is DirectML....

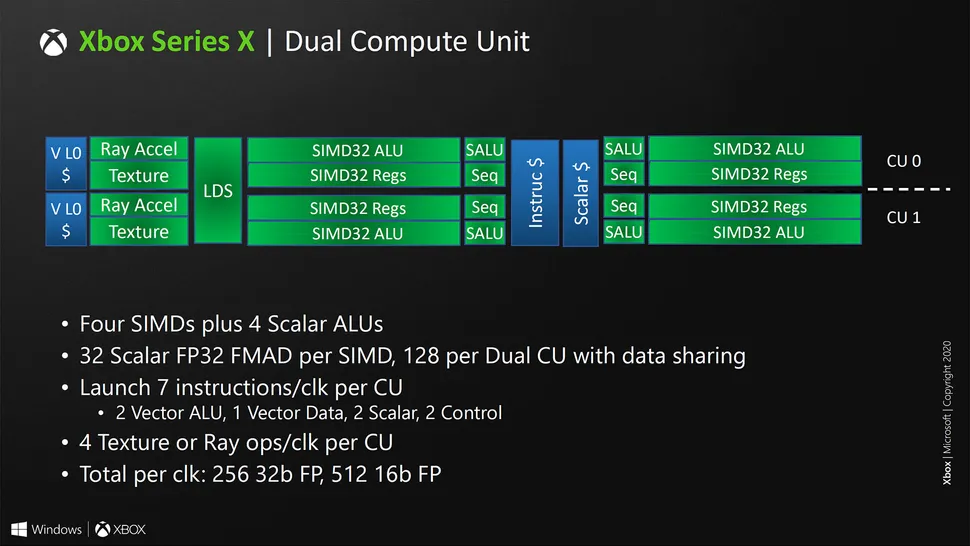

- A customized GPU based on next generation AMD RDNA 2 gaming architecture with 52 compute units to deliver 12 TFLOPS of single precision performance, enabling increases in graphics performance and hardware accelerated DirectX Raytracing and Variable Rate Shading.
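As a rough sanity check on the 12 TFLOPS figure, here is a minimal Python sketch of the usual peak-throughput arithmetic. Only the 52 compute units come from the AMD post; the 64 lanes per compute unit, 2 FLOPs per lane per clock (one fused multiply-add) and the 1.825 GHz clock are assumptions added here. Assuming throughput doubles each time the operand width halves, the same function also lands close to the lower-precision figures quoted elsewhere in this post (over 24 TFLOPS FP16, 49 TOPS INT8, over 97 TOPS INT4).

```python
# Peak-throughput sketch. Only COMPUTE_UNITS comes from the quoted AMD post;
# the other three constants are assumptions made for this illustration.
COMPUTE_UNITS = 52           # from the AMD blog post
LANES_PER_CU = 64            # assumption: shader lanes per compute unit
OPS_PER_LANE_PER_CLOCK = 2   # assumption: one fused multiply-add = 2 operations
CLOCK_GHZ = 1.825            # assumption: GPU clock


def peak_tera_ops(bits):
    """Theoretical peak in tera-operations per second for a given operand width.

    Assumes throughput doubles every time the operand width halves
    (32 -> 16 -> 8 -> 4 bits), which is a simplification.
    """
    giga_ops_32bit = COMPUTE_UNITS * LANES_PER_CU * OPS_PER_LANE_PER_CLOCK * CLOCK_GHZ
    return giga_ops_32bit / 1000.0 * (32 // bits)


for label, bits in [("FP32", 32), ("FP16", 16), ("INT8", 8), ("INT4", 4)]:
    print(f"{label}: {peak_tera_ops(bits):.2f}")
```

These are theoretical peaks; real workloads will land below them.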

The Xbox Series X SoC was architected for the next generation of DirectX API extensions with hardware acceleration for Raytracing, and Variable Rate Shading. Raytracing is especially one of the most visible new features for gamers, which simulates the properties of light, in real time, more accurately than any technology before it. Realistic lighting completely changes a game and the gamer’s perception.

...

The Xbox Series X is the biggest generational leap of SoC and API design that we’ve done with Microsoft, and it’s an honor for AMD to partner with Microsoft for this endeavor.

DirectML – Xbox Series X supports Machine Learning for games with DirectML, a component of DirectX. DirectML leverages unprecedented hardware performance in a console, benefiting from over 24 TFLOPS of 16-bit float performance and over 97 TOPS (trillion operations per second) of 4-bit integer performance on Xbox Series X. Machine Learning can improve a wide range of areas, such as making NPCs much smarter, providing vastly more lifelike animation, and greatly improving visual quality.

DirectML was part of Nvidia's SIGGRAPH 2018 tech talk:

NVIDIA On-Demand

A searchable database of content from GTCs and various other events.

on-demand.gputechconf.com

At 19:06

At 19:28

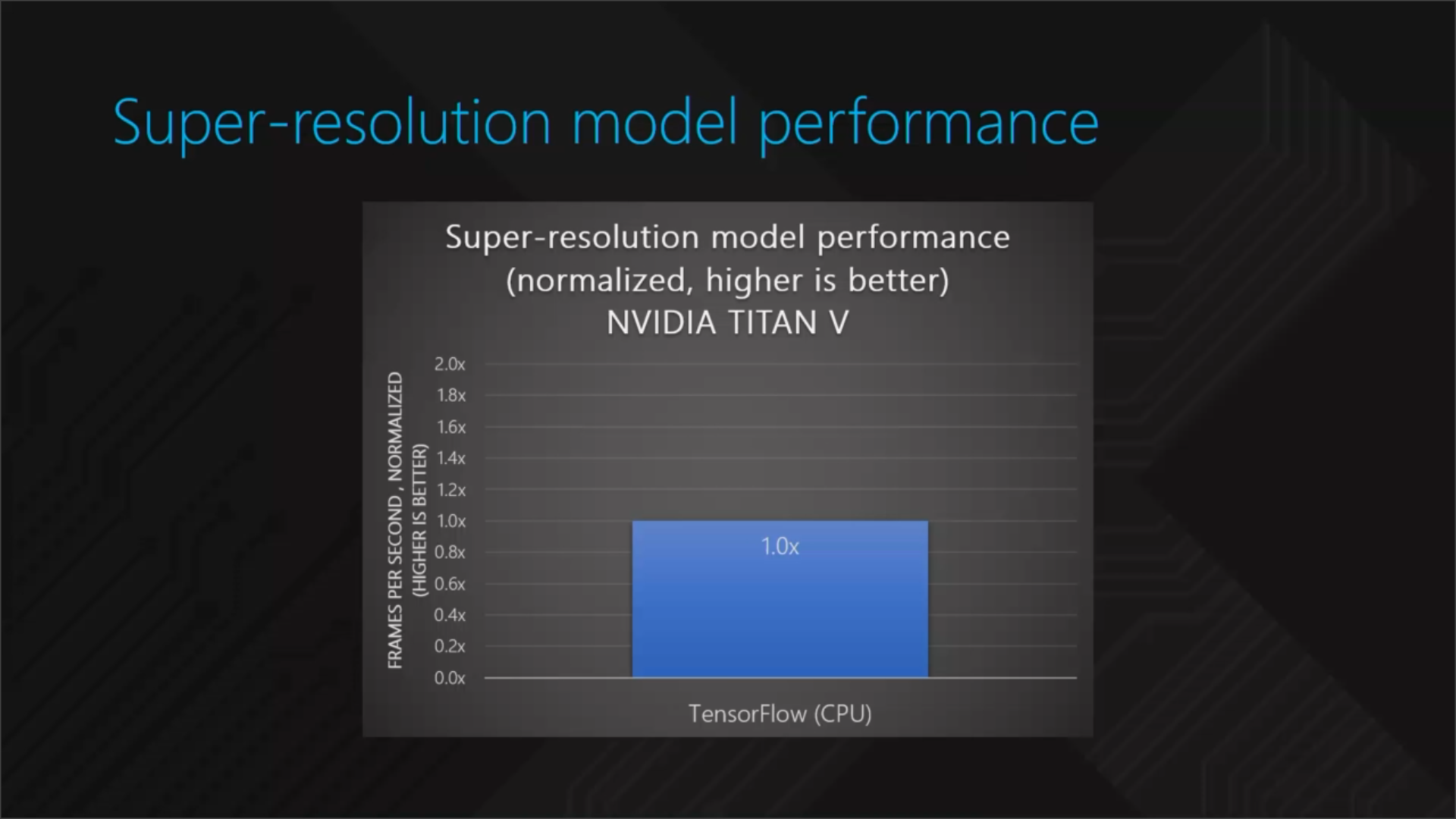

Performance comparison at 22:38

Gaming with Windows ML - DirectX Developer Blog

Neural Networks Will Revolutionize Gaming Earlier this month, Microsoft announced the availability of Windows Machine Learning. We mentioned the wide-ranging applications of WinML on areas as diverse as security, productivity, and the internet of things. We even showed how WinML can be used to...

PS: DirectML is the part of Windows ML meant for gaming.

We couldn’t write a graphics blog without calling out how DNNs (Deep Neural Networks) can help improve the visual quality and performance of games. Take a close look at what happens when NVIDIA uses ML to up-sample this photo of a car by 4x. At first the images will look quite similar, but when you zoom in close, you’ll notice that the car on the right has some jagged edges, or aliasing, and the one using ML on the left is crisper. Models can learn to determine the best color for each pixel to benefit small images that are upscaled, or images that are zoomed in on. You may have had the experience when playing a game where objects look great from afar, but when you move close to a wall or hide behind a crate, things start to look a bit blocky or fuzzy – with ML we may see the end of those types of experiences.

DirectML Technology Overview

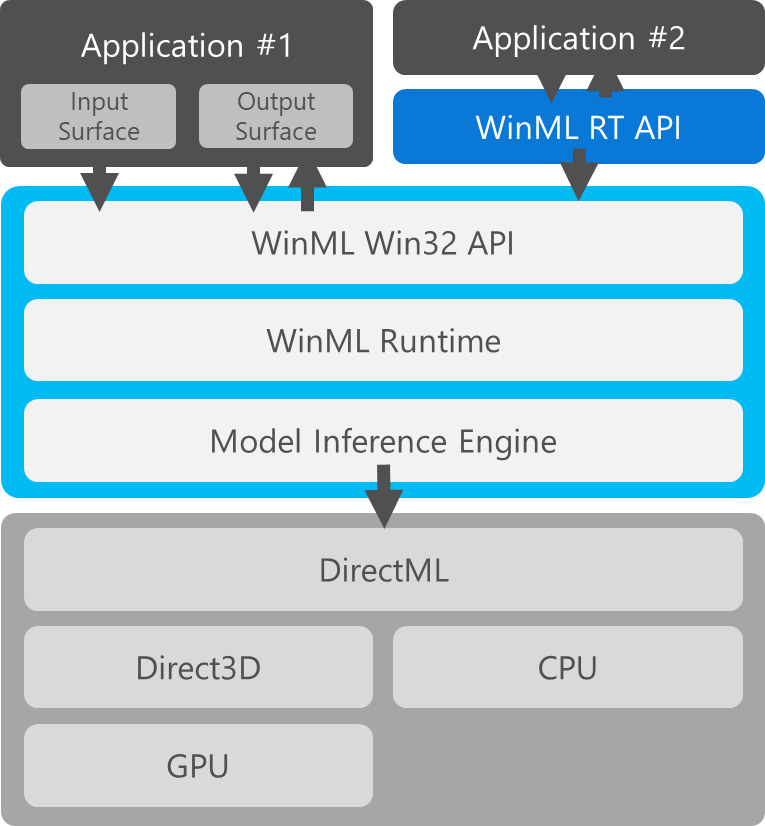

We know that performance is a gamer’s top priority. So, we built DirectML to provide GPU hardware acceleration for games that use Windows Machine Learning. DirectML was built with the same principles of DirectX technology: speed, standardized access to the latest in hardware features, and most importantly, hassle-free for gamers and game developers – no additional downloads, no compatibility issues – everything just works. To understand how DirectML fits within our portfolio of graphics technology, it helps to understand what the Machine Learning stack looks like and how it overlaps with graphics.

DirectML is built on top of Direct3D because D3D (and graphics processors) are very good for matrix math, which is used as the basis of all DNN models and evaluations. In the same way that High Level Shader Language (HLSL) is used to execute graphics rendering algorithms, HLSL can also be used to describe parallel algorithms of matrix math that represent the operators used during inference on a DNN. When executed, this HLSL code receives all the benefits of running in parallel on the GPU, making inference run extremely efficiently, just like a graphics application.
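To make the "matrix math" point concrete, here is a minimal sketch of one fully connected DNN layer written as a matrix multiply plus bias and ReLU. It is plain Python for readability, not DirectML or HLSL code, and the function names and sample numbers are made up for illustration.

```python
# Illustrative only: a single dense layer as matrix math.
# None of these names belong to DirectML, Direct3D or HLSL.

def matmul(a, b):
    """Multiply an (n x k) matrix by a (k x m) matrix, both given as lists of rows."""
    inner, cols = len(b), len(b[0])
    assert all(len(row) == inner for row in a), "inner dimensions must match"
    # Every output element is an independent dot product, which is why
    # this work maps well onto many GPU threads running in parallel.
    return [[sum(row[k] * b[k][j] for k in range(inner)) for j in range(cols)]
            for row in a]


def dense_layer(inputs, weights, bias):
    """Compute relu(inputs @ weights + bias) for a batch of input rows."""
    z = matmul(inputs, weights)
    return [[max(0.0, value + bias[j]) for j, value in enumerate(row)] for row in z]


batch = [[1.0, 2.0], [0.5, -1.0]]                # two samples, two features each
weights = [[1.0, -1.0, 0.5], [0.0, 2.0, -0.5]]   # 2 inputs -> 3 neurons
bias = [0.0, 1.0, 0.25]

output = dense_layer(batch, weights, bias)
print(output)  # [[1.0, 4.0, 0.0], [0.5, 0.0, 1.0]]
```

Inference on a full network is essentially many of these layers chained together, which is the workload the blog describes running on the GPU.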

In DirectX, games use graphics and compute queues to schedule each frame rendered. Because ML work is considered compute work, it is run on the compute queue alongside all the scheduled game work on the graphics queue. When a model performs inference, the work is done in D3D12 on compute queues. DirectML efficiently records command lists that can be processed asynchronously with your game. Command lists contain machine learning code with instructions to process neurons and are submitted to the GPU through the command queue. This helps to integrate in machine learning workloads with graphics work, which makes bringing ML models to games more efficient and it gives game developers more control over synchronization on the hardware.

Hardware & Tech News - OC3D.net

OC3D is where you can find the latest PC Hardware and Gaming News & Reviews. Get updates on GPUs, Motherboards, CPUs, and more.

Inside Xbox Series X: the full specs

This is it. After months of teaser trailers, blog posts and even the occasional leak, we can finally reveal firm, hard …

Machine learning is a feature we've discussed in the past, most notably with Nvidia's Turing architecture and the firm's DLSS AI upscaling. The RDNA 2 architecture used in Series X does not have tensor core equivalents, but Microsoft and AMD have come up with a novel, efficient solution based on the standard shader cores. With over 12 teraflops of FP32 compute, RDNA 2 also allows for double that with FP16 (yes, rapid-packed math is back). However, machine learning workloads often use much lower precision than that, so the RDNA 2 shaders were adapted still further.
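"Lower precision" in practice means storing weights as small integers, such as the 8-bit and 4-bit formats behind the TOPS figures in this post. Below is a minimal Python sketch of one common approach, symmetric quantization, followed by an integer dot product. The scheme, function names and sample values are assumptions for illustration; the article does not say which quantization method any Series X title uses.

```python
# Illustrative sketch of symmetric integer quantization (an assumed scheme,
# not something described in the article).

def quantize(values, bits):
    """Map floats to signed integers of the given width, plus a scale factor."""
    qmax = 2 ** (bits - 1) - 1               # 127 for 8-bit, 7 for 4-bit
    peak = max(abs(v) for v in values)
    scale = peak / qmax if peak else 1.0
    quantized = [max(-qmax, min(qmax, round(v / scale))) for v in values]
    return quantized, scale


def quantized_dot(weights, inputs, bits):
    """Approximate a float dot product using only integer multiply-adds."""
    q_weights, weight_scale = quantize(weights, bits)
    q_inputs, input_scale = quantize(inputs, bits)
    integer_sum = sum(w * x for w, x in zip(q_weights, q_inputs))
    # A single float multiply at the end converts back to real units.
    return integer_sum * weight_scale * input_scale


weights = [0.6, -0.3, 1.0, -0.8]
inputs = [1.0, 2.0, -1.5, 0.4]
exact = sum(w * x for w, x in zip(weights, inputs))

for bits in (8, 4):
    approx = quantized_dot(weights, inputs, bits)
    print(f"{bits}-bit: approx={approx:.3f} exact={exact:.3f}")
```

The narrower format is cheaper to compute but less accurate, which is the trade-off a developer would be making when choosing between 8-bit and 4-bit inference.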

"We knew that many inference algorithms need only 8-bit and 4-bit integer positions for weights and the math operations involving those weights comprise the bulk of the performance overhead for those algorithms," says Andrew Goossen. "So we added special hardware support for this specific scenario. The result is that Series X offers 49 TOPS for 8-bit integer operations and 97 TOPS for 4-bit integer operations. Note that the weights are integers, so those are TOPS and not TFLOPs. The net result is that Series X offers unparalleled intelligence for machine learning."

...

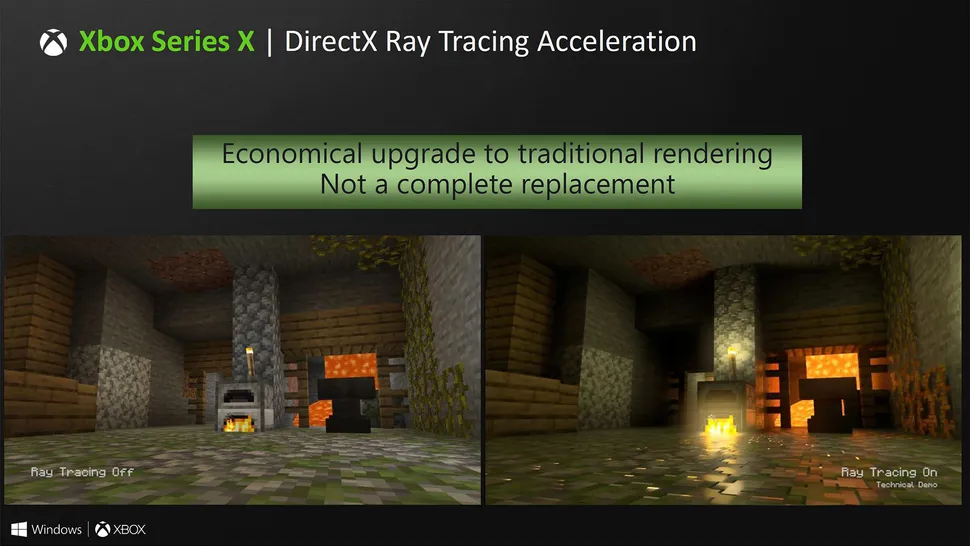

However, the big innovation is clearly the addition of hardware accelerated ray tracing. This is hugely exciting and at Digital Foundry, we've been tracking the evolution of this new technology via the DXR and Vulkan-powered games we've seen running on Nvidia's RTX cards and the console implementation of RT is more ambitious than we believed possible.

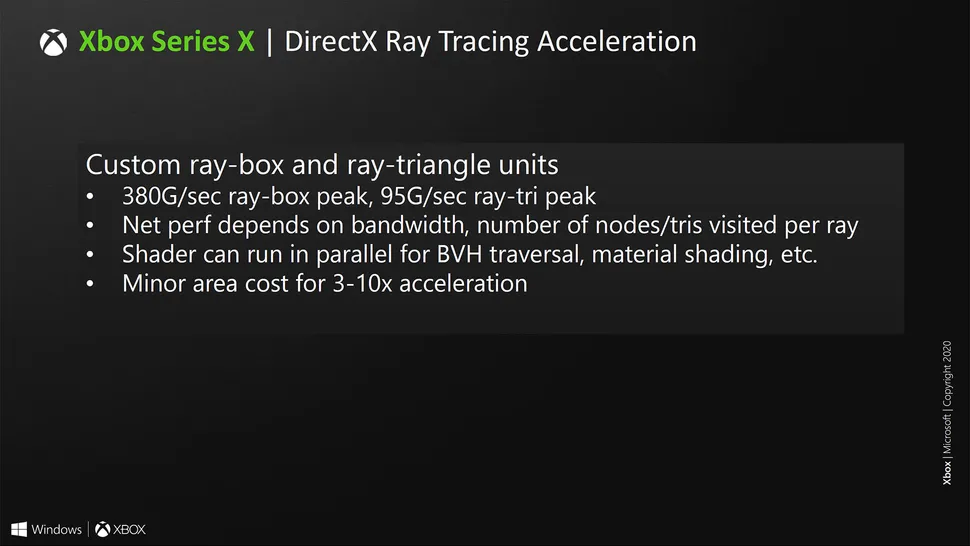

RDNA 2 fully supports the latest DXR Tier 1.1 standard, and similar to the Turing RT core, it accelerates the creation of the so-called BVH structures required to accurately map ray traversal and intersections, tested against geometry. In short, in the same way that light 'bounces' in the real world, the hardware acceleration for ray tracing maps traversal and intersection of light at a rate of up to 380 billion intersections per second.
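A BVH wraps scene geometry in nested bounding boxes, and traversal keeps asking one question: does this ray hit this box? Here is a minimal Python sketch of that ray-box test using the standard slab method. It is purely illustrative; it is not the DXR API and says nothing about how the RDNA 2 hardware implements the test.

```python
import math


def ray_hits_box(origin, direction, box_min, box_max):
    """Slab test: True if origin + t * direction (t >= 0) touches the box.

    The box is axis-aligned and given by its minimum and maximum corners.
    """
    t_near, t_far = 0.0, math.inf
    for o, d, lo, hi in zip(origin, direction, box_min, box_max):
        if d == 0.0:
            # Ray runs parallel to this pair of planes: it can only hit
            # if it already starts between them.
            if o < lo or o > hi:
                return False
            continue
        t0, t1 = (lo - o) / d, (hi - o) / d
        if t0 > t1:
            t0, t1 = t1, t0
        # Shrink the interval of t values that lie inside every slab so far.
        t_near, t_far = max(t_near, t0), min(t_far, t1)
        if t_near > t_far:
            return False
    return True


unit_box = ((0.0, 0.0, 0.0), (1.0, 1.0, 1.0))
print(ray_hits_box((-1.0, 0.5, 0.5), (1.0, 0.0, 0.0), *unit_box))  # True
print(ray_hits_box((-1.0, 2.0, 0.5), (1.0, 0.0, 0.0), *unit_box))  # False
```

In a BVH, a miss on a parent box lets traversal skip everything inside it, which is what keeps the number of tests per ray manageable.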

"Without hardware acceleration, this work could have been done in the shaders, but would have consumed over 13 TFLOPs alone," says Andrew Goossen. "For the Series X, this work is offloaded onto dedicated hardware and the shader can continue to run in parallel with full performance. In other words, Series X can effectively tap the equivalent of well over 25 TFLOPs of performance while ray tracing."

It is important to put this into context, however. While workloads can operate at the same time, calculating the BVH structure is only one component of the ray tracing procedure. The standard shaders in the GPU also need to pull their weight, so elements like the lighting calculations are still run on the standard shaders, with the DXR API adding new stages to the GPU pipeline to carry out this task efficiently. So yes, RT is typically associated with a drop in performance and that carries across to the console implementation, but with the benefits of a fixed console design, we should expect to see developers optimise more aggressively and also to innovate. The good news is that Microsoft allows low-level access to the RT acceleration hardware.

"[Series X] goes even further than the PC standard in offering more power and flexibility to developers," reveals Goossen. "In grand console tradition, we also support direct to the metal programming including support for offline BVH construction and optimisation. With these building blocks, we expect ray tracing to be an area of incredible visuals and great innovation by developers over the course of the console's lifetime."

The proof of the pudding is in the tasting, of course. During our time at the Redmond campus, Microsoft demonstrated how fully featured the console's RT features are by rolling out a very early Xbox Series X Minecraft DXR tech demo, which is based on the Minecraft RTX code we saw back at Gamescom last year and looks very similar, despite running on a very different GPU. This suggests an irony of sorts: base Nvidia code adapted and running on AMD-sourced ray tracing hardware within Series X. What's impressive about this is that it's fully path-traced. Aside from the skybox and the moon in the demo we saw, there are no rasterised elements whatsoever. The entire presentation is ray traced, demonstrating that despite the constraints of having to deliver RT in a console with a limited power and silicon budget, Xbox Series X is capable of delivering the most ambitious, most striking implementation of ray tracing - and it does so in real time.

Minecraft DXR is an ambitious statement - total ray tracing, if you like - but we should expect to see the technology used in very different ways. "We're super excited for DXR and the hardware ray tracing support," says Mike Rayner, technical director of the Coalition and Gears 5. "We have some compute-based ray tracing in Gears 5, we have ray traced shadows and the [new] screen-space global illumination is a form of ray traced screen-based GI and so, we're interested in how the ray tracing hardware can be used to take techniques like this and then move them out to utilising the DXR cores.

"I think, for us, the way that we've been thinking about it is as we look forward, we think hybrid rendering between traditional rendering techniques and then using DXR - whether for shadows or GI or adding reflections - are things that can really augment the scene and [we can] use all of that chip to get the best final visual quality."

...

Microsoft ATG principal software engineer Claude Marais showed us how a machine learning algorithm using Gears 5's state-of-the-art HDR implementation is able to infer a full HDR implementation from SDR content on any back-compat title. It's not fake HDR either, Marais rolled out a heatmap mode showing peak brightness for every on-screen element, clearly demonstrating that highlights were well beyond the SDR range.

Microsoft’s Game Stack chief: The next generation of games and game development

James Gwertzman, general manager of Microsoft Game Stack, gets to see how thousands of games are developed and run. He talked about the future of games.

Journalist: How hard is game development going to get for the next generation? For PlayStation 5 and Xbox Series X? The big problem in the past was when you had to switch to a new chip, like the Cell. It was a disaster. PlayStation 3 development was painful and slow. It took years and drove up costs. But since you’re on x86, it shouldn’t happen, right? A lot of those painful things go away because it’s just another faster PC. But what’s going to be hard? What’s the next bar that everybody is going to shoot for that’s going to give them a lot of pain, because they’re trying to shoot too high?

Gwertzman: You were talking about machine learning and content generation. I think that’s going to be interesting. One of the studios inside Microsoft has been experimenting with using ML models for asset generation. It’s working scarily well. To the point where we’re looking at shipping really low-res textures and having ML models uprez the textures in real time. You can’t tell the difference between the hand-authored high-res texture and the machine-scaled-up low-res texture, to the point that you may as well ship the low-res texture and let the machine do it.

Journalist: Can you do that on the hardware without install time?

Gwertzman: Not even install time. Run time.

Journalist: To clarify, you’re talking about real time, moving around the 3D space, level of detail style?

Gwertzman: Like literally not having to ship massive 2K by 2K textures. You can ship tiny textures.

Journalist: Are you saying they’re generated on the fly as you move around the scene, or they’re generated ahead of time?

Gwertzman: The textures are being uprezzed in real time.

Journalist: So you can fit on one blu-ray.

Gwertzman: The download is way smaller, but there’s no appreciable difference in game quality. Think of it more like a magical compression technology. That’s really magical. It takes a huge R&D budget. I look at things like that and say — either this is the next hard thing to compete on, hiring data scientists for a game studio, or it’s a product opportunity. We could be providing technologies like this to everyone to level the playing field again.

Journalist: Where does the source data set for that come from? Do you take every texture from every game that ships under Microsoft Game Studios?

Gwertzman: In this case, it only works by training the models on very specific sets. One genre of game. There’s no universal texture map. That would be kind of magical. It’s more like, if you train it on specific textures and then you — it works with those, but it wouldn’t work with a whole different set.

Journalist: So you still need an artist to create the original set.

Journalist: Are there any legal considerations around what you feed into the model?

Gwertzman: It’s especially good for photorealism, because that adds tons of data. It may not work so well for a fantasy art style. But my point is that I think the fact that that’s a technology now — game development has always been hard in terms of the sheer number of disciplines you have to master. Art, physics, geography, UI, psychology, operant conditioning. All these things we have to master. Then we add backend services and latency and multiplayer, and that’s hard enough. Then we added microtransactions and economy management and running your own retail store inside your game. Now we’re adding data science and machine learning. The barrier seems to be getting higher and higher.

That’s where I come in. At heart, Microsoft is a productivity company. Our employee badge says on the back, the company mission is to help people achieve more. How do we help developers achieve more? That’s what we’re trying to figure out.

Scorn Interview - Xbox Series X Is a 'Very Balanced System'; Trailer Was Running on an RTX 2080Ti

We talk to the Game Director of Scorn. He claims that the Xbox Series X is a very balanced system and clarifies the GPU used in the trailer.

Xbox Series X also features support for DirectML. Are you planning to use the Machine Learning API in some ways for Scorn?

DirectML and NVIDIA's DLSS 2.0 are very interesting solutions when the game is not hitting the desired performance and it feels like these solutions could help players with weaker systems quite substantially. A lot of these new features have been at our disposal for a very limited amount of time. We will try our best to give players as many options as possible.
