SquiddyCracker

Banned

Transhuman

Member

(Today, 09:05 PM)

I suggest his name is changed to Human.

So, transhumanism/extropianism is obviously about avoiding death.

Does anyone know of any articles on how long this avoidance of death can be prolonged, what with the end of the universe and all?

Is it basically a situation of "Well, if we haven't solved that by then, we'll probably have come to accept or even long for death"?

Who's gonna pay for all this stuff? Would people even reproduce anymore? Those are the two most frightening questions I associate with this future (should it happen). Class warfare on the scale of genocide would likely occur. The ability to have the power with none of the responsibility or enlightenment is too scary to comprehend. Because of the cost, the trajectory I imagine involves a heavily militarized segment of government shaped by the super rich. Do we really want those types to have more power than they already do? I also worry about the poor being harvested for organs or replacement parts because the metahumans with power have changed the definition of what "human" is.

I recently caught about 2 minutes of some American science doc presented by Neil Tyson and it had Ray Kurzweil sitting in his kitchen saying he takes 150 pills a day. Anyone know what that is all about? I am really interested in h+ but that totally threw me. What can he possibly be taking 150 pills a day for?

His theory is that if you can replace all the breaking-down parts of your body, you can slow aging. Apparently it's working for him, but he's also got a messed-up heart that might take him out.

What if we didn't recognize this and thought that we had conquered death, when in reality we had not? The dying person's conscious presence leaves, and only the person's behavior persists in an AI. Yet we all think we're immortal! Food for thought.

I was thinking that one of the first body augmentations I want to get is small speakers built into my ears that can connect via Bluetooth (or some future wireless tech).

It's unlikely we'd harvest organs from the poor. We can already grow some organs in the lab from the host's stem cells, so there is no chance of rejection.

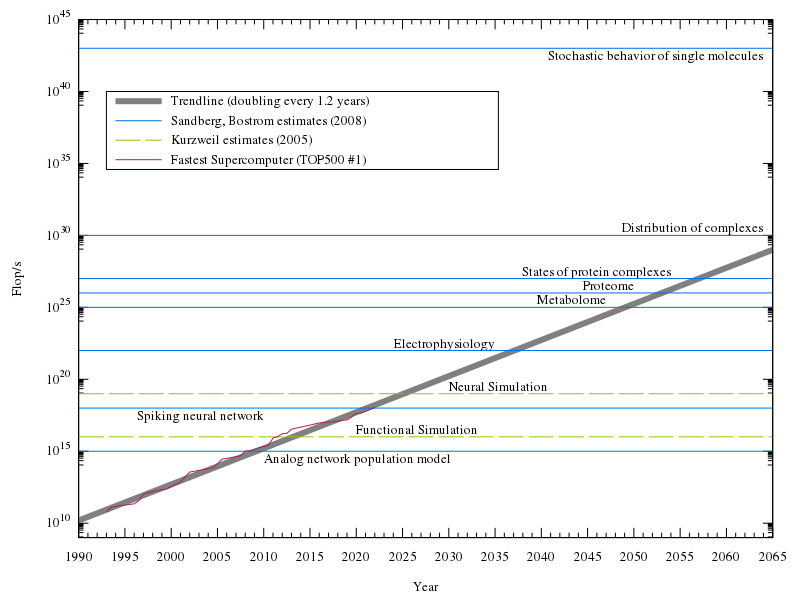

We do need to shift away from capitalist societies at some point, because automation is soon going to push everyone out of the job market. I think the next 20-40 years will be very tumultuous, but if we survive them, things should become much more ideal afterwards.

I think the point you make about automation taking over large sectors of the job market is much more of a real possibility than some people currently believe. I don't know where displaced workers are going to go, and I likewise believe the capitalist system will become inadequate, unable to support the labor capacity left on its doorstep by the progress of technology. You could say the labor of building machines is work, but then you have ideas such as self-replicating robots, in which case you'd need an even smaller human labor force for construction.

Also, do you have a source for this? I'm interested in hearing whether there are any reports or documentation on other negative effects it has had on his body.

Is it foolish to assume that once the Singularity occurs, we shall either be immortals, or we will all be wiped out?

I see two scenarios once it happens:

(A) Technological singularity occurs. After that event, the machine keeps creating a better version of itself, each version creating a more advanced machine, until we reach the Godhead: a machine that cannot be upgraded, the absolute pinnacle. After that, there are no limits.

(B) Technological singularity occurs. The machine is sentient and starts the chain of events that leads to our extinction.

To me, the singularity is the key to all this. I hope it truly happens in our lifetime.

It is also possible that someone will intentionally engineer our destruction.

Imagine a rich cult, obsessed with the "apocalypse", what's there to stop them from creating a progressively developing A.I. programmed to destroy humanity?

As technology becomes more and more ubiquitous, it's possible that the processing power required to jumpstart the creation of such an A.I. might end up in the hands of even a small group of individuals.

Transhumanism? Extropianism? Seems a little bit like science fiction to me.

1. Do you believe this is possible to any extent? If so, how far do you think we can go, and if not, why?

2. Would you be in favor of being able to alter humanity at the core of what makes us "human"?

3. How long do you think this will take for us to achieve?

4. What personal moral issues do you have (if any) with this movement?

5. If given the chance, would you alter your mind or body (abolitionist theme) to reduce pain? Or, simply augment?

6. If this became possible, how would it affect world religions?

7. Is this possible without severe class warfare, i.e., the rich advancing their state and the poor being left behind?

8. Can you think of any solutions?

I'm having trouble understanding this question. Does having augmentations done to oneself make you less "human"? Are amputees less human once they decide to get prosthetic limbs? How about when a person gets an artificial heart? I personally wouldn't consider them less human.

Would it affect them? Again, do you hear people complain when others get prosthetic limbs, artificial hearts or laser eye surgery?

I'm referring more to a point where the majority of your body could be replaced, whether by choice or out of necessity, or perhaps to 'mind uploading', transferring thoughts and memories into something that, by nature, isn't flesh. That is, the "post-human" in the TH definition.

Achieving things such as living for hundreds of years, regenerative DNA, altering your mental and physical state to superhuman heights, and escaping death would be quite contrary to what has been the norm for millennia.

Counter A.I. development will of course be a large and important part of future computing development.

Indeed, that's really the only solution.

Create a benign evolving A.I. to stop the development of a malign A.I.

This assumes that an evolving A.I. would quickly become near-omnipotent because it is capable of improving itself over and over again.

Seed AI is fascinating, although here's an interesting excerpt from the Wiki:

"However, they cannot then produce faster code and so this can only provide a very limited one step self-improvement. Existing optimizers can transform code into a functionally equivalent, more efficient form, but cannot identify the intent of an algorithm and rewrite it for more effective results. The optimized version of a given compiler may compile faster, but it cannot compile better. That is, an optimized version of a compiler will never spot new optimization tricks that earlier versions failed to see or innovate new ways of improving its own program[citation needed]."

In layman's terms, my understanding is that our evolving-AI concept, as it stands right now, can only improve within one single function, but can't "think" of new ways of programming itself outside of that realm. I think.

Honestly, I think tech like this will be a total disaster for the human race in the short term. We're going to reach this tech while still in capitalist mode, meaning resource allocation will be extremely 'chunky' and relegated to already rich assholes.

On the plus side, I'm pretty sure nothing truly world-changing (basically anything beyond replacing parts) will happen for well over 100 years. You might get an eye implant or good working hands, but you won't be able to digitize your memories and live forever. I'm all for fixing parts, but I'm against technological immortality.

I think she's stopped with these shenanigans now though.

When it comes to 'brain uploading', there is only one way I think I could be comfortable with it.

Let's say eventually we can externalize our memories. Like we have a home super-computer that is wirelessly connected to our brain. Let's take that another step and let's say that eventually we can create a 'virtual space' inside this computer, and then see/hear/smell etc from an avatar of ourselves living in this virtual space. So now we have our memories and a virtual representation of us living in a digital setting.

I can keep expanding on this model slowly until I basically have the ability to simultaneously 'exist' in a physical world and a digital world. My awareness is comfortably split between these two representations of myself, where my digital self is for example... living in a virtual world, maybe some video game - it doesn't matter. When I physically go to sleep, I'm able to continue to do things digitally, as my digital self has some autonomy.

I think that if, after years of living like this, my two selves had become nigh indistinguishable and my physical self were to die, I wouldn't have an existential crisis. It would be more like losing a limb than dying. And I could make a new limb (body) via cloning or the like. Heck, maybe I could have more than one body at this point.

Something like that would have to happen to keep me comfy with a brain upload.

Do programs or lines of code have permanence?

Because regardless of how these alternatives to instant, lethal digital uploads put you in digital space, they nonetheless put you into an existence where your 0s and 1s will by necessity be copied and erased whenever moved. Personally, I'd rather avoid entering a digital existence to begin with. I would like my self to be linked to a particular physical substrate, rather than being an interchangeable set of 0s and 1s.

That way, the element of permanence remains. You'd still be able to interact with digital universes and such through analogues of BTC-connections (only instead of an organic, fleshy brain, your silicate neural substrate will be linked to the internet), and while you might experience some lag that an entity existing within the digital universe wouldn't, you remain located in one spot, and in one piece.

Will read through the thread more thoroughly when I get home, so apologies if this has been discussed already.

Whenever we talk about copying the brain, everyone agrees that 'you' would die, and the other you is just a copy that will live on forever digitally.

But...

What about transferring you from the biological brain to a machine made brain that does not degrade? Will that still be you? I would say yes.

What do you think?