
Black Myth: Wukong PC benchmark tool available on Steam

- Thread starter LectureMaster


Mister Wolf

Member

Time to see what this rig is made of.

LectureMaster

Has Man Musk

Performance could be better, I guess, once I update the graphics card driver.

MikeM

Member

Performance could be better, I guess, once I update the graphics card driver.

DLSS perf or balanced?

LectureMaster

Has Man Musk

Does the raytracing option work properly? I don't get much difference in FPS from off to max.

It does change performance on my machine.

Off: avg 100 fps

Max: avg 76 fps

LectureMaster

Has Man Musk

DLSS perf or balanced?

See, the super resolution is set to 50. There is a slider in the settings where you can change the percentage of internal resolution: 50 means 50%, so 1080p.
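A quick sketch of the arithmetic behind "50 is 50%, so 1080p", assuming the super resolution slider scales each axis (width and height) separately. The game doesn't document this here, so treat it as an assumption; the function names are made up for illustration.

```python
from typing import Tuple


def internal_resolution(width: int, height: int, scale_percent: float) -> Tuple[int, int]:
    """Render resolution for a given resolution-scale percentage.

    Assumption: the percentage applies to each axis separately,
    so 50% of 3840x2160 becomes 1920x1080.
    """
    factor = scale_percent / 100.0
    return round(width * factor), round(height * factor)


def rendered_pixel_fraction(scale_percent: float) -> float:
    """Fraction of output pixels rendered natively, since both axes shrink."""
    factor = scale_percent / 100.0
    return factor * factor


if __name__ == "__main__":
    # Scale values mentioned in this thread, applied to a 4K output.
    for pct in (100, 88, 67, 50):
        w, h = internal_resolution(3840, 2160, pct)
        share = rendered_pixel_fraction(pct)
        print(f"{pct}% of 4K -> {w}x{h} ({share:.0%} of the pixels)")
```

Under that assumption, a setting of 50 renders only a quarter of the output pixels, so it's a much bigger cut than the number suggests.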

SpearHea.:D

Member

Getting 64 fps avg with everything set to max. 2K DLSS Quality. RTX set to very high. 4070, R5 7600, 32 GB ram.

Magic Carpet

Gold Member

8GB benchmark!

Edit-RTX 4070 Intel 13700F

Using the same settings as LectureMaster above

67FPS average

35FPS low

I saw lots of artifacts in the sky that looked like Vsync strobing. Driver issue?

edit edit-I guess China now has all my PC's info.


FoxMcChief

Gold Member

edit edit-I guess China now has all my PC's info.

I'm sure they had it already.

Magic Carpet

Gold Member

Does the raytracing option work properly? I don't get much difference in FPS from off to max.

Yeah, I couldn't tell a difference in performance either when turning ray tracing on or off.

SlimySnake

Flashless at the Golden Globes

Yeah, I couldn't tell a difference in performance either when turning ray tracing on or off.

You need to restart the game.

My FPS dropped from an average of 55 to 20 when I turned RT on at Very High.

RTX 3080. Even Cyberpunk's path tracing doesn't hit that hard.

VeganElduderino

Member

4070 Ti, everything on the highest settings, ray tracing on High at 4K: avg 74 fps.

HRK69

Gold Member


OverHeat

« generous god »

Damn sorry guys

HRK69

Gold Member

Damn sorry guys

It's all right

Let's hope the game is good. No reason to brag if the game turns out to be shite.

You still got a 4090 though so you've won either way


StreetsofBeige

Gold Member

Did the test on whatever default settings it used. Got a laptop.

Agent_4Seven

Tears of Nintendo

Well, here are my results. Resizable BAR enabled, Windows 11 memory protection and other needless shit turned off. Locked FPS to 30 via Riva, turned AA all the way down to low cuz it's completely pointless since DLSS is doing all the work.

I honestly can't believe that the game runs so well (a perfectly straight line with zero dips and stutters whatsoever) with this kind of visuals, only eating up 6.5 GB of VRAM at 88% of 4K with 99% of settings at max and without RT (with my GPU at 4K, even with DLSS, there's no point in even bothering cuz RT is optimized for Frame Gen and much better CPUs). I mean, I consider this level of performance on my rig, with my CPU and GPU, at max settings and 88% of 4K to be great performance, so don't at me.

But somehow I don't think this is indicative of real-world performance during actual gameplay with lots of alpha effects and particles on screen, so based on that I still won't be pre-ordering the game and will wait for PC reviews and impressions.


TheStam

Member

Maxed out at 3440x1440 doesn't seem at all feasible on my rig (13700K + 4080) after restarting the application. I got in the 40s with Very High ray tracing and DLAA + Frame Gen. This is pre-driver and pre-release, though.

There's something weird with the post-processing. The image doesn't look clean even maxed out on DLAA; there are a lot of artifacts around branches, for instance. It's both smudgy and oversharpened at the same time. The game is supposed to also have Ray Reconstruction, even though I didn't see it in the settings; maybe that is why there is some smudginess and artifacting, like in Cyberpunk and Alan Wake.

EDIT: To be a bit more specific, I got 46 fps with DLAA and Ray Tracing: Very High + Frame Gen. With the same settings and DLSS Quality I got 78 fps, which is a huge jump.
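To put "a huge jump" in numbers, here's a small sketch that converts reported averages into percentage change and average frame time. The helper names are hypothetical, and the inputs are only the single-run averages posted in this thread, not new measurements. Frame time is included because it shows how many milliseconds per frame a setting actually costs.

```python
def frame_time_ms(fps: float) -> float:
    """Average frame time in milliseconds for a given average frame rate."""
    return 1000.0 / fps


def percent_change(before: float, after: float) -> float:
    """Relative change from `before` to `after`, in percent."""
    return (after - before) / before * 100.0


if __name__ == "__main__":
    # Averages as posted in this thread (one benchmark run each).
    comparisons = {
        "DLAA -> DLSS Quality (RT Very High + FG, 4080)": (46, 78),
        "RT off -> RT max (LectureMaster)": (100, 76),
        "RT off -> RT Very High (3080)": (55, 20),
    }
    for label, (before, after) in comparisons.items():
        print(
            f"{label}: {before} -> {after} fps "
            f"({percent_change(before, after):+.1f}%), "
            f"{frame_time_ms(before):.1f} ms -> {frame_time_ms(after):.1f} ms"
        )
```

By this arithmetic, going from DLAA to DLSS Quality is roughly a 70% gain in average fps (about 21.7 ms down to 12.8 ms per frame).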


Does the raytracing option work properly? I don't get much difference in FPS from off to max.

Is it because even RT off is using Lumen? You're probably just changing from Lumen to RTXDI; the performance difference is smaller going from Lumen to RT than from raster to RT.

Bavarian_Sloth

Member

If the benchmark roughly reflects real game performance, I will probably play at 4K DLSS Quality (67%), FG off, additional RT effects off and details on High.

That should be enough for a constant 60 fps and deliver good image quality thanks to DLSS "Quality" + Details on High.

I'll probably leave FG out due to the nature of the gameplay.


tommib

Banned

Ok I'm not a pc gamer (anymore) but there's barely anything happening in the benchmark. No destruction physics, combat, alpha effects, particles, fast cuts in the camera world placement… it's a camera flowing around a river. Is this your typical pc benchmark? How is this stretching hardware at any level? How would the results be representative of your frame rate during gameplay?


Mister Wolf

Member

Is it because even RT off is using Lumen? You're probably just changing from Lumen to RTXDI; the performance difference is smaller going from Lumen to RT than from raster to RT.

I'm looking forward to seeing the difference between maxed out Lumen vs Nvidia's per pixel RTGI in the DF video. As it stands now I'm satisfied with just using maxed Lumen for lighting and reflections.

winjer

Member

Ok I'm not a pc gamer (anymore) but there's barely anything happening in the benchmark. No destruction physics, combat, alpha effects, particles, fast cuts in the camera world placement… it's a camera flowing around a river. Is this your typical pc benchmark? How is this stretching hardware at any level? How would the results be representative of your frame rate during gameplay?

No, it's not how PC benchmarks usually work.

This Black Myth: Wukong benchmark tool is just bad at what it's supposed to do.

For example, this is the Lobby benchmark from 3DMark 2001, based on the Max Payne engine.

As you can see, even 23 years ago, PC benchmarks had tons of action, physics, alpha effects, animations, etc.

SolidQ

Member

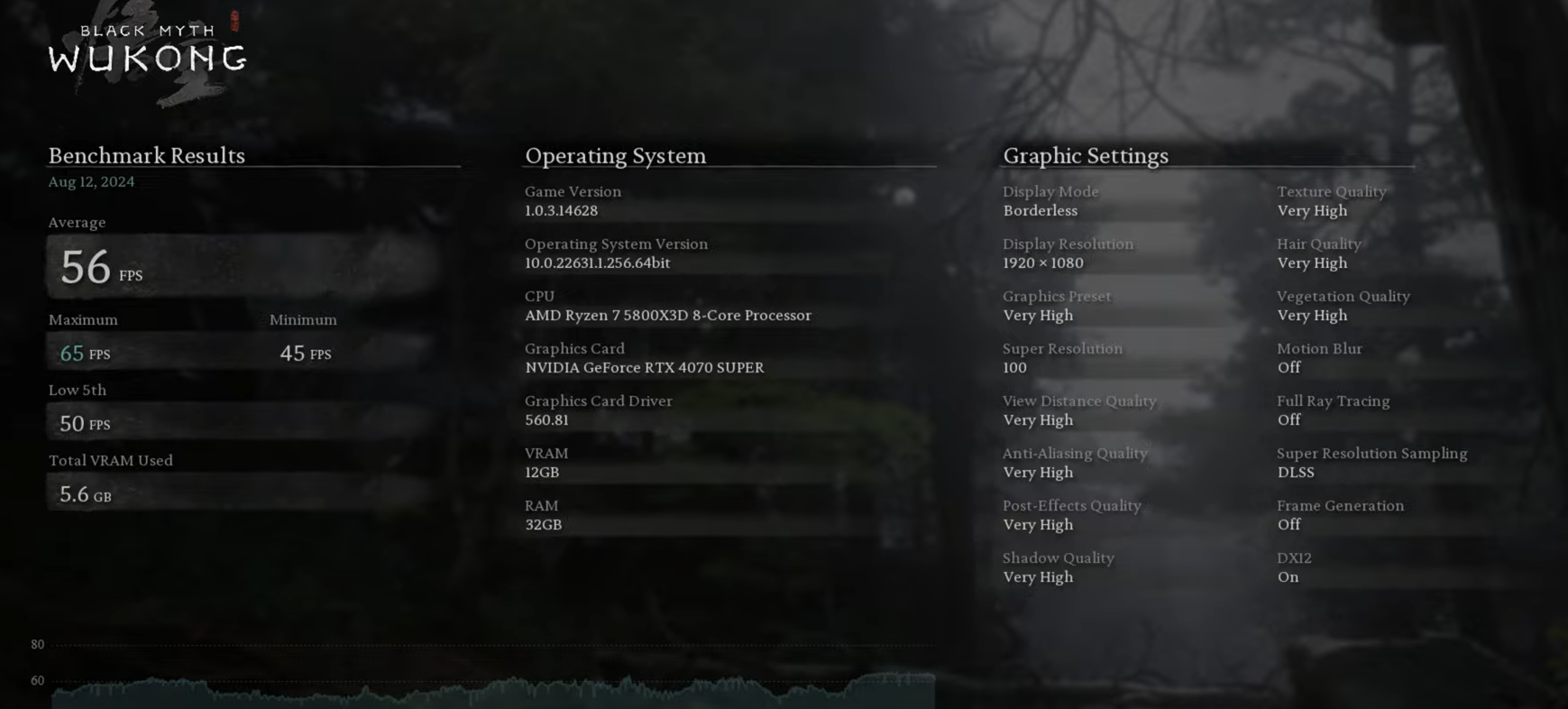

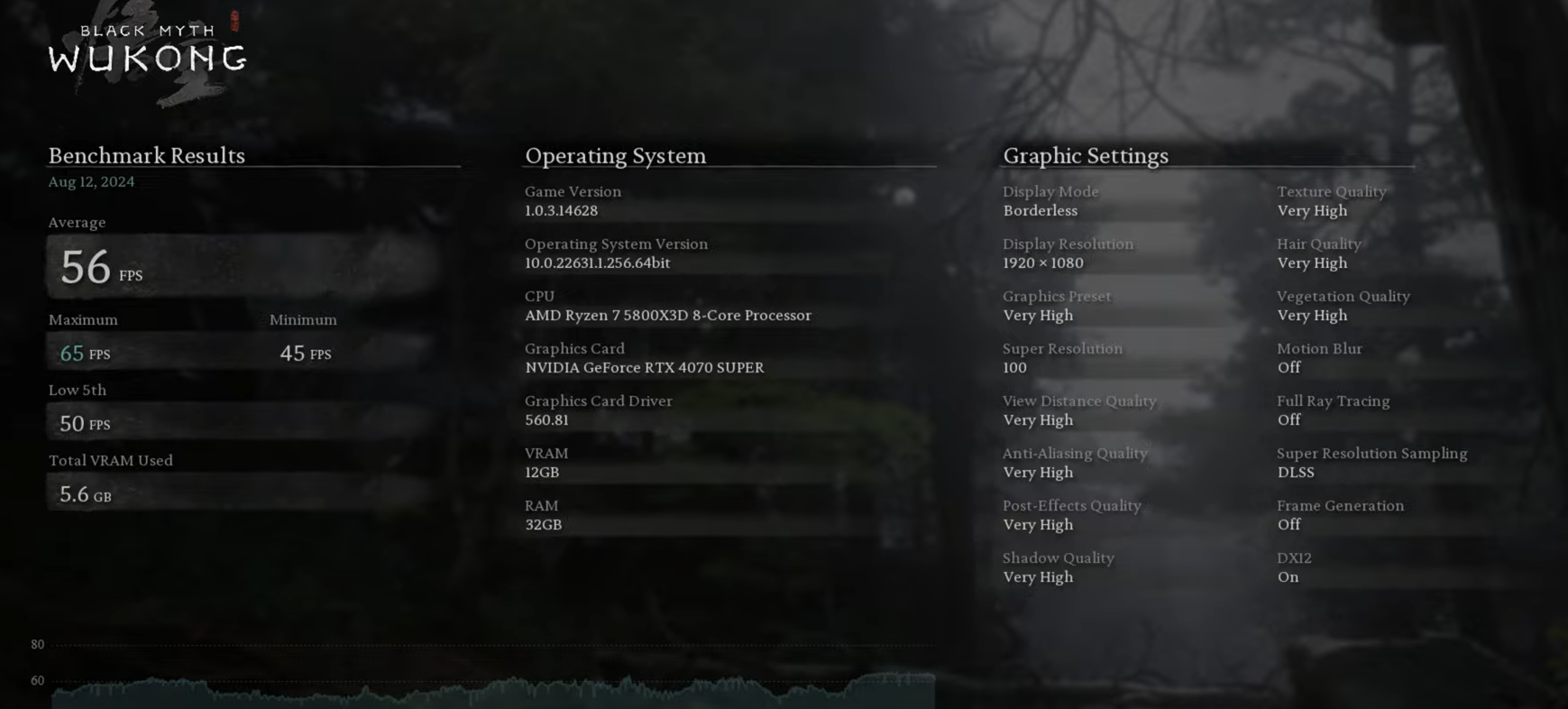

No RT, max settings, 100% resolution

Seems like an unoptimized game. Found a video with a 5800X3D + 4070S at 1080p: 56 fps without RT, also Very High, not Cinematic.


winjer

Member

seems unoptimized game. found video with 5800X3D + 4070S at 1080p 56fps, without RT

What resolution scale?

I'm using 100%, so it's native.

SolidQ

Member

I'm using 100%, so it's native.

That's from a video.

Looks like the same situation as the Alone in the Dark demo. Try High settings instead of Cinematic; you should get better image quality and more fps.


Skifi28

Member

What resolution scale?

I'm using 100%, so it's native.

Seems terrible for native 1440p with no RT, if I'm honest.

winjer

Member

Seems terrible for native 1440p with no RT if I'm honest.

It is, really bad. Upscaling helps, but not a lot.

And image quality is bad. It has a grainy and oversharpened look, and there's no option to disable film grain or sharpening.

And with max RT, it goes to 7 fps. Mind you, this is an RDNA2 card.

Bojji

Member

Show one with frame gen off, and we will see the difference between high-end Ampere and Ada (RT is optimized for Ada in this game, according to Nvidia).

Same settings but without frame gen obviously:

Everything on high (textures on cinematic) and RT medium:

Everything on high (textures on cinematic) and RT OFF, finally playable!

Bavarian_Sloth

Member

This benchmark only seems to torture the GPU, I think (Lumen + Nanite + additional RT features (translucency, ...)).

winjer

Member

that from video

Very high settings and 1080p.

I'm using cinematic settings and 1440p. That explains the difference.

SolidQ

Member

Image quality is bad. It has a grainy and oversharpened look, and there's no option to disable film grain or sharpening.

In Alone in the Dark, it was fixed by switching from Cinematic to High.

This benchmark only seems to torture the GPU, I think (Lumen + Nanite + additional RT features (translucency, ...)).

The only thing I can think of is that the CPU isn't taxed that much in this game anyway. I haven't seen anything in trailers or gameplay that would be taxing for the CPU, hence why the benchmark is just a flythrough.

Topher

Identifies as young

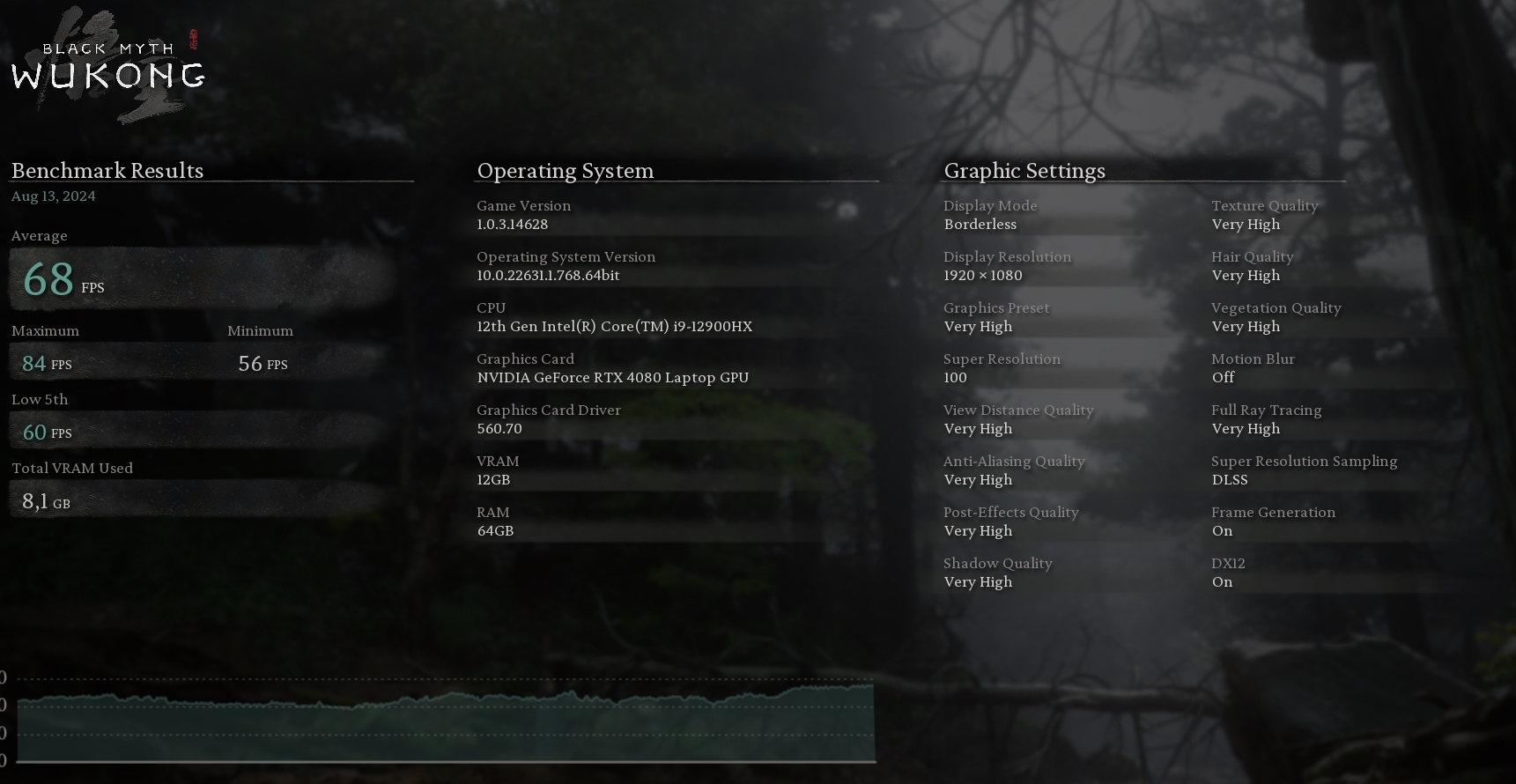

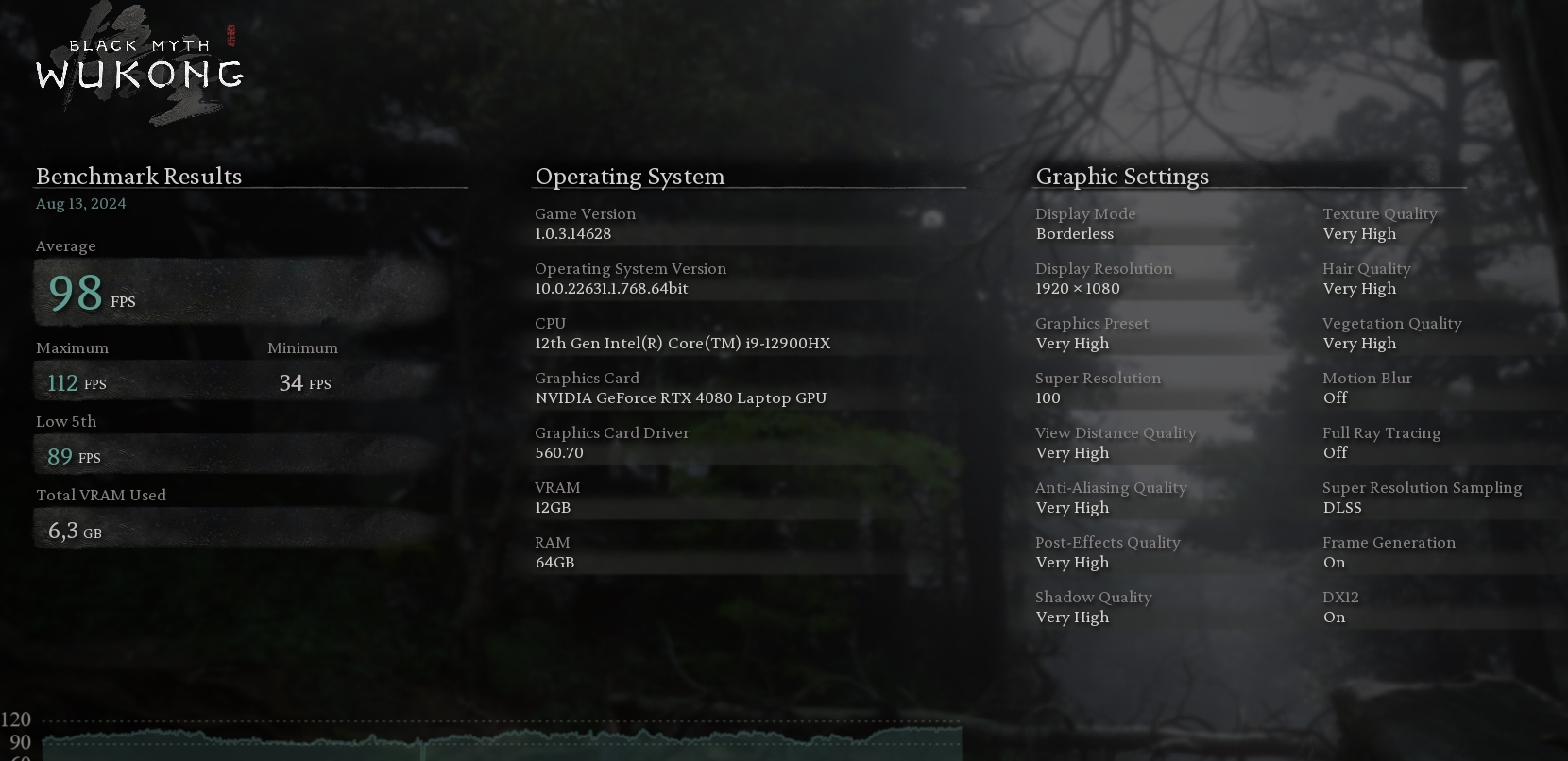

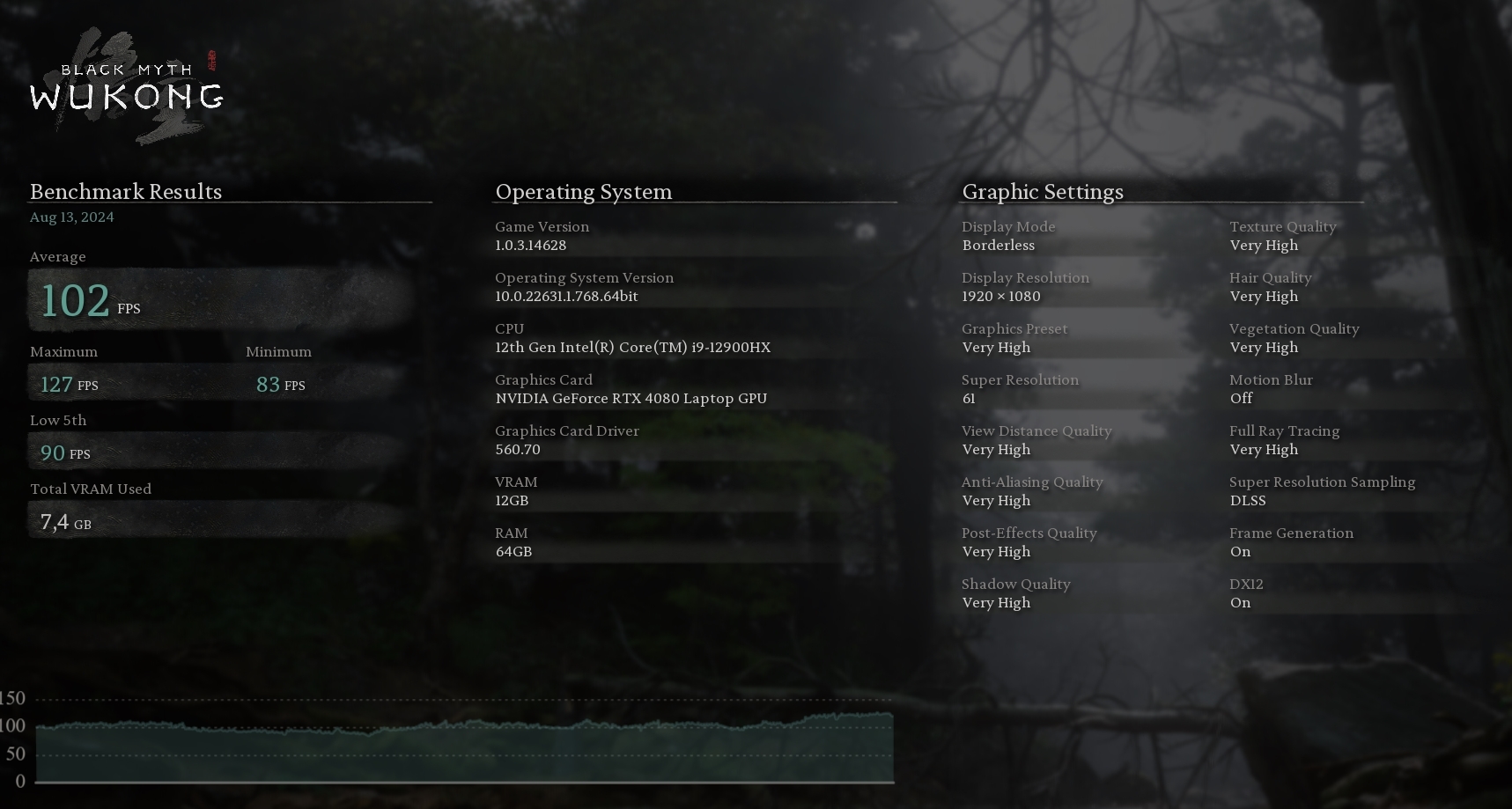

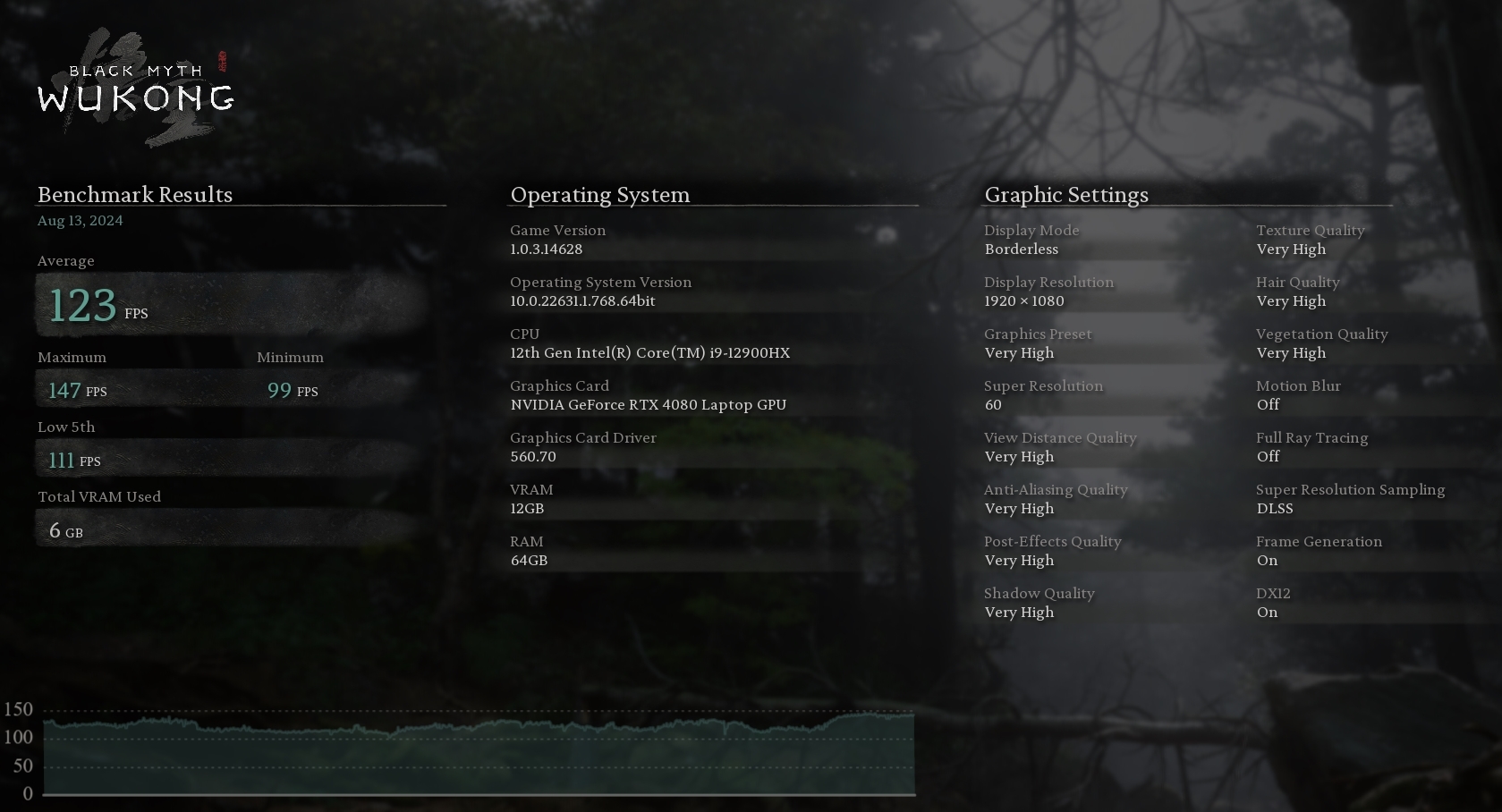

Three runs at resolution scale 100, 88 and 50.

Edit: Added RT Very High at 50. Anyone else get some major stuttering with RT on?


tommib

Banned

No, it's not how PC benchmarks usually work.

This Black Myth: Wukong benchmark tool is just bad at what it's supposed to do.

For example, this is the Lobby benchmark from 3DMark 2001, based on the Max Payne engine.

As you can see, even 23 years ago, PC benchmarks had tons of action, physics, alpha effects, animations, etc.

Oh man this brings back memories. I ran this so many times just because it was the coolest shit.

And that's indeed what I would call a benchmark tool.