GametimeUK

Member

Whatever they ask, as long as my PC is more powerful than my friends' consoles, because that's what gives my opinion more credibility during gaming debates.

> It's not that far off

"Rasterization" should not matter to anyone buying a new GPU in the year 2025.

> They are direct competitors.

Are they?

Are they?

See above about why AMD has priced them as they did, which is a nice change from the previous two generations, where they basically thought that no one cares about RT for some reason.

The cards are competitive precisely because they come with a discount in comparison to Nvidia this time. Thank god that AMD has figured this one out on the third attempt.

> Depends on how superior they are. Right now it seems like AMD is catching up with FSR4, so I would not pay a huge tax.

This.

> I paid £1800 over the retail price for my 5090 which is £1940
>
> totally worth it. this card is amazing. trying to get a 9950X3D to let the 5090 go even further

That's $4,853 (US) for a graphics card.

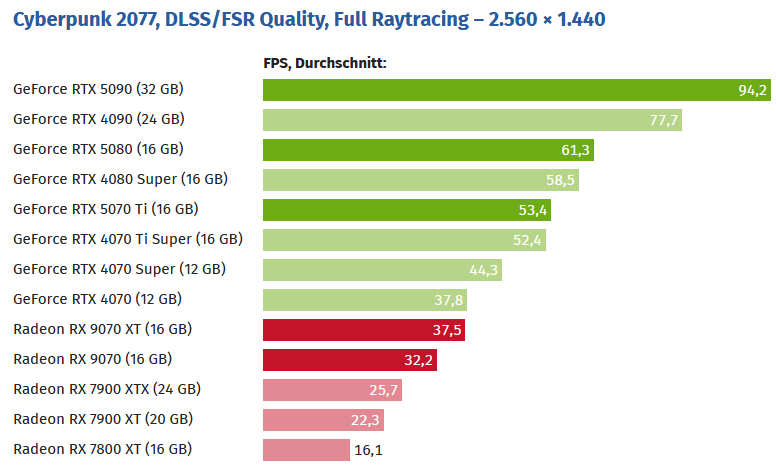

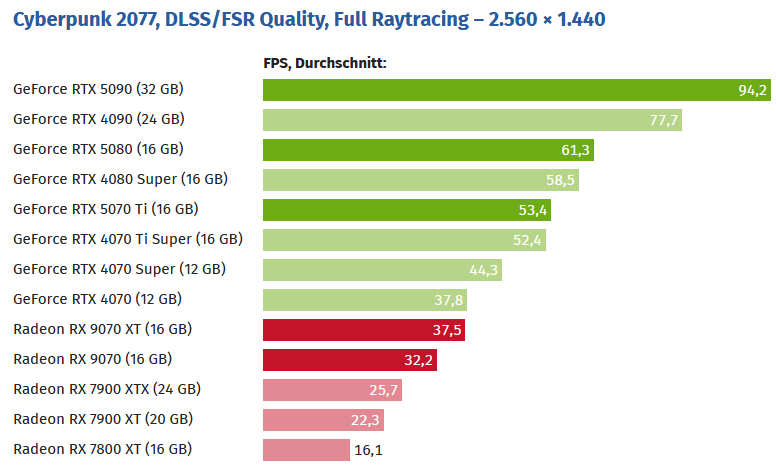

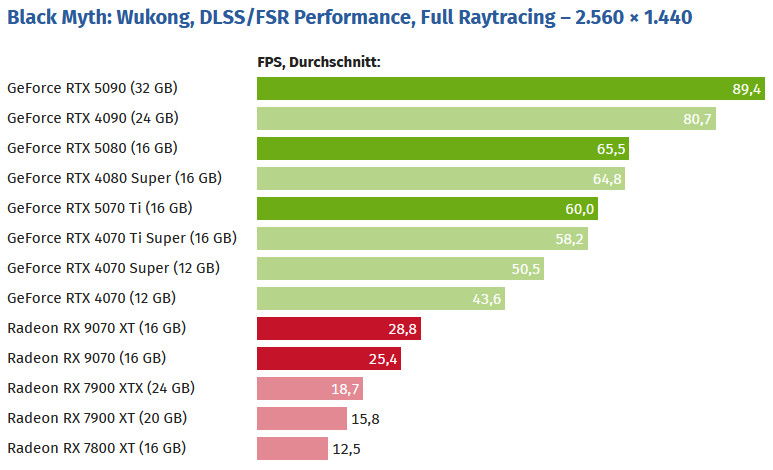

> Yes they are.
>
> It wins some it loses some but on average they are in the same class power wise.
>
> Price wise the 9070XT wins outright.
>
> Games with excessive RT it loses, no one is denying that, but as I said on average because no one ONLY plays heavy RT games and most games aren't RT heavy, they are ~5% apart at 1440p.
>
> With Unreal Engine being the most popular engine going forward they are basically the same so yes they are direct competitors:

Lumen does not use RT cores. That's why the 9070XT is so competitive. The same game, but with hardware RT.

Moore's Law Is Dead dropped a truth bomb a while ago:

People want "competition" against Nvidia not because they want to buy AMD or Intel, but because they want to buy Nvidia at a lower price.

Jensen has everyone by the balls.

> That's $4,853 (US) for a graphics card.

Don't worry about it. PC gamers enjoy paying more to Nvidia. Ever since the GTX 970 I've never managed to keep an Nvidia GPU. Every time I bought one I managed to sell it without even trying. I've felt guilty selling them for far more than I bought them for. But not anymore, as I've come to the realisation that PC gamers perversely enjoy paying Nvidia's excessive prices. Otherwise they'd stop buying them to send a message to Nvidia. I've managed to get a 2080 Ti quite cheap second hand for my needs. But selling those cards has basically paid for my consoles and subs and my 2080 Ti.

> 21fps 9070XT vs 166fps 5070ti

Comparing fps with multi framegen on with one and off with the other is pretty silly no? Why are they using FSR3 anyway?

> 21fps 9070XT vs 166fps 5070ti

How is 13.9GB VRAM usage and 166fps possible with maximum settings and full path-tracing on a 5070ti when I climbed over 16GB on a 4080 Super and ended up with 10-20fps or lower?

> With Unreal Engine being the most popular engine going forward they are basically the same so yes they are direct competitors:

This isn't true at all.

> Fuck all

This is the only correct answer.

> Comparing fps with multi framegen on with one and off with the other is pretty silly no? Why are they using FSR3 anyway?

I also showed results without FG (17fps vs 53fps). As for the gameplay screenshot, both cards used FG in that test, but the 9070XT only supports FGx2 (at least on paper, because FSR FG is crap and I consider it unusable).

> How is 13.9GB VRAM usage and 166fps possible with maximum settings and full path-tracing on a 5070ti when I climbed over 16GB on a 4080 Super and ended up with 10-20fps or lower?
>
> 1080p?
>
> A patch has lowered memory consumption?

It's 1440p. At 4K, cards with 16GB VRAM need to reduce the texture streaming pool from maximum to very high (texture quality still looks the same), otherwise performance will drop to single digits.

> I also showed results without FG (17fps vs 53fps). As for the gameplay screenshot, both cards used FG in that test, but the 9070XT only supports FGx2 (at least on paper, because FSR FG is crap and I consider it unusable).

MFG is what's crap. One is using MFG and the other is not. That comparison of fps is meaningless.

> MFG is what's crap. One is using MFG and the other is not. That comparison of fps is meaningless.

YouTuber Jansn Benchmarks tested the full potential of both cards in this particular test. FGx4 is perfectly usable on Nvidia cards because it renders motion smoothly without judder (flip metering running on tensor cores ensures perfect frame pacing) and with minimal input latency, while FGx2 on the AMD card cannot render motion smoothly (even at a 60fps base framerate FSR FG has judder) and has an input lag problem.

Nvidia lied when they compared the 5070's performance to the 4090, but MFG is still an awesome feature to have. Being able to boost the framerate into 200fps territory certainly improves the experience.

Even the biggest AMD die-hard fan on all of YT likes MFG (Frogboy even received a free 9070XT signed by the AMD CEO), although in this particular video he should cap his framerate to 237fps to avoid judder with FGx4 on his 240Hz monitor.

> I can’t use FG. Anything that adds latency is useless.
>
> I don’t think anyone disputes that Nvidia reigns supreme in heavy RT, but price differences can reflect that. My Asus TUF 9070xt cost $1,059 CAD ($735 US) but the equivalent 5070ti in TUF form is 37% more expensive at $1,449.

At the moment, the price difference between the 9070XT and 5070ti in my country is $175 USD (currency converted). A few days ago, the price difference was only $100, but now 9070XT cards are getting cheaper and cheaper. I know that $175 is a lot for some people, but from my perspective, paying an additional $175 just to have a better gaming experience for the next couple of years is worth it. With the Nvidia card, I would not have to worry about whether games that use neural rendering features will work properly. Performance in PT will also be acceptable. My card has comparable performance to the 5070ti and I get 110-170fps in PT games at 1440p DLSSQ + FGx2, and that's a playable experience in my book. On an AMD card I would need to turn PT off.

now it's as good

> I have a 5090, I don't need a video review to tell me what it does or tell me it's usable. Using MFG in a framerate comparison is meaningless though, those are not actual frames and not indicative of performance. It would be like enabling upscaling then comparing to native res. Saying one is 1080p the other 4k.

It's true that real frames and AI-generated frames are not the same, therefore reviewers should focus on comparing raw performance, but DLSS FG certainly improves motion smoothness and clarity. That's not a meaningless difference.

> Ok AMD's new GPU won't do as good of a job keeping my house warm by catching on fire but at playing games it will be fine.

Unless you activate RT....