Affected games:

Monster Madness: Battle for Suburbia

Tom Clancy’s Ghost Recon Advanced Warfighter 2

Crazy Machines 2

Unreal Tournament 3

Warmonger: Operation Downtown Destruction

Hot Dance Party

QQ Dance

Hot Dance Party II

Sacred 2: Fallen Angel

Cryostasis: Sleep of Reason

Mirror’s Edge

Armageddon Riders

Darkest of Days

Batman: Arkham Asylum

Sacred 2: Ice & Blood

Shattered Horizon

Star Trek DAC

Metro 2033

Dark Void

Blur

Mafia II

Hydrophobia: Prophecy

Jianxia 3

Alice: Madness Returns

MStar

Batman: Arkham City

7554

Depth Hunter

Deep Black

Gas Guzzlers: Combat Carnage

The Secret World

Continent of the Ninth (C9)

Borderlands 2

Passion Leads Army

QQ Dance 2

Star Trek

Mars: War Logs

Metro: Last Light

Rise of the Triad

The Bureau: XCOM Declassified

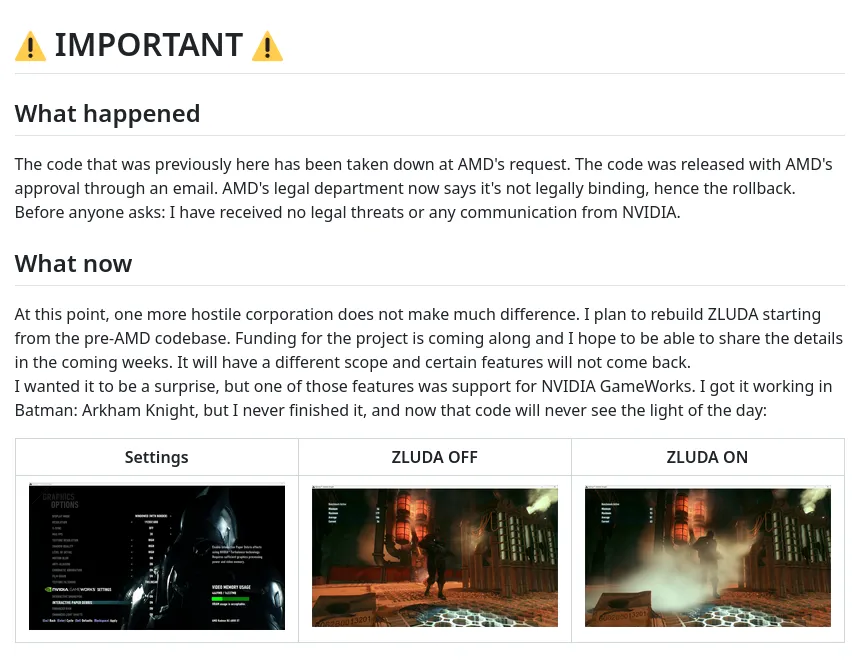

Batman: Arkham Origins

Assassin’s Creed IV: Black Flag

Using only the CPU has a huge impact on performance.

It has been about two years since Nvidia open-sourced its kernel module, and this is the model going forward: on Linux, the RTX 50 series is supported only by the open module.

With the R515 driver, NVIDIA released a set of Linux GPU kernel modules in May 2022 as open source with dual GPL and MIT licensing. The initial release targeted datacenter compute GPUs…

developer.nvidia.com

Part of what used to be proprietary has been moved into the GPU firmware. The userspace driver remains proprietary, but with the kernel module now open, Red Hat is working on an open-source userspace Vulkan driver called NVK.

www.phoronix.com