SolidQ

Member

> they just care about other features like raw performance

Same as me, I only care about pure price/performance and don't care about RT or upscalers yet.

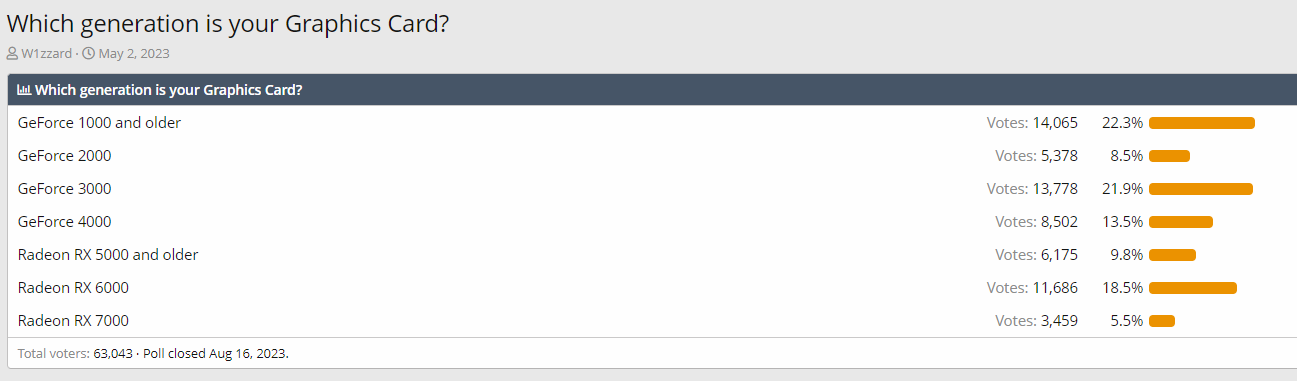

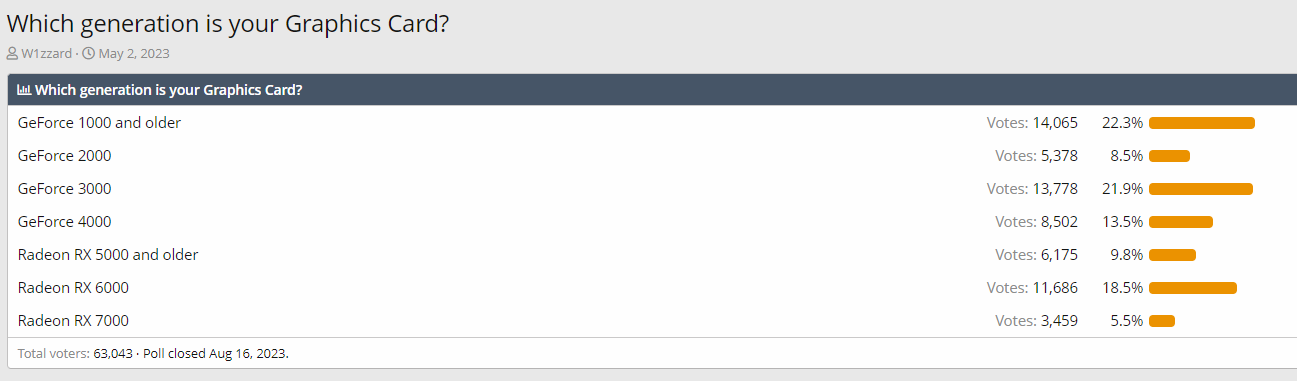

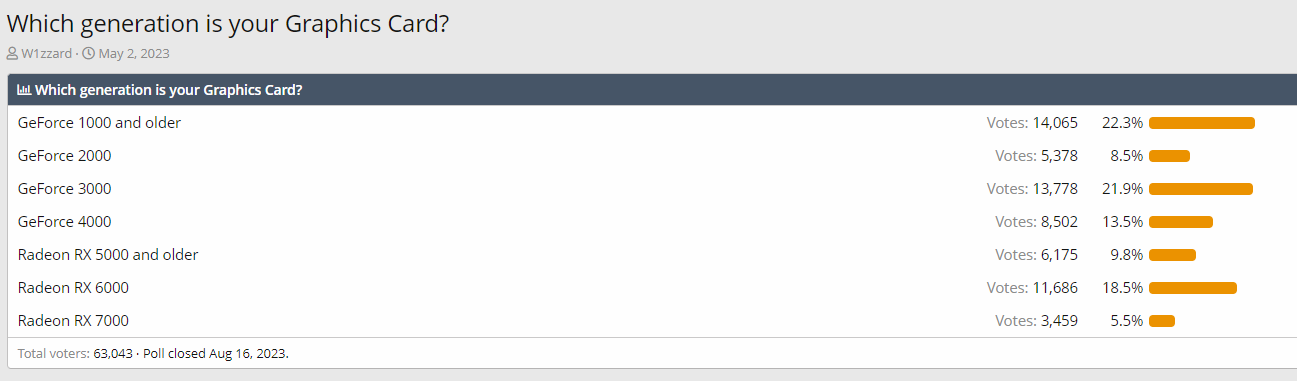

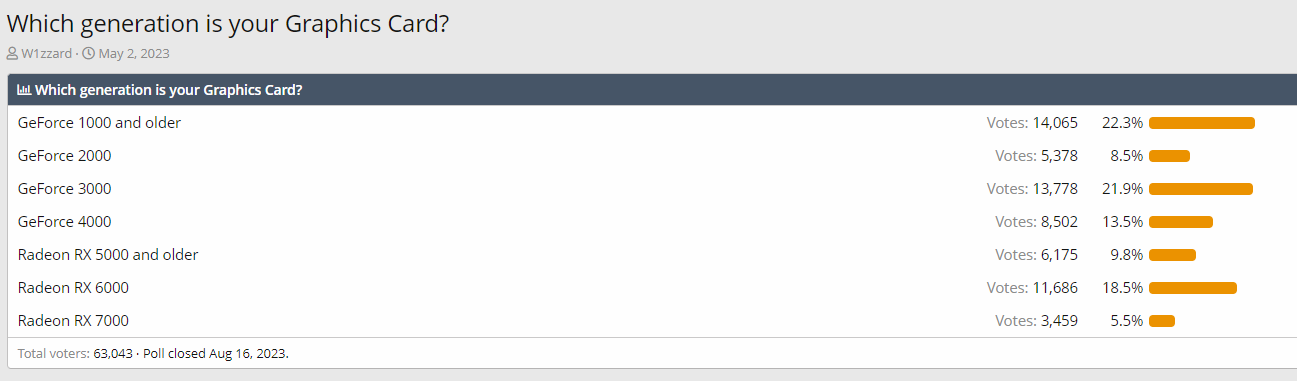

That's an interesting poll, with 60k+ voters.

> they just care about other features like raw performance

Same as me, I only care about pure price/performance and don't care about RT or upscalers yet.

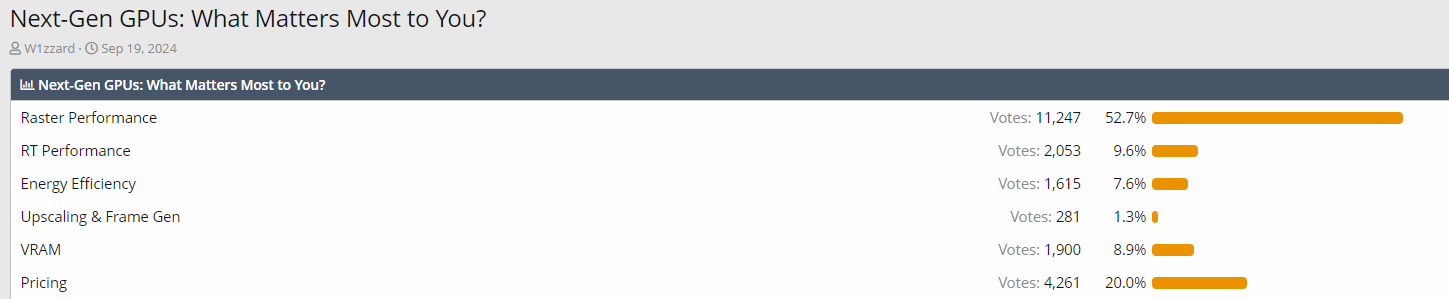

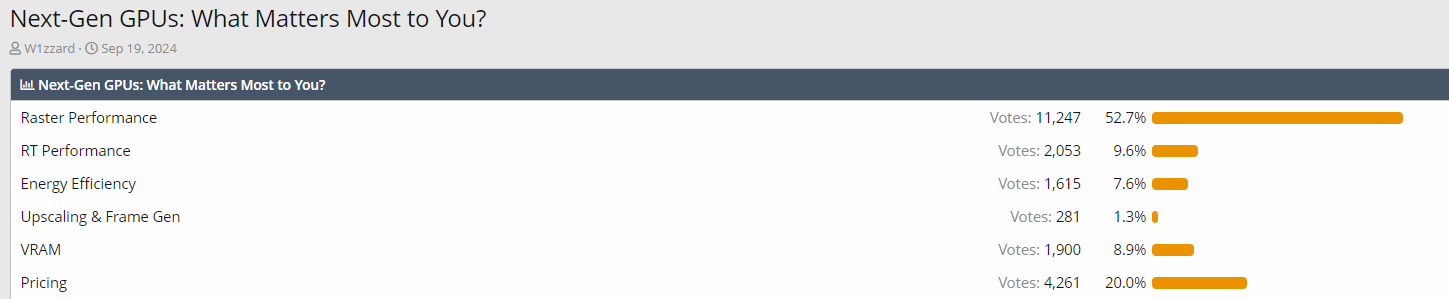

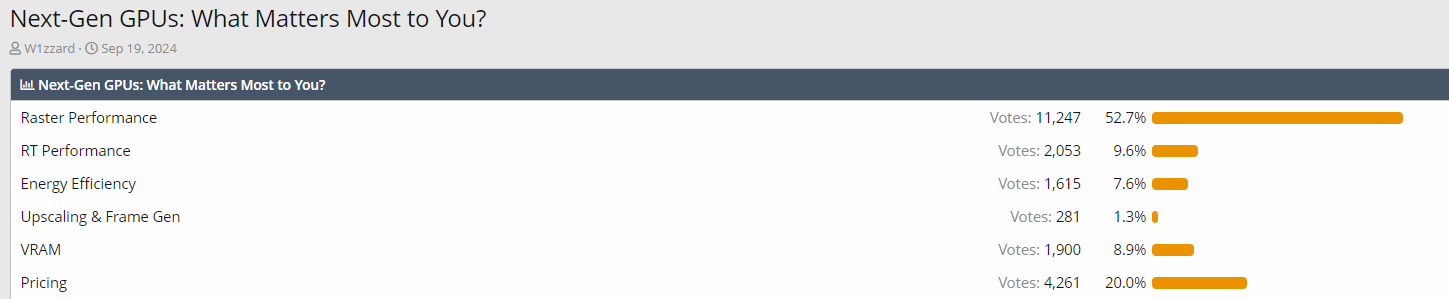

> That's an interesting poll from TPU. Only 1.3% care about upscaling.

That's copium, DLSS is huge. I'm considering getting a 5080 next gen, even though I generally really dislike Nvidia as a corporation, simply because AMD hasn't shown it can compete. If it had 24GB of VRAM I'd be there day one.

> because AMD hasn't shown it can compete

That's for next time, with RDNA5/UDNA.

> That's copium, DLSS is huge. I'm considering getting a 5080 next gen, even though I generally really dislike Nvidia as a corporation, simply because AMD hasn't shown it can compete. If it had 24GB of VRAM I'd be there day one.

It looks like 16GB on a 256-bit interface for the 5080, unfortunately, which kind of sucks.

> Same as me, I only care about pure price/performance and don't care about RT or upscalers yet.
>
> That's an interesting poll, with 60k+ voters.

You'll care when AMD finally comes out with decent RT performance and an ML upscaler.

> From what I've played of PC gaming, 4GB of RAM did a lot. I think 8 or 16GB of RAM is safe.

8GB of VRAM was good for a while, but it's no longer enough even at 1080p. Get 16GB of VRAM just to be safe, even if you're on 1080p. Lots of newer games are pushing 8GB of VRAM or more now.

> You'll care when AMD finally comes out with decent RT performance and an ML upscaler.

Nope, I will care about RT when the industry moves at least 50% of games to requiring RT (like Metro EE), but for now 99%+ of games are still raster.

> Same as me, I only care about pure price/performance and don't care about RT or upscalers yet.
>
> That's an interesting poll, with 60k+ voters.

The 1000 series was the best, it lasted the longest out of all the gens. Started with GTX 260 -> GTX 670 -> 970 -> 1070 and haven't upgraded since. Next upgrade is going to be 5060, was going to go for the 70 tier but with how overpriced things are now, I'm aiming for 5060 instead of 5070.

> Next upgrade is going to be 5060, was going to go for the 70 tier but with how overpriced things are now, I'm aiming for 5060 instead of 5070.

Same, but my upgrade is going to be RDNA4 due to VRAM and price.

That's an interesting poll from TPU. Only 1.3% care about upscaling.

PCMR have gotten soft.

In 2016: "Hahaha, look at you console peasants with your chequerboard upscaling, we in PCMR can run native resolutions and high framerates"

In 2024: "Actually guys, Image upscaling and frame generation is important for me and my $1200 GPU"

> PCMR have gotten soft.
>
> In 2016: "Hahaha, look at you console peasants with your chequerboard upscaling, we in PCMR can run native resolutions and high framerates"
>
> In 2024: "Actually guys, Image upscaling and frame generation is important for me and my $1200 GPU"

Yet, DLSS1 got absolutely shat on and nobody wanted it. DLSS now is almost as good as native while being 30% more performant when using quality. PC gamers are rightfully embracing it, but you want them to be morons by continuing to eschew upscaling because they didn't care about checkerboarding which was nowhere near as good as DLSS?

> Yet, DLSS1 got absolutely shat on and nobody wanted it. DLSS now is almost as good as native while being 30% more performant when using quality. PC gamers are rightfully embracing it, but you want them to be morons by continuing to eschew upscaling because they didn't care about checkerboarding which was nowhere near as good as DLSS?
>
> Yeah, stupid post. "Don't use DLSS PCMR. Stick to native even though DLSS is almost as good but performs much better!"

I think the danger here is devs being lazy and using it as a crutch, we already have default-on FSR in some games that make them look like utter crap. AMD needs to get its shit together already, I can't believe they waited 2 years between FSR updates and it's still mostly trash.

> I think the danger here is devs being lazy and using it as a crutch, we already have default-on FSR in some games that make them look like utter crap. AMD needs to get its shit together already, I can't believe they waited 2 years between FSR updates and it's still mostly trash.

Seems to be already happening.

Yet, DLSS1 got absolutely shat on and nobody wanted it. DLSS now is almost as good as native while being 30% more performant when using quality.

With it drawing so much power, won't it wear it out faster? It can't be easy to crank through that much juice?

> Yet, DLSS1 got absolutely shat on and nobody wanted it. DLSS now is almost as good as native while being 30% more performant when using quality. PC gamers are rightfully embracing it, but you want them to be morons by continuing to eschew upscaling because they didn't care about checkerboarding which was nowhere near as good as DLSS?
>
> Yeah, stupid post. "Don't use DLSS PCMR. Stick to native even though DLSS is almost as good but performs much better!"

Yeah, it's still funny how goalposts change.

> Yeah, it's still funny how goalposts change.
>
> Consoles were shit on before for using upscalers to run at higher resolutions than optimal with good enough performance. Nowadays, PC gamers are doing the same thing. It's just funny how things swing around.
>
> I'm not saying not to use DLSS. Use it if you want. It shouldn't be needed though.

I agree it shouldn't be needed, but when GPUs come out every 2 years with massive price increases, it's tough. And yeah, it's by design.

> Yeah, it's still funny how goalposts change.
>
> Consoles were shit on before for using upscalers to run at higher resolutions than optimal with good enough performance. Nowadays, PC gamers are doing the same thing. It's just funny how things swing around.

They haven't, and DLSS1 being ridiculed disproves the false narrative you're peddling. The goalposts haven't been shifted. No one wanted DLSS1 because it sucked. People want DLSS2-3 because they're good. You wanted people to embrace garbage tech?

> That's an interesting poll from TPU. Only 1.3% care about upscaling.

Eh, no. The voters could only vote for the one thing they care most about. So unless voters were able to pick multiple items, it doesn't mean they don't care about upscaling.

> PCMR have gotten soft.
>
> In 2016: "Hahaha, look at you console peasants with your chequerboard upscaling, we in PCMR can run native resolutions and high framerates"
>
> In 2024: "Actually guys, Image upscaling and frame generation is important for me and my $1200 GPU"

That's not what happened at all. You have a disingenuous or faulty memory. Obviously, AI upscaling is relatively mature technology now.

> Can someone tell me how 5080 can be 10% faster than 4090? 4090 has 60% more cores and is better in every aspect:
>
> Even less power draw so 4GHz clock (lol) is out of the picture.

Driver nerf for the 4090 is coming before these arrive.

I'm looking forward to what it will cost.

5080 still gonna be 16GB for sure.

> Same as me, I only care about pure price/performance and don't care about RT or upscalers yet.
>
> That's an interesting poll, with 60k+ voters.

As of today, there are more than 100 games with RT support, and new RT games are being added every week. People who buy a new GPU right now should think about RT performance, because what's the point of buying a new PC for old games when even an old, cheap GPU will run them? Some games no longer even support raster, and Nvidia cards are crushing AMD when it comes to RT performance.

> yup. people forget that DLSS1 was worse than FSR2. it was absolutely horrifically bad.
>
> PC players will brutally shit on your tech if it sucks, and embrace it if it works.
>
> DLSS 2.0+ is almost literally free performance at this point. Having to only render 50-75% of the pixels while getting close to or better than native quality is just not something anyone should ignore.
>
> it is of course dependent on the game. in extreme cases like Doom Eternal you can get image quality that beats the 4k image of the console versions while rendering only 25% of the pixels... like... how crazy is that?
>
> so why shouldn't the "PC master race" jump on it?
>
> "I can render 25% of your pixels and still outclass you!" what a flex is that!? work smarter not harder.

I like to use both DLSS Balanced and DLDSR at the same time. The image quality always looks better than native TAA or DLAA, because DSR is basically a downsampled image, but without the performance penalty thanks to DLSS Balanced upscaling. If the game has a good DLSS implementation, even DLSS Ultra Performance + DLDSR looks better than native TAA, and I get 2x better performance in such games.

It's not meant for people who need to know this...
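For reference, the pixel percentages thrown around above (such as "rendering only 25% of the pixels") come from simple arithmetic: an upscaler renders at a fraction of the output resolution on each axis, so the share of pixels rendered is that fraction squared. Here is a rough Python sketch of that. The per-axis scale factors are the commonly cited values for the DLSS presets and are assumptions rather than anything stated in this thread, the function names are made up for illustration, and individual games can use different ratios.

```python
# Per-axis render scale for each preset. These are commonly cited values,
# treated here as assumptions; a given game may use different ratios.
ASSUMED_AXIS_SCALE = {
    "quality": 2 / 3,
    "balanced": 0.58,
    "performance": 0.5,
    "ultra_performance": 1 / 3,
}


def render_resolution(out_w, out_h, axis_scale):
    """Internal render size for a given output size and per-axis scale."""
    return round(out_w * axis_scale), round(out_h * axis_scale)


def pixel_share(axis_scale):
    """Fraction of output pixels actually rendered (scale applies to both axes)."""
    return axis_scale ** 2


if __name__ == "__main__":
    # Print the internal resolution and pixel share for a 4K output.
    for mode, scale in ASSUMED_AXIS_SCALE.items():
        w, h = render_resolution(3840, 2160, scale)
        print(f"{mode}: {w}x{h}, {pixel_share(scale):.0%} of 4K pixels")
```

With a 50% per-axis scale at 4K output, the game renders 1920x1080 internally, which is where the 25% figure comes from. A 2/3 per-axis scale works out to 2560x1440, or roughly 44% of the output pixels.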

PCMR have gotten soft.

In 2016: "Hahaha, look at you console peasants with your chequerboard upscaling, we in PCMR can run native resolutions and high framerates"

In 2024: "Actually guys, Image upscaling and frame generation is important for me and my $1200 GPU"

> Nothing changes, top GPU right now is more than 2x more powerful than most powerful console. Anyone can play at native 4k but why do that when you can have 50% (or more) better FPS with comparable image quality via DLSS?

For 4x the price .. fantastic value

> Yeah, it's still funny how goalposts change.
>
> Consoles were shit on before for using upscalers to run at higher resolutions than optimal with good enough performance. Nowadays, PC gamers are doing the same thing. It's just funny how things swing around.
>
> I'm not saying not to use DLSS. Use it if you want. It shouldn't be needed though.

It's coming back around with PSSR.

For 4x the price .. fantastic value

I never said that 4090 is good value

Where I live I can buy Pro for:

4090 for:

That's 2.4x more. Power difference between 4090 and 7700xt is 2.2:

Between 4090 and 4070 (you can say that Pro has more feature parity with this one) is exactly 2x.

Still bad value but not 4x. Probably depends where you live.

This is a scary response to my comment. Things are getting weird.

Oh.. I didn't know you can play games on the GPU alone! News to me

Thanks

Discussion was about GPU alone so far.

For someone that already has pc with good enough CPU, GPU alone is needed for upgrade (plus you get money from selling old GPU).

Anyway, xx90 series is not for people that seek value. 5090 will have even more ridiculous price.

The bolded part can be applied to PS5 for people upgrading to the Pro?! So my original point is still valid. Anyway, whatever suits you, man.

Also I can apply your end quote to PS5PRO and say that its not for people who seek value too!!

It's crazy to me that you reached this conclusion and yet you are in every Pro thread crying about its price.

Oh, look, another thread is devolving into the usual PS5 Pro vs PC pissing match.

Back to the topic: What's the predicted price for the 5080, $1000 or $1200? I don't expect anything lower from a company that doesn't have any competition and is focused on the AI market right now (GPUs are like their hobby right now, hahaha).

> Why?
>
> The 90 class is meant for people for whom dropping $2K+ on a GPU is no more significant than you or I dropping $5 on a cheeseburger.
>
> It sucks that PC gaming is like that now but it is what it is.
>
> ..and besides, despite all the hype, 90 class sales only represent 1-2% of the GPU market.

You couldn't be further from the truth.

Why?

The 90 class is meant for people for whom dropping $2K+ on a GPU is no more significant than you or I dropping $5 on a cheeseburger.

It sucks that PC gaming is like that now but it is what it is.

..and besides, despite all the hype, 90 class sales only represent 1-2% of the GPU market.

It's scary for the part that I bolded. It's the fact that you so easily accept it that's scary. $2,000 for a GPU should never be okay.

I think it's one of those things where it exists only because people will buy it that high.

Plus the 5090 is probably more than 2k.

No way LOL! Are you serious or you being funny?

Same as me, I only care about pure price/performance and don't care about RT or upscalers yet.

That's an interesting poll, with 60k+ voters.