People think that just because you put parts inside a proprietary box, you get some kind of free performance increase because of "optimization".

You will get more performance if you optimize your code (amongst other things). But that's not "free"; it costs a lot of money, and I don't think anyone assumes otherwise.

In the past, the delta between consoles at launch and the PCs of the day generally came from the highly customized parts designed specifically for the console boxes and their high level of specialization. Consoles played games and didn't need to devote resources to anything else.

Whether a console dedicates its performance to games or to other uses has nothing to do with using stock parts.

In addition, there was a fairly level playing field in terms of thermals and power draw. PCs weren't sucking down wattage, so consoles could roughly match them in transistor count and design complexity.

I don't see why thermals and power draw have to limit a console (in this price class!). There is no law that says consoles have to be incredibly small or cannot have high power draw.

All of this is different now.

1. Next-gen consoles are going to get parts either directly off the shelf or only lightly customized for their use. The cost of R&D in the semiconductor space has skyrocketed, and the parts made for PC and mobile are good enough that there's no real benefit to designing your own silicon from scratch. This sea change actually came during the design process for the current gen, but Nintendo threw a monkey wrench into the works by going with overclocked GameCube chips, and Sony obviously cost themselves a boatload of money by betting badly on Cell and tossing in the RSX at the last minute.

The 360 got a prototype unified shader GPU, but you won't see that happen next time. Both MS and Sony are going with AMD-based GPU designs, and there's nothing else far enough along in production that could conceivably go into the new consoles. They're getting the GCN architecture; the only questions are transistor count and clock speed.

I don't think you can make any statements about that yet. Microsoft created DirectX and works closely with hardware vendors. I think it is entirely possible that they will use some kind of custom design based on an existing one.

2. Consoles aren't as specialized anymore. The current offerings all do a lot more than just play games, and everyone in the hardware space is doubling down on this for the future. We're going to get boxes that try to do everything and thus have to devote CPU time and RAM to OS-level functionality. This will ultimately reduce the resources available to games.

But by how much? It seems that Microsoft will make heavy use of such features, but it is entirely possible that Sony will be conservative here and make only relatively small improvements (see PS Vita). That would not need many resources.

In addition, there won't be as much low-level coding as there has been in the past. Everyone's concentrating on multiplatform releases, which means higher levels of coding abstraction and less platform-specific optimization. More middleware also means less programming to the metal. Ballooning budgets will take their toll here too, but I'm just concentrating on the technical aspects.

I don't think that's true. Maybe average dev teams won't do much low-level coding, but middleware developers (and bigger dev teams) still will. And budgets won't grow that much.

3. As I mentioned, thermal draw is possibly the biggest factor. High-end PCs have power supplies rated for over 1000W and GPUs that take up a substantial fraction of that. Consoles simply won't be able to measure up.

I don't think this is an issue. Can you build a $400 PC that draws so much power that you couldn't build a comparable console? Thermal draw is irrelevant in this price category.

It's not my intention to really disparage the consoles here. For the amount of money you'll spend, I'm sure both Sony and MS will provide a better experience than a $300-400 PC (for the first year or two, anyway), and developers will (stupidly, IMO) still put out console-only games that you just can't get on PC.

But when you're talking purely in technical terms, I think it's pretty safe to say that Durango and Orbis will definitely not be able to measure up.

It makes no sense to compare PCs to consoles without looking at the price. PCs are highly scalable; of course you can build a more powerful machine if you want to.

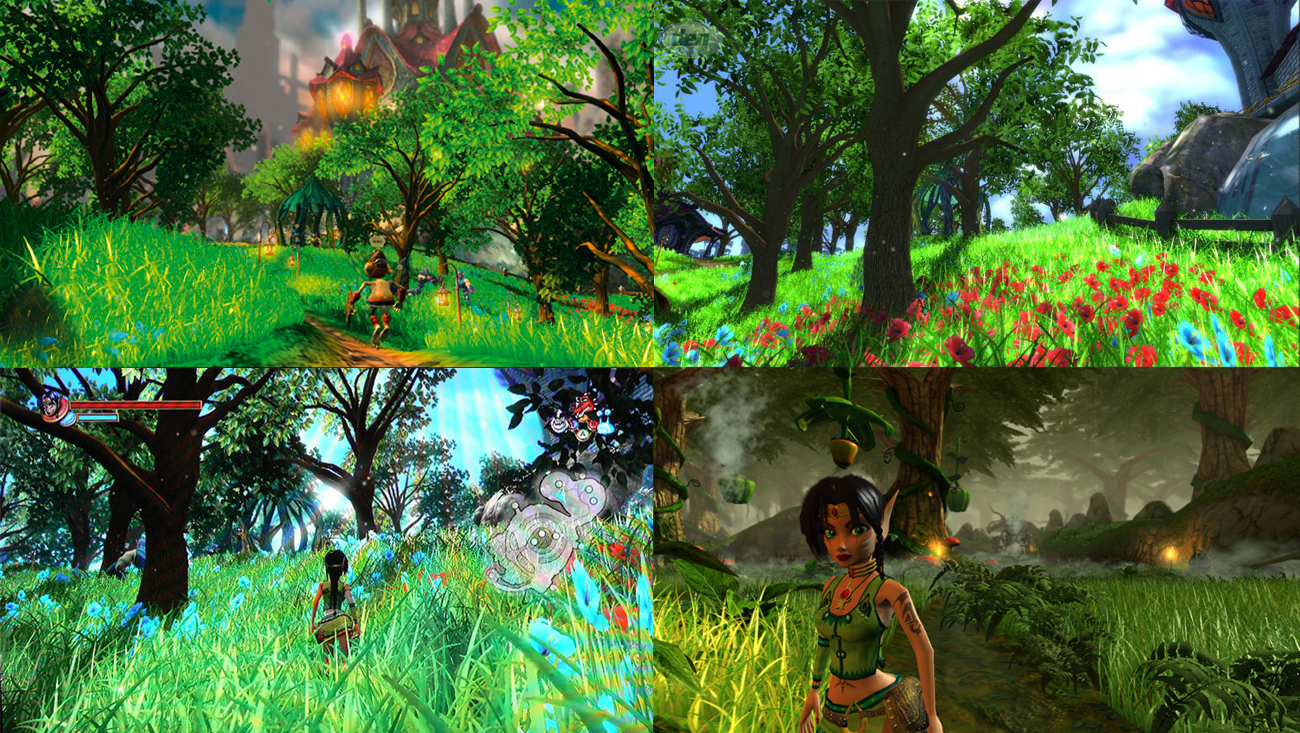

[...] Some shitty textures aside, it takes a lot more hardware grunt than any of the next-gen consoles will have to run it on Ultra at 1080p (and a lot more than that to keep it at 60fps). I think that's what some of us are trying to point out.

I am sure next-gen consoles will be able to display a game like BF3 on Ultra settings at 1080p/30fps. But they won't get that game; they will probably get BF4 with "super ultra" settings at 720p/30fps.
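The resolution and frame-rate targets traded back and forth above are easy to compare with plain arithmetic. Below is a minimal sketch; the helper names are hypothetical, and it assumes GPU load scales roughly with pixels rendered per second, which is only a crude proxy since per-pixel cost depends heavily on the settings used.

```python
# Rough pixel-throughput comparison for the targets discussed above.
# Assumption: GPU load scales with pixels per second. Real per-pixel cost
# varies with settings (Ultra vs. lower), so these are ballpark ratios only.

RESOLUTIONS = {
    "720p": (1280, 720),
    "1080p": (1920, 1080),
}


def pixels_per_frame(name: str) -> int:
    """Number of pixels rendered per frame at a named resolution."""
    width, height = RESOLUTIONS[name]
    return width * height


def pixels_per_second(name: str, fps: int) -> int:
    """Pixels rendered per second at a given resolution and frame rate."""
    return pixels_per_frame(name) * fps


def frame_budget_ms(fps: int) -> float:
    """Time available to render a single frame, in milliseconds."""
    return 1000.0 / fps


if __name__ == "__main__":
    baseline = pixels_per_second("720p", 30)
    for name, fps in [("720p", 30), ("1080p", 30), ("1080p", 60)]:
        pps = pixels_per_second(name, fps)
        print(f"{name}/{fps}fps: {pps:,} px/s, "
              f"{pps / baseline:.2f}x 720p/30, "
              f"{frame_budget_ms(fps):.1f} ms per frame")
```

1080p has 2.25 times the pixels of 720p, and going from 30fps to 60fps halves the per-frame budget from about 33.3 ms to about 16.7 ms, so 1080p/60fps needs roughly 4.5 times the pixel throughput of 720p/30fps before any difference in settings is counted.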