Buggy Loop

Member

Or more precisely, with NeRF-like variants such as 3D Gaussian Splatting.

It's basically a new graphics pipeline that branches off the graphics pipeline tree all the way back at the first rasterization, before shadows, cube maps, lighting techniques, etc.

The beauty of it is that it's not 2D: from videos of real life, this tech basically builds a 3D scene. I doubt it would be practical for a huge game or a very complex map, which is why in the thread title I go back to the pre-rendered background games we know: small scenes that would still be 3D but with the visual quality of real life.

This is a good explanation

Right now it's equivalent to a "cloud" of splats, so it breaks down when you don't have enough data, or if you zoom in or out too much. Hence the requirement for a pre-rendered-background type of game à la Resident Evil.
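To give a rough feel for what a "cloud of splats" means, here is a toy sketch in Python. It is not how the real 3D Gaussian Splatting renderer is written: I'm assuming flat, round 2D splats with a fixed color, while the real thing projects stretched 3D Gaussians and uses view-dependent color. All names here (`Splat`, `shade_pixel`, `render`) are made up for illustration.

```python
import math
from dataclasses import dataclass

@dataclass
class Splat:
    x: float        # centre in pixel coordinates
    y: float
    depth: float    # distance from the camera, smaller means closer
    sigma: float    # isotropic standard deviation in pixels (simplification)
    color: tuple    # (r, g, b), each in 0..1
    opacity: float  # peak opacity in 0..1

def shade_pixel(px, py, splats):
    """Blend every splat over one pixel, nearest first (front-to-back)."""
    r = g = b = 0.0
    transmittance = 1.0  # fraction of light not yet blocked by closer splats
    for s in sorted(splats, key=lambda s: s.depth):
        d2 = (px - s.x) ** 2 + (py - s.y) ** 2
        # Gaussian falloff: the splat fades out away from its centre.
        alpha = s.opacity * math.exp(-d2 / (2.0 * s.sigma ** 2))
        weight = transmittance * alpha
        r += weight * s.color[0]
        g += weight * s.color[1]
        b += weight * s.color[2]
        transmittance *= 1.0 - alpha
    return (r, g, b)

def render(width, height, splats):
    """Return a height x width grid of (r, g, b) tuples."""
    return [[shade_pixel(x, y, splats) for x in range(width)]
            for y in range(height)]

# A tiny hypothetical cloud: a red splat in front of a wider blue one.
cloud = [
    Splat(x=2.0, y=2.0, depth=1.0, sigma=1.0, color=(1.0, 0.0, 0.0), opacity=0.8),
    Splat(x=3.0, y=2.0, depth=2.0, sigma=1.5, color=(0.0, 0.0, 1.0), opacity=0.6),
]
image = render(6, 4, cloud)
```

Pixels far from every splat centre come out nearly black in this toy version, which is roughly the same failure you see in real captures when there isn't enough data or you zoom in too far: the gaps between splats start to show.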

The tech is advancing so rapidly that training for scenes like the ones in the video above went from 48 h with Mip-NeRF 360 a year ago to 6.1 minutes with Ours7K a few months ago, which is roughly 1/470th of the training time.
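Taking the two training times quoted above at face value, the ratio is easy to check. The `speedup` helper is just a throwaway name for this example:

```python
# Training times quoted in the post, converted to minutes.
mipnerf360_minutes = 48 * 60   # 48 h
ours7k_minutes = 6.1           # 6.1 min

def speedup(old_minutes, new_minutes):
    """How many times shorter the new training time is."""
    return old_minutes / new_minutes

factor = speedup(mipnerf360_minutes, ours7k_minutes)
print(f"about {factor:.0f}x faster, i.e. roughly 1/{factor:.0f} of the time")
```

This only compares training time. It says nothing about image quality at those settings.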

You can also get some crazy effects, like a scene just burning in and out of existence (Silent Hill).

Or your next Waifu

But it's static! Well, for now: there are already papers on making it dynamic with neural networks. It's a question of time before AI basically manipulates a cloud of splats like it would animate the skeleton of a game character.

The splats in those clouds can keep shrinking as new algorithms speed things up even more, to the point where accuracy gets down to nearly microscopic detail, and AI may help push that even further.

Point is, it's a new branch of rendering and it's evolving super rapidly. It might get to photorealistic games faster than raw rendering techniques like path tracing and so on. Or maybe not.

It's part of the AI revolution, and it's very hard to predict where it'll be in just a few years.

Be it this tech (eventually) or photogrammetry, I can't be the only one who would love a modern RE with real-life-like backgrounds, right? An indie dev will surely think of it; it's a matter of time.

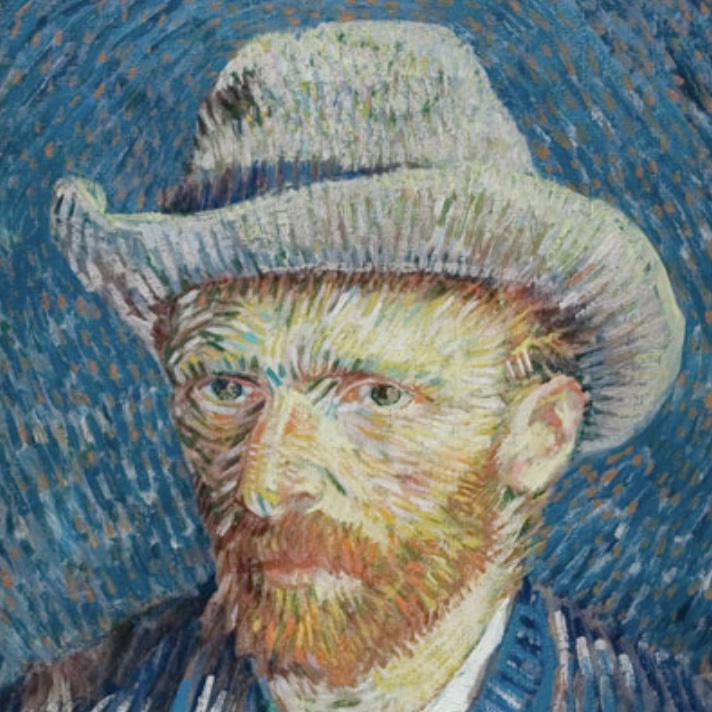
