DanielG165

Very curious to see how Indy performs on Xbox. I know that it's using id Tech, and both modern DOOM games are incredibly well optimized, but Indy is an entirely different beast with a much larger scope. And that PC spec sheet is nuts; it has to be pushing path tracing at the highest settings. Hopefully we can see a 60 fps mode on Series X, as my 2080S will likely struggle due to its limited VRAM.

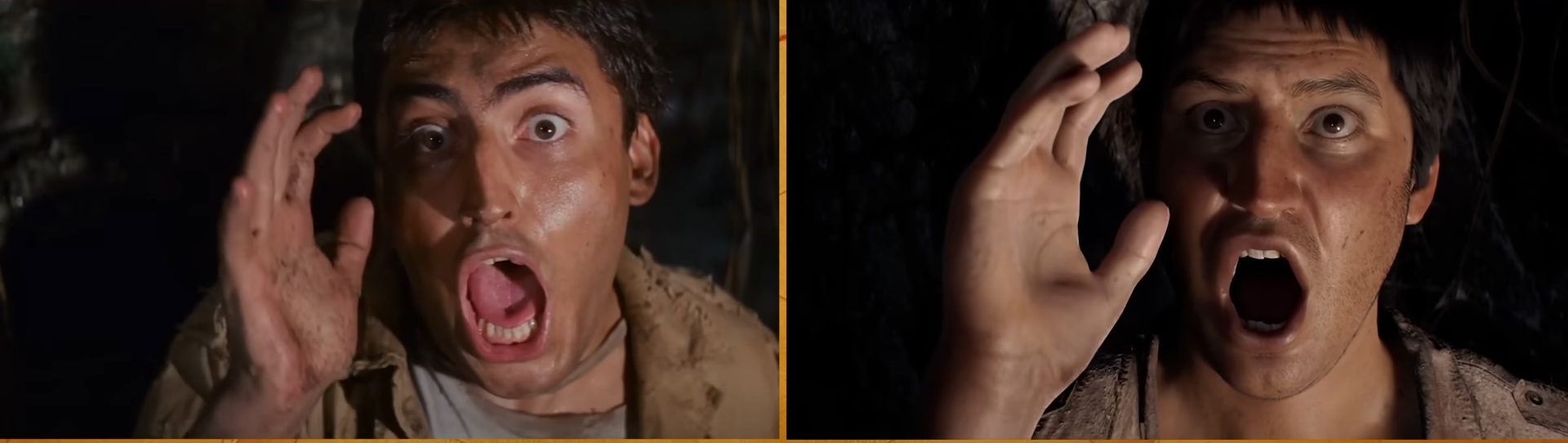

I'm also still one of the few here who genuinely believe that Indy looks incredible across the board. Detail, fidelity, lighting, faces: all of it looks fantastic. Looking forward to next week.