I'm not going to say I regret stepping in to your defense when people there were calling you a clown in that Thread, Corporal.Hicks, as that wouldn't be very mature or true to who I am as a person, but I would be lying if I said I don't now lean toward that opinion myself.

I am not surprised at all to see you completely omitted these from your reconstruction of the events, as revealing the situation for what it was, an obviously genuine attempt at discovering the truth, wouldn't fit with the narrative you're attempting to fabricate.

Or the way you phrased this:

As if, yet again, I wasn't simply reporting what was available online:

Or that my own framerate assessments of the game, in that same message, later turned out to be completely accurate:

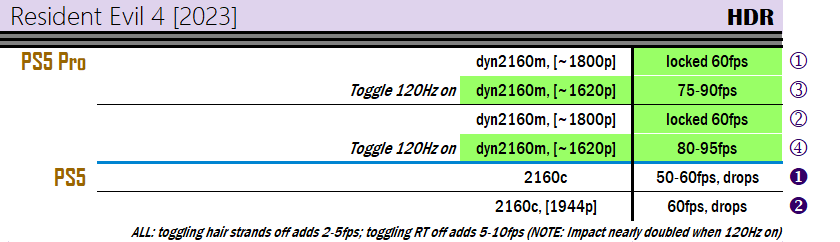

Or that you bring up this chart:

From ResetERA, containing incorrect information, such as the game being machine learning (PSSR) upscaled in the mode we were referring to, or being locked at 60fps:

When other users, playing the game, were already telling you PSSR is not being used in that mode.

No, not at all. Most people would simply recognize this as a good faith mistake caused by incorrect sources:

Combined with analysis on the game screenshots not matching Interlacing issues, not producing Checkerboard artifacts, and not using PSSR.

It would be no different from me calling you a liar for repeating what was contained in that ResetERA chart.

And, as you were already told, I was offended because you implied I did this on purpose, with malicious intent, to prop up the PS5 Pro version, when these were my previous posts in that same Thread warning people about the version when I, unknowingly, had a glitch that caused incorrect visuals:

Which is something you did again in this Thread when you implied I was harsh with my definition of how Path Tracing runs on PC while favoring the PS5 version, which is nothing more than a bald-faced lie, given that my only posts ever on said console version were:

You appear to have a serious issue when it comes to jumping to wrong conclusions, and being obnoxious when faced with it. And now you have done the same for a third time:

At this point I am confident many people would simply abandon their composure.

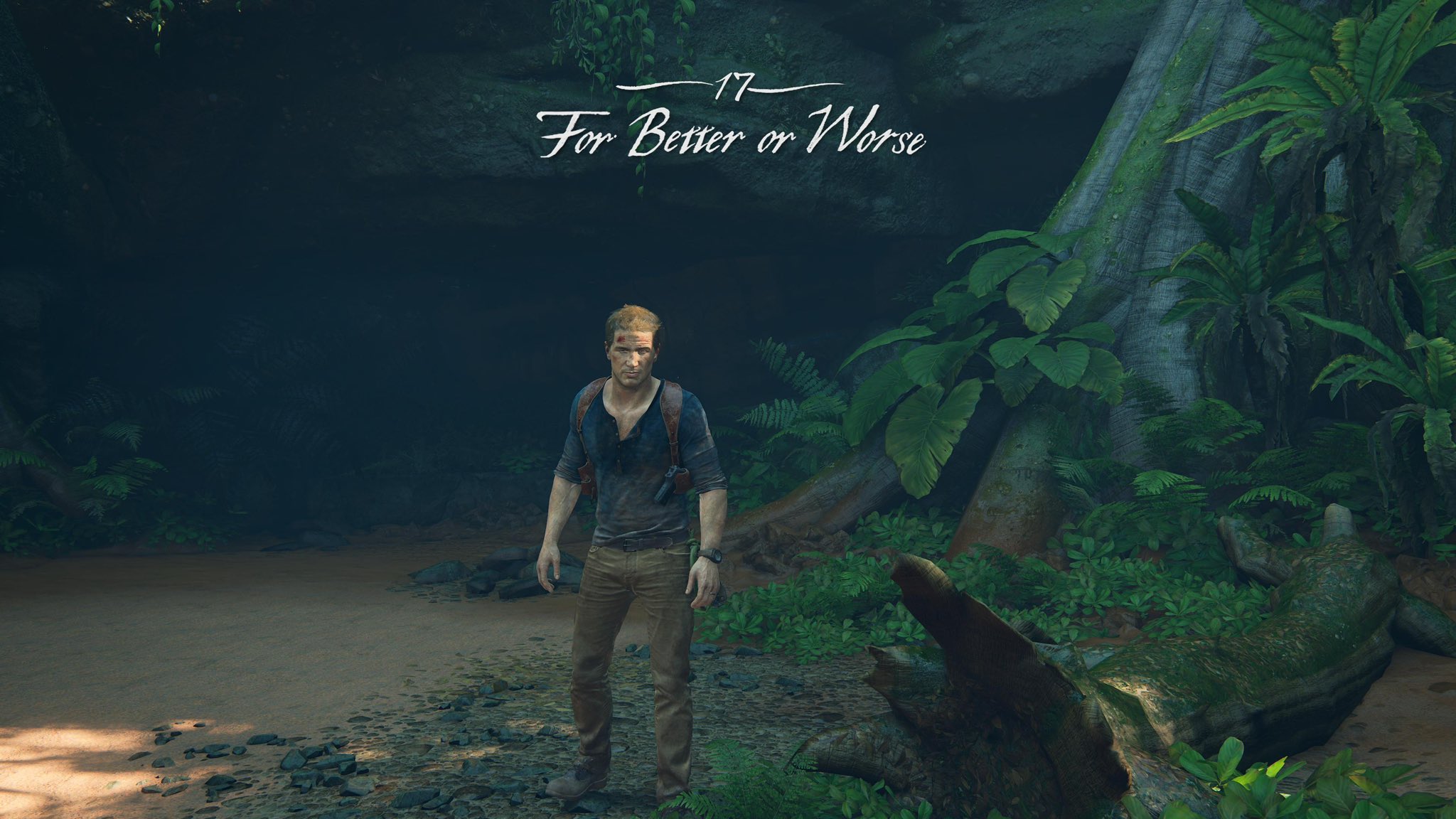

PS4 Version:

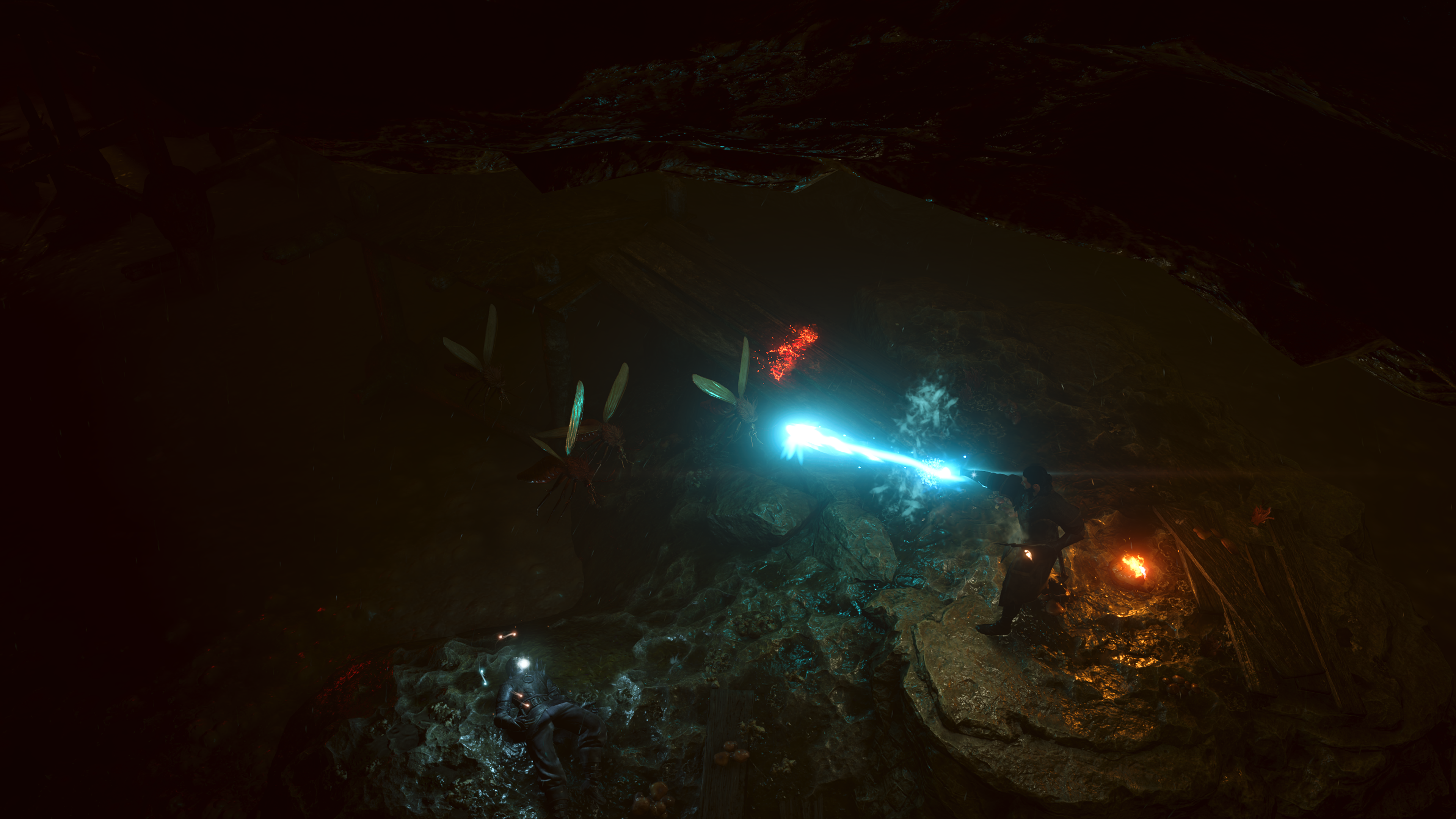

PC Max 4090:

As was also obviously depicted in the image above the one you chose.

Every single one of the screenshots I posted (nothing more than a sample of literally hundreds of issues between missing shadows, reflections, shaders, VFX, particles, etc.) was made in regard to the original 2016 PS4 version, not the PS5 version.

And as for your bizarre persistence when it comes to your issues with Uncharted 4 lighting, there is not much more I can do other than once more expose them as aimless ramblings, as those specific issues have nothing to do with the Thread, given the situation you described has been addressed by Threat Interactive in the video (time-stamped) in the very first post of this Thread:

And they do not represent what rasterized solutions could ultimately deliver, as already shown in many other games.

Ultimately, your entire point on the 2016 software created to run on $399 2013 hardware is an absolutely obvious one that everyone with an IQ surpassing the single-digit threshold knows already, and one that I've made myself more than once in this very Thread.

So when you reveal yourself as an edgy teenager with statements like this:

I am only confused once more as to what kind of discussion you believe is taking place, considering the only point advanced is that the gains RT would bring in selected instances, over the use of lighter, alternative solutions, aren't worth the drastic drop in resolution and framerate, and image quality issues such as reconstruction/denoising artifacts, on the target hardware this Thread is about.

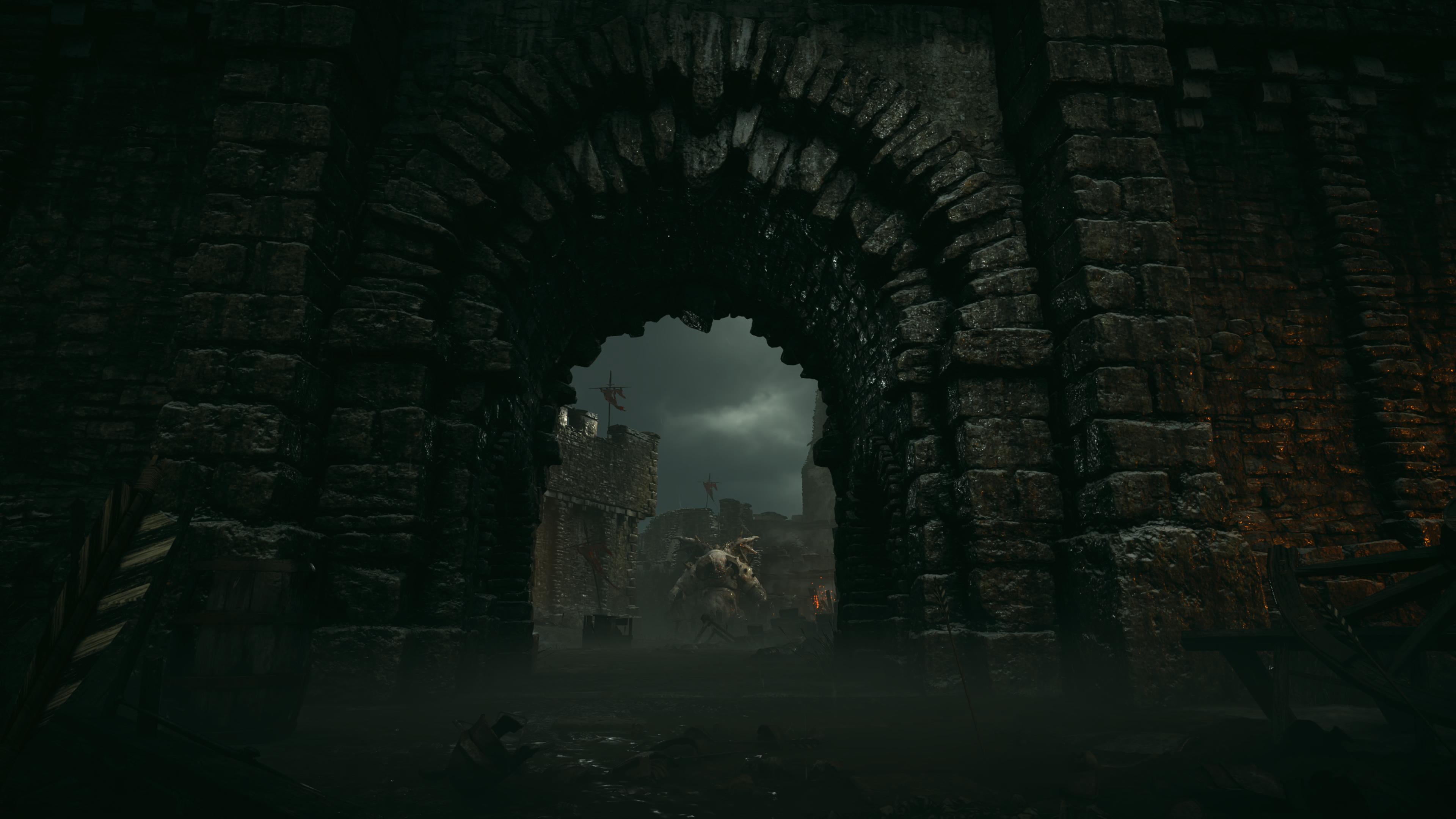

You mentioned Metro Exodus multiple times, but this is the kind of image it produces on PS5:

A reconstruction/denoising mess, with shading on the tree worthy of PS2 software.

And that is even pretending that the base, crude version of Metro Exodus underneath the RT implementation is anywhere close to something like Uncharted 4 when it comes to the underlying technical structure and hardware cost due to assets, mechanics, and animations to begin with, or that 9 out of 10 random people wouldn't pick PS4 Uncharted 4 as the better-looking game even in spite of RT.

The entire concept, simple enough to be toddler-proof, has been pointed out to you repeatedly and yet somehow still hasn't landed.

Would you rather play shimmering/noisy/artifacty software (often around 800p internal) with RT, or something like an evolved 2025 version of 2016 PS4 Uncharted 4/Lost Legacy at native 4K 60fps and 1440p 120fps?

Because it's as simple as that. And:

"Yeah, I'd take the much better resolution, IQ, framerate and clean visuals, often still looking absolutely incredible as you have clearly shown multiple times, that artists and competent people created over what Alan Wake 2, Wukong, Jedi Survivor or Outlaws delivered on the PS5 I'm playing on, because I'm a fucking anal bastard who loves to put his 2.5K PC and IQ at the center of virtually every single post here, and I could never stand such glaring compromises when playing on this console"

Is the only possible answer here, and happens to immediately put an end to the argument.

And SVOGI, not being RT or PT, falls squarely within my own point about what would be possible to achieve in the absence of the heavier alternatives provided by RT and PT.