T4keD0wN


Can someone give me a TL;DR, without the DLSS and AI bullshit?

What is the raw performance improvement from the 4080 to the 5080?

Or better yet, can someone calculate 3080 vs 5070 Ti vs 5080?

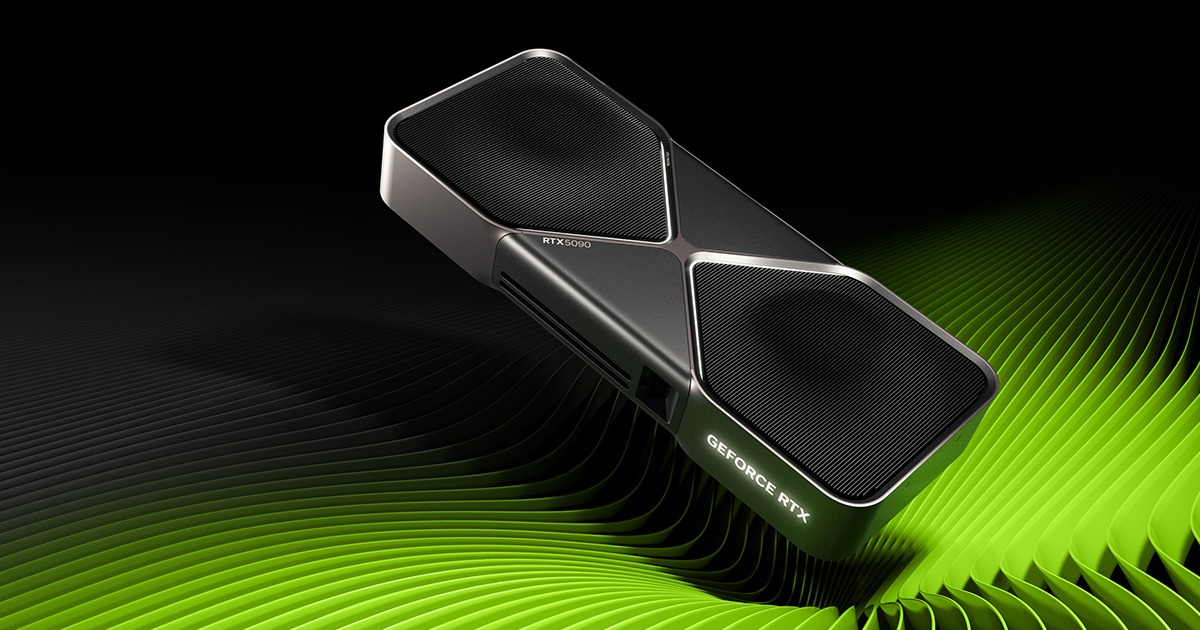

Look at Far Cry 6: green is the 5080, grey is the 4080. That result is with RT enabled, and the 5080's RT cores are better (the different RT core counts complicate it further), so the true raw performance improvement should be even lower than this. It's barely an improvement in that regard; it's all about AI bullshit now.

The 4080 is about 149% of a 3080. The 4080 to 5080 step is smaller: probably ~35% with RT and less than 30% without. So in raster the 5080 should land slightly under double a 3080, and that's not counting VRAM possibly holding the 3080 back. With RT, you should be looking at 200%+ of a 3080's performance going from a 3080 to a 5080.
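The estimate above is just chained ratios: if the 4080 is r1 times a 3080 and the 5080 is r2 times a 4080, then the 5080 is r1 × r2 times a 3080. A quick sketch of that arithmetic, using only the rough figures from this post (1.49, ~35% with RT, <30% raster) rather than any measured benchmark data; the constant and variable names are made up for illustration.

```python
from math import prod

# Rough figures from the post, not measured benchmark data.
RATIO_4080_OVER_3080 = 1.49          # 4080 is ~149% of a 3080
UPLIFT_5080_OVER_4080_RT = 1.35      # ~35% with RT (Far Cry 6 chart)
UPLIFT_5080_OVER_4080_RASTER = 1.30  # "less than 30%" without RT, used as an upper bound

# Chaining generation-over-generation ratios means multiplying them.
rt = prod([RATIO_4080_OVER_3080, UPLIFT_5080_OVER_4080_RT])
raster = prod([RATIO_4080_OVER_3080, UPLIFT_5080_OVER_4080_RASTER])

print(f"3080 -> 5080 with RT: ~{rt:.2f}x")
print(f"3080 -> 5080 raster:  <{raster:.2f}x")
```

With these inputs the RT case comes out at about 2.01x and the raster upper bound at about 1.94x, which is where "slightly under double" and "200%+" come from.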

Worth noting: Far Cry 6's ray tracing is very light, so it shouldn't play a big role here. There's also a possibility of a CPU bottleneck, since the Dunia Engine doesn't have the best multithreading. My pessimistic estimate for raster, 3080 to 5080, is ~1.90x (if I presume the better RT cores affect the chart); optimistic is ~2.00x if they don't.
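Working backwards shows what 4080 to 5080 raster uplift each of those two estimates assumes: divide the 3080 to 5080 figure by the ~1.49x 4080-over-3080 ratio. This is a sketch using only the numbers quoted in this post; the names are hypothetical.

```python
# Rough figures from the post, not measured benchmark data.
RATIO_4080_OVER_3080 = 1.49
estimates = {"pessimistic": 1.90, "optimistic": 2.00}  # 3080 -> 5080 raster

# Undo the 3080 -> 4080 step to get the implied 4080 -> 5080 ratio.
implied = {name: total / RATIO_4080_OVER_3080 for name, total in estimates.items()}

for name, ratio in implied.items():
    print(f"{name}: {estimates[name]:.2f}x over a 3080 "
          f"implies +{(ratio - 1) * 100:.1f}% over a 4080")
```

The pessimistic 1.90x works out to roughly +27.5% over a 4080, and the optimistic 2.00x to roughly +34%, i.e. close to the ~35% RT figure from the chart.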
