YeulEmeralda

Linux User

I'm wondering if a 5070/80 will be enough to last the PS6 generation. Planning on building a new PC next year.

> You guys definitely need a 5090 to play all those indie games lmao. Wake me up when we get actual demanding visual beasts on PC. Until then, the 7080 is a more realistic wait-for-buy.

4090 is shit tier when it comes to AI training, though.

> I've been one of the premium retards who bought a 4090 for the price Nvidia was asking for.
>
> What can I say? I am a massive idiot.

I got mine at launch (a generic Gigabyte) for 1600 or so and never regretted it.

> I'm more concerned about the power port they're using these days. It's literally the only thing keeping me from even thinking about getting an Nvidia card until it's solved, but I'm willing to give it a go if reports are good.

I want to confirm if the "fire" reaction from

> 4090 is shit tier when it comes to AI training, though.

Eh. It's actually one of the reasons why I bought one. It's not that bad. You can do a surprising amount with 24 gigs of VRAM.

> 5080 should once again be the sweet spot in terms of performance and power consumption. In for one when it shows up.

Lol. Okay.

Hopefully this means they’ve got 5xxx series laptop GPUs for next year’s gaming laptop refreshes too

> Eh. It's actually one of the reasons why I bought one. It's not that bad. You can do a surprising amount with 24 gigs of VRAM.
>
> But for anything related to LLMs (even with LoRA etc.), you probably need something with 64 gigs or more: Amazon AWS, Google Colab, etc. Or you use a smaller LLM, like Mistral 7B or so. And you can often get by with just Retrieval Augmented Generation instead of fine-tuning it for real.

Not sure what kind of training you're doing, but I've trained my own LoRAs, and all the training I've done has taken 4+ hours at least, if not 8. Of course, it could be the way I set it up, but based on the it/sec it only makes sense that the 5090 would handle these operations significantly faster. Shit tier for me is anything that makes you wait for hours.
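The VRAM point about LLMs can be put into rough numbers. This is a back-of-the-envelope sketch, not a measurement: it assumes fp16/bf16 weights (2 bytes per parameter) and the common rule of thumb of about 16 bytes per parameter for full fine-tuning with mixed-precision Adam, and it ignores activations and framework overhead. The helper names are made up for illustration.

```python
# Back-of-the-envelope VRAM estimate for an LLM on a single GPU.
# Assumptions, not measurements: activations, KV cache, CUDA context and
# framework overhead are all ignored, so real usage will be higher.

BYTES_FP16 = 2            # fp16/bf16 weights: 2 bytes per parameter
BYTES_FULL_FT_ADAM = 16   # rule of thumb for mixed-precision Adam:
                          # fp16 weights + fp16 grads + fp32 master copy
                          # + two fp32 optimizer moments (2+2+4+4+4)


def to_gib(num_bytes: float) -> float:
    """Convert a byte count to GiB."""
    return num_bytes / 2**30


def weights_only_gib(num_params: float) -> float:
    """Memory just to hold the weights (inference, or the frozen base in LoRA)."""
    return to_gib(num_params * BYTES_FP16)


def full_finetune_gib(num_params: float) -> float:
    """Memory for full fine-tuning with mixed-precision Adam."""
    return to_gib(num_params * BYTES_FULL_FT_ADAM)


if __name__ == "__main__":
    params = 7e9  # a "7B" model such as Mistral 7B (rounded parameter count)
    print(f"weights only:     {weights_only_gib(params):6.1f} GiB")
    print(f"full fine-tuning: {full_finetune_gib(params):6.1f} GiB")
```

Under these assumptions a 7B model needs roughly 13 GiB just for its fp16 weights, which fits on a 24 GB card, but over 100 GiB for full fine-tuning. LoRA keeps the base weights frozen and trains only small adapter matrices, so the optimizer overhead applies to a tiny fraction of the parameters.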

> Not sure what kind of training you're doing, but I've trained my own LoRAs, and all the training I've done has taken 4+ hours at least, if not 8. Of course, it could be the way I set it up, but based on the it/sec it only makes sense that the 5090 would handle these operations significantly faster. Shit tier for me is anything that makes you wait for hours.
>
> I've also trained my own checkpoints, which took even longer.

Training time is not really my concern, rather whether I can fit my model on the card during training (I am already using stuff like gradient accumulation etc.). So I guess we just have different aspects that are important to us.
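On the speed side of this exchange, the it/s figure a trainer reports converts directly into wall-clock time. A minimal sketch; the step count and both it/s rates are made-up placeholders, not benchmarks of any card, and `training_hours` is a hypothetical helper.

```python
def training_hours(total_steps: int, it_per_sec: float) -> float:
    """Wall-clock hours for a run, from its step count and measured it/s."""
    if it_per_sec <= 0:
        raise ValueError("it_per_sec must be positive")
    return total_steps / it_per_sec / 3600


if __name__ == "__main__":
    steps = 10_000  # hypothetical run length
    # Hypothetical rates: a baseline card and one that is 1.5x faster.
    for label, rate in [("baseline", 0.5), ("1.5x faster", 0.75)]:
        print(f"{label:>12}: {training_hours(steps, rate):.1f} h")
```

Time scales with 1/(it/s), so a card that is 1.5x faster turns this hypothetical 5.6-hour run into about 3.7 hours. It does nothing for whether the model fits in VRAM, which is the other constraint raised here.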

> Training time is not really my concern, rather whether I can fit my model on the card during training (I am already using stuff like gradient accumulation etc.). So I guess we just have different aspects that are important to us.

Ah, thanks, I had to look up gradient accumulation. I'm using kohya_ss and wonder if that's an option there, and if it would speed up my training given the right parameters.
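Gradient accumulation trades time for memory: instead of pushing one large batch through the card, you run several small micro-batches, add up their scaled gradients, and take a single optimizer step. Only one micro-batch's activations sit in VRAM at a time, so it helps a model fit rather than making training faster. The sketch below is plain Python on a toy one-parameter least-squares model, so it runs without PyTorch; the data, learning rate and helper names are hypothetical.

```python
# Gradient accumulation on a toy model y ~ w * x with mean-squared-error loss.
# The data, learning rate and micro-batch size are hypothetical.


def grad_mse(w: float, xs: list[float], ys: list[float]) -> float:
    """Gradient d/dw of mean((w*x - y)^2) over one batch."""
    return sum(2 * (w * x - y) * x for x, y in zip(xs, ys)) / len(xs)


def full_batch_step(w: float, xs: list[float], ys: list[float], lr: float) -> float:
    """One SGD step on the whole batch at once (largest memory footprint)."""
    return w - lr * grad_mse(w, xs, ys)


def accumulated_step(w: float, xs: list[float], ys: list[float],
                     lr: float, micro_batch: int) -> float:
    """The same SGD step, built from equal-size micro-batches."""
    if len(xs) % micro_batch != 0:
        raise ValueError("batch size must be a multiple of micro_batch")
    accum_steps = len(xs) // micro_batch
    acc = 0.0
    for i in range(0, len(xs), micro_batch):
        # Only this slice (and its activations) would need to sit in VRAM.
        acc += grad_mse(w, xs[i:i + micro_batch], ys[i:i + micro_batch]) / accum_steps
    return w - lr * acc  # a single optimizer update after all micro-batches


if __name__ == "__main__":
    xs = [1.0, 2.0, 3.0, 4.0, 5.0, 6.0, 7.0, 8.0]
    ys = [2.1, 3.9, 6.2, 7.8, 10.1, 12.0, 13.8, 16.1]
    print(full_batch_step(0.0, xs, ys, lr=0.01))
    print(accumulated_step(0.0, xs, ys, lr=0.01, micro_batch=2))
```

Both printed values agree up to floating-point rounding. In PyTorch the same pattern is usually written by dividing each micro-batch loss by the number of accumulation steps, calling `loss.backward()` on each (gradients add up in `.grad`), and calling `optimizer.step()` and `optimizer.zero_grad()` only every k micro-batches.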

> Ah, thanks, I had to look up gradient accumulation. I'm using kohya_ss and wonder if that's an option there, and if it would speed up my training given the right parameters.

Ah, I am not familiar with Kohya_ss. At first glance, it seems like a training library/framework for diffusion models? Interesting. I am using vanilla diffusion models as pre-trainers for my encoder-decoder networks. But I had so many specific requirements that I opted to write my own stuff (using PyTorch, obviously).

OK, let's just hope the 5080 is actually affordable and a worthy upgrade from the 3080.

> Ah, I am not familiar with Kohya_ss. At first glance, it seems like a training library/framework for diffusion models? Interesting. I am using vanilla diffusion models as pre-trainers for my encoder-decoder networks. But I had so many specific requirements that I opted to write my own stuff (using PyTorch, obviously).
>
> ...which was a fucking bitch to debug.

Yeah, I don't have the time to get that low-level. I am basically just kiddie-scripting my way through it with a very rough understanding.

> I'm wondering if a 5070/80 will be enough to last the PS6 generation. Planning on building a new PC next year.

4080/4090 are already PS6-proof.

> Yeah, I don't have the time to get that low-level. I am basically just kiddie-scripting my way through it with a very rough understanding.

Training taking hours doesn’t make a card “shit tier”. You would have to pay significantly more to get much better results. A 48GB version of basically a 4090 tuned for AI will cost at least 3x, and it only goes up from there.

> 4080/4090 are already PS6-proof.

Let’s not get crazy. PS6 will launch in 2028 and the gen will go for 7-8 years if it’s business as usual.

> 4080/4090 are already PS6-proof.

That's crazy talk…

> 4080/4090 are already PS6-proof.

Nah. If the PS5 Pro is supposedly at 4070 level, the 4090 is not safe at all. It should either get matched or beaten by the PS6.

> Let’s not get crazy. PS6 will launch in 2028 and the gen will go for 7-8 years if it’s business as usual.
>
> The 4090 would be a 14-year-old card by the end of that gen. Even a 5090 would be a 12-year-old card by then. I don’t believe that will possibly be enough. Either way, you would need to upgrade somewhere in the PS6 generation, sooner with a 4090.

Your math is a bit off.

> Your math is a bit off.
>
> PS6 is likely to launch in late 2027/early 2028. The 4090 would be just a bit over 5 years old by then. Add another 7 years and it'd be just a bit over 12 years old.
>
> The 5090 is presumably launching in late 2024/early 2025. It would be just about 3 years old by the time the PS6 launches and about 10 years old at the end.
>
> We're talking 10 and 12 years old, not 12 and 14. Not a big difference, but still. The GTX 1080 is turning 8 years old this month. I believe it can last the rest of the gen as long as ray tracing remains optional. A 5090 could feasibly get someone through the PS6 generation.

You are missing a year here and there. Let’s say the 5090 launches at the end of 2024. The PS6 launches toward the end of 2028 (probably in November, as usual). That’s a 4-year-old card.

> You are missing a year here and there. Let’s say the 5090 launches at the end of 2024. The PS6 launches toward the end of 2028 (probably in November, as usual). That’s a 4-year-old card.

PS6 is unlikely to launch at the end of 2028. The end of 2027 is more plausible.

> PS5 launched in 2020. PS6 would launch in 2028. That’s an 8-year interval. So again, a 12-year-old card. The 4090 will be a 2-year-old card, so at the end of the gen it will be 14.

Nope, it would be 7 years. PS5 launched in Nov 2020, less than 2 months before 2021. The 4090 launched in Oct 2022. If the PS6 launches in Nov 2027, the 4090 will be 5 years and 1 month old. Basically 5 years.
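Most of this disagreement is off-by-one date arithmetic, and counting in calendar months settles it. A small sketch using the thread's dates: the 4090 in Oct 2022, a PS6 in Nov 2027 and a seven-year generation are the thread's speculation, and the Jan 2025 date for a 5090 is a placeholder. The `age` helper is hypothetical.

```python
# Card age in whole years and months, counting calendar months.
# The RTX 4090 date (Oct 2022) comes from the thread; the PS6 date, the
# seven-year generation and the Jan 2025 5090 date are assumptions.


def age(launch: tuple[int, int], when: tuple[int, int]) -> tuple[int, int]:
    """Return (years, months) elapsed between two (year, month) dates."""
    months = (when[0] - launch[0]) * 12 + (when[1] - launch[1])
    if months < 0:
        raise ValueError("'when' is before 'launch'")
    return divmod(months, 12)


if __name__ == "__main__":
    rtx_4090 = (2022, 10)
    rtx_5090 = (2025, 1)     # hypothetical launch date
    ps6_launch = (2027, 11)  # hypothetical, per the thread
    gen_end = (2034, 11)     # hypothetical: a seven-year generation
    for name, launch in [("4090", rtx_4090), ("5090", rtx_5090)]:
        y0, m0 = age(launch, ps6_launch)
        y1, m1 = age(launch, gen_end)
        print(f"{name}: {y0}y {m0}m at PS6 launch, {y1}y {m1}m at end of gen")
```

Under those assumptions the 4090 is 5 years 1 month old at a PS6 launch and just over 12 at the end of the generation, while a January 2025 5090 comes out at 2 years 10 months and 9 years 10 months: the 12/10 figures, not 14/12.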

> Sony might cut next gen to 7 years or may launch in early 2028, who knows, but if things go as with past gens, the above is the likely outcome. IMO a better bet is to get a 5080, save up some money and get a 6080 halfway through. That is, if a midway 90-series replacement is out of budget.

What do you mean, "cut to 7 years"? Generations have lasted 7 years since the PS3 days.

> In any case, a 1080 wouldn’t last the rest of this gen well. Some games are already coming out with RT as the main option.

And they still work without ray tracing. RT will invariably be optional in console games because the consoles don't have the horsepower to run it properly most of the time. There's also the fact that the Series S needs to be supported until the end of the generation, and a 1080 will destroy that console. A 1080 will last to the end of the generation. It won't run games great or anything, but if you're willing to lower some settings and forgo ray tracing, it'll last you until 2028.

> I guess we will see what happens with the 5000 series cards, but IMO, a much better experience would be to get, say, a 5080 and replace it halfway through the gen.

Obviously. I wouldn't recommend anyone ride any GPU for a decade, but hey, if you wanna do it, you can. Just don't expect great results.

Keep in mind that Kopite7kimi might be seeing a cut-down die and thinking that it’s the 5080… while Nvidia will release that die and name it the 5090 at $2000.

> Training taking hours doesn’t make a card “shit tier”. You would have to pay significantly more to get much better results. A 48GB version of basically a 4090 tuned for AI will cost at least 3x, and it only goes up from there.
>
> For the price, a 4090 isn’t bad. Also, as long as the model fits into memory, you can use parallel cards to decrease the time required. Again, it depends on what you are doing, of course.

I was exaggerating, but let's be honest: who wants to wait around for hours unless they have a dedicated machine or are renting a cloud GPU? It's true that VRAM is really more important for reducing time, although as far as I understand, the tensor cores are involved in operations getting the data back and forth from the VRAM, and newer generations generally have more performant tensor cores. I'm pretty sure the 5090 will be faster than a 4090 even if it is still only 24GB.
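The "parallel cards" suggestion usually means data parallelism: each GPU holds a full copy of the model (hence the "as long as it fits" caveat), processes its own slice of the batch, and the gradients are averaged before every card applies the same update. A plain-Python simulation of that averaging step, with hypothetical data, a toy one-parameter model and made-up helper names; no real multi-GPU API is involved.

```python
# Simulated data parallelism: each "GPU" gets an equal shard of the batch,
# computes a local gradient, and the results are averaged (what an
# all-reduce does on real hardware). Toy model: y ~ w * x, MSE loss.


def grad_mse(w: float, xs: list[float], ys: list[float]) -> float:
    """Gradient d/dw of mean((w*x - y)^2) over one batch."""
    return sum(2 * (w * x - y) * x for x, y in zip(xs, ys)) / len(xs)


def data_parallel_grad(w: float, xs: list[float], ys: list[float], num_gpus: int) -> float:
    """Average of per-device gradients over equal-size shards."""
    if len(xs) % num_gpus != 0:
        raise ValueError("batch must split evenly across devices")
    shard = len(xs) // num_gpus
    local = [
        grad_mse(w, xs[i * shard:(i + 1) * shard], ys[i * shard:(i + 1) * shard])
        for i in range(num_gpus)  # on real hardware these run concurrently
    ]
    return sum(local) / num_gpus  # stand-in for an all-reduce (mean)


if __name__ == "__main__":
    xs = [1.0, 2.0, 3.0, 4.0, 5.0, 6.0, 7.0, 8.0]
    ys = [2.1, 3.9, 6.2, 7.8, 10.1, 12.0, 13.8, 16.1]
    print(grad_mse(0.5, xs, ys), data_parallel_grad(0.5, xs, ys, num_gpus=4))
```

With equal shards the averaged gradient matches the single-card gradient up to rounding, so the cards stay in sync. Each card still holds the whole model, which is why this cuts time but not the per-card memory requirement. PyTorch's `DistributedDataParallel` performs this averaging with an all-reduce during the backward pass.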

> I was exaggerating, but let's be honest: who wants to wait around for hours unless they have a dedicated machine or are renting a cloud GPU? It's true that VRAM is really more important for reducing time, although as far as I understand, the tensor cores are involved in operations getting the data back and forth from the VRAM, and newer generations generally have more performant tensor cores. I'm pretty sure the 5090 will be faster than a 4090 even if it is still only 24GB.

It will be faster, sure, but nobody will know till specs are released.

> It will be faster, sure, but nobody will know till specs are released.
>
> And really, inference is more of an issue time-wise, as you need a big enough farm to answer requests in seconds. Well, for actual service providers vs. individual users, of course.
>
> Training taking hours is OK in that scenario. But yeah, if this is your main PC, that could be an issue.

Even with inference, if I want to create a simple AnimateDiff clip at 30fps, that's a lot of frames to generate, and queuing that up could potentially take just as long as training a model. Correct me if I'm wrong, but this is more reliant on tensor cores because you aren't using as much VRAM for each frame, and in this case a later-generation tensor core, such as those presumably found in the 5090, will improve these kinds of processes as well. I wonder, would having twice as much VRAM even matter in that case?

> Even with inference, if I want to create a simple AnimateDiff clip at 30fps, that's a lot of frames to generate, and queuing that up could potentially take just as long as training a model. Correct me if I'm wrong, but this is more reliant on tensor cores because you aren't using as much VRAM for each frame, and in this case a later-generation tensor core, such as those presumably found in the 5090, will improve these kinds of processes as well. I wonder, would having twice as much VRAM even matter in that case?

This is all true, but what I am saying is that for the last 1.5 years or so, the 4090 was a very good card for AI compared to its price point. You would need to spend like $5-6K on something remotely better. That’s not shit tier. Training just takes time.

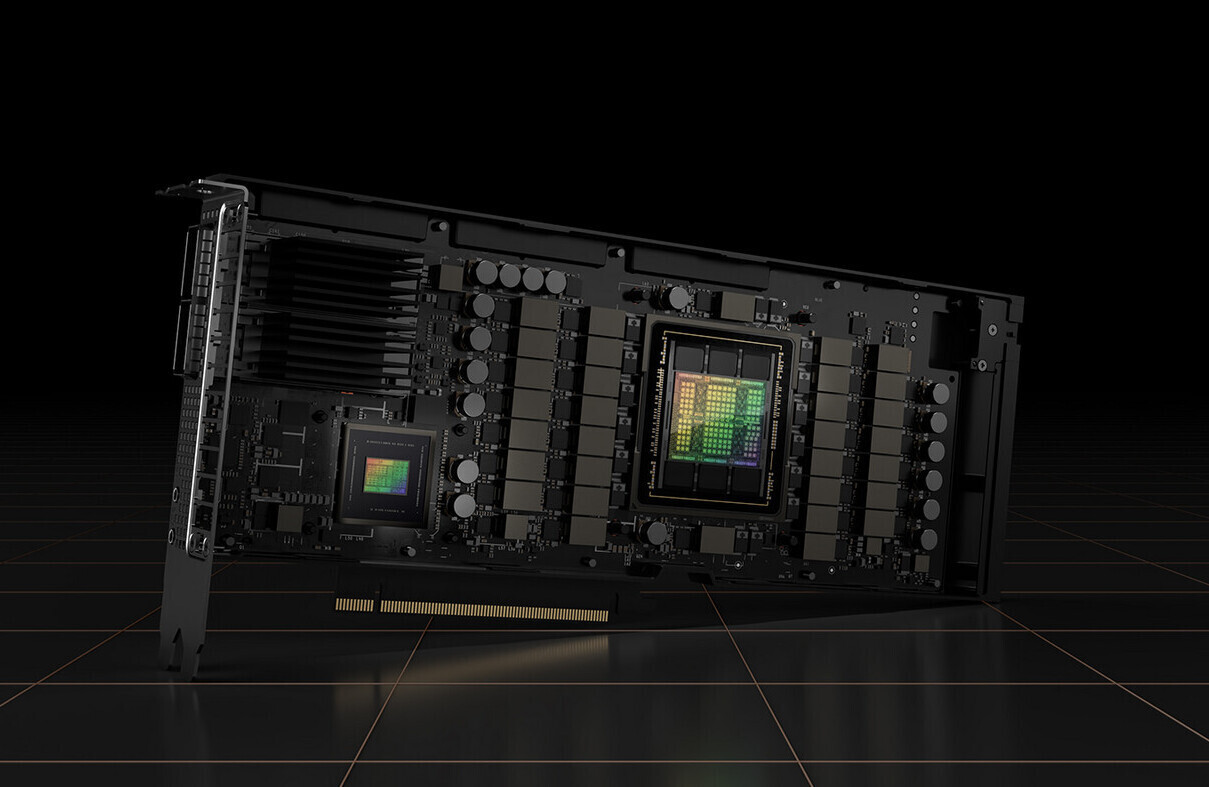

According to Benchlife.info insiders, NVIDIA is supposedly testing designs with various Total Graphics Power (TGP) levels, ranging from 250 W to 600 W, for its upcoming GeForce RTX 50 series Blackwell graphics cards. The 250 W design is aimed at mainstream users, while the more powerful 600 W configuration is tailored for enthusiast-level performance. The 250 W cooling system is expected to prioritize compactness and power efficiency, making it an appealing choice for gamers seeking a balance between capability and energy conservation. This design could prove particularly attractive for those building small form-factor rigs, or for AIBs looking to offer smaller coolers. On the other end of the spectrum, the 600 W cooling solution is the highest TGP of the stack and is possibly made only for testing purposes. Other SKUs with different power configurations fall in between.

We witnessed NVIDIA testing a 900-watt version of the Ada Lovelace AD102 GPU SKU, which never saw the light of day, so we should take this testing phase with a grain of salt. Often, engineering silicon is the first batch made to enable software and firmware development, while the final silicon is much more efficient, better optimized to use less power, and aligned with regular TGP structures. The current highest-end SKU, the GeForce RTX 4090, uses a 450-watt TGP. So, take this phase with some reservations as we wait for more information to come out.