Maybe I'm crazy, but a game running better on more threads doesn't sound like "horrible code" to me. If anything, shouldn't it be the norm that saturating more threads leads to better performance in CPU limited scenarios? Otherwise we're back to games being bottlenecked by mostly using one or two main threads and only IPC making a difference. Sounds like the latter is the worse code to me in the era of 8 cores and 16 threads.

That’s a fair reaction; I was unintentionally misleading.

First off, you are correct in an apples-to-apples vacuum. But consider this: keeping your CPU threads fed is often more important than how many of them you have. Every nanosecond a core spends waiting on data is wasted performance.

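Though the 3700/X has two more cores (four more threads), it is built on the older Zen2 architecture. The 5600/X is built on the newer Zen3 architecture. Both are made on TSMC's 7nm process, so the gains come from the redesign rather than a node shrink. One of the headline changes was the L3 cache: Zen2 splits each eight-core chiplet into two four-core groups with 16 MB of L3 each, while Zen3 unifies it so every core on the chiplet can reach the full 32 MB.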

Why do you want more cache on the chip? Because then the CPU can keep more of the data it needs “on hand,” right when it needs it.

A big penalty for games, due to their dynamic nature versus regular old applications, is that what the CPU (and the whole system) needs can change a lot per frame.

Back to split versus unified L3: Zen3 merged Zen2's two separate L3 pools per chiplet into one pool that every core shares, which reduced data contention and waiting. Zen3 made many other improvements, but this example is meant to be illustrative rather than exhaustive.
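If it helps to see why the size of the pool a core can reach matters, here is a toy sketch. It assumes a plain LRU cache and a working set that is touched in the same order every frame; real L3 caches use different replacement policies, and the sizes are arbitrary units I made up, not measurements. The function name `hit_rate` is mine.

```python
from collections import OrderedDict


def hit_rate(capacity, working_set, passes=10):
    """Fraction of accesses served by a toy LRU cache holding `capacity` items.

    Each pass touches items 0..working_set-1 in order, loosely standing in
    for a game that walks the same data every frame.
    """
    cache = OrderedDict()
    hits = 0
    total = 0
    for _ in range(passes):
        for addr in range(working_set):
            total += 1
            if addr in cache:
                hits += 1
                cache.move_to_end(addr)  # mark as most recently used
            else:
                cache[addr] = True
                if len(cache) > capacity:
                    cache.popitem(last=False)  # evict least recently used
    return hits / total


# Hypothetical sizes: a 24-unit working set against a 16-unit pool
# (one half of a split L3) and a 32-unit pool (unified L3).
split = hit_rate(capacity=16, working_set=24)
unified = hit_rate(capacity=32, working_set=24)
print(split, unified)  # 0.0 0.9
```

In this toy model the smaller pool never hits, because each item is evicted just before it is needed again, while the larger pool only misses on the first pass. Real workloads are nowhere near this clean, but the cliff when the working set outgrows the cache is the point.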

If the term “L3” sounds familiar, it’s because that’s the cache AMD stacks on top of the die in the X3D chips! (Also, games that lean heavily on cache and whose data keeps growing can wipe out the X3D advantage once they outgrow the extra cache. See Factorio.)

And god help you if you are constantly going to main memory to fetch the data the CPU needs, especially if you are evicting data the CPU will need in the very next frame (or sooner).
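To put rough numbers on that, here is a back-of-the-envelope sketch of average access time as a weighted mix of cache hits and trips to main memory. The latencies (10 ns for a cache hit, 80 ns for main memory) are placeholder values I picked for illustration, not figures for any specific chip, and `average_access_ns` is a made-up helper name.

```python
def average_access_ns(hit_rate, cache_ns, memory_ns):
    """Average latency when `hit_rate` of accesses hit cache and the rest go to RAM."""
    return hit_rate * cache_ns + (1.0 - hit_rate) * memory_ns


# Placeholder latencies, for illustration only.
CACHE_NS = 10.0
MEMORY_NS = 80.0

for rate in (1.0, 0.5, 0.0):
    print(rate, average_access_ns(rate, CACHE_NS, MEMORY_NS))
```

With these placeholder numbers, dropping from hitting cache every time to hitting it half the time takes the average from 10 ns to 45 ns per access, which is the kind of stall that extra threads cannot buy back.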

Sorry, this is just a long-winded way of saying that the architectural improvements of Zen3 almost always outweigh the 5600/X's two-core (four-thread) deficit against the Zen2 3700/X.

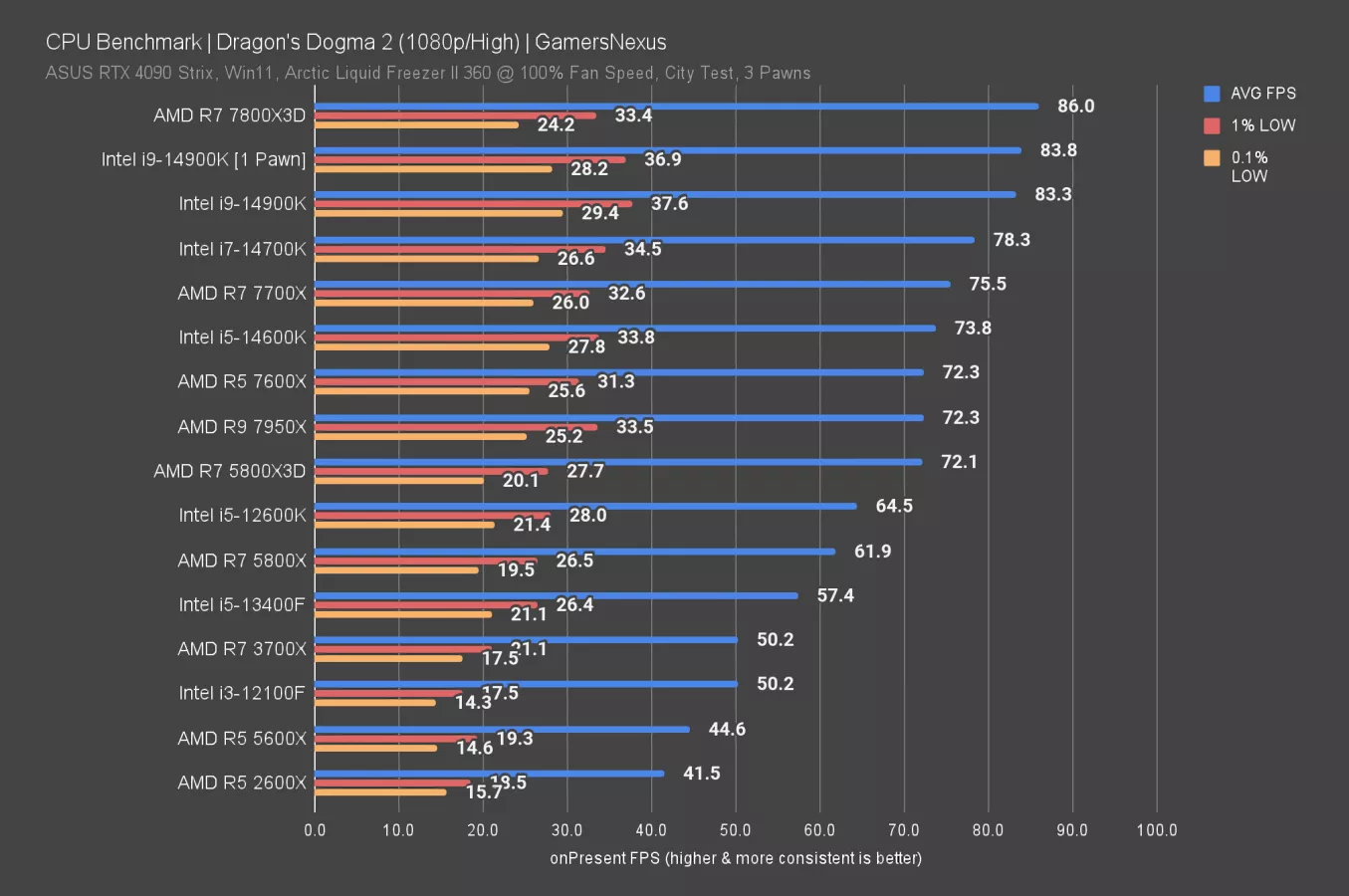

I’d go on about Dragon’s Dogma being a hot mess, but I’d just be reposting things from people more knowledgeable on the subject. Plus, I need to take my medication after surgery (woo!). You’re just going to have to trust the internet on that one. Consider that DD2 might be so thread-hungry because it does such an inefficient job of utilizing threads to begin with.